Google releases Gemini. Is it better than GPT-4? - Weekly Roundup - Issue #444

Plus: new AI Alliance; Elon Musk seeks $1B in funding for xAI; humanoid robots could cost $3/hour; tiny robots made from human cells; and more!

Welcome to Weekly Roundup Issue #444. The main story of this week is the release of Gemini, Google’s long-awaited response to OpenAI’s GPT-4. We will go through the technical document and other materials released by Google as well as interviews to get a better understanding of what Gemini is capable of and to answer the question in the heading - is it better than GPT-4?

In other AI news, IBM, Meta, and more than 50 other organizations have launched the AI Alliance. Elon Musk is seeking $1B in funding for xAI, and Mistral AI, a company less than a year old, is now valued at $2B. In the field of robotics, Agility Robotics’ CEO anticipates that their humanoid robot Digit will eventually operate at a cost of $3 per hour, while Anduril has released an autonomous combat jet drone. Meanwhile, at the intersection of robotics and biology, researchers have developed anthrobots, tiny robots made from human cells.

Google releases Gemini. Is it better than GPT-4?

It has finally happened. Gemini, Google’s long-awaited response to OpenAI’s GPT-4, first teased six months ago at the Google I/O conference, is here.

Gemini is not a single model but a family of models that comes in three sizes - Nano, Pro and Ultra.

Gemini Nano is the smallest model in the Gemini family. Nano is designed to run directly on mobile devices and it comes in two versions - Nano-1 with 1.8B parameters and Nano-2 with 3.25B parameters, targeting low- and high-memory devices respectively.

The second model in the Gemini family is Gemini Pro. You can think of it as an equivalent to OpenAI’s GPT-3.5, which powers the free version of ChatGPT. Gemini Pro is optimised for performance and speed.

Gemini Ultra is the biggest and most capable model from the Gemini family. As Google describes it, Gemini Ultra is the “most capable model that delivers state-of-the-art performance across a wide range of highly complex tasks, including reasoning and multimodal tasks”. Gemini Ultra is a direct competitor to GPT-4.

Google did not disclose how big Gemini Pro and Ultra are.

All Gemini models can support a 32k token context window, meaning Gemini can take about 24,000 words or 87 pages of text. For comparison, the new GPT-4 Turbo has a 128k token context window (about 100,000 words, equivalent to 300 pages of a standard book) and Anthropic’s Claude 2.1 can take in 200K tokens (about 150,000 words, or over 500 pages of text).
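The word counts above are consistent with a common rule of thumb of roughly 0.75 English words per token. The short sketch below reproduces that arithmetic. The 0.75 ratio, the round token counts (32,000 rather than 32,768, and so on) and the function name are my assumptions for illustration, not figures from Google, OpenAI or Anthropic, and real ratios vary with the tokenizer and the text.

```python
# Rough conversion from context window size (tokens) to English words.
# ASSUMPTION: ~0.75 words per token, a common rule of thumb; the real
# ratio depends on the tokenizer and on the text being encoded.
WORDS_PER_TOKEN = 0.75


def approx_words(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit into a context window."""
    return round(context_tokens * words_per_token)


# Context window sizes as quoted in the text, taken as round numbers.
context_windows = {
    "Gemini": 32_000,
    "GPT-4 Turbo": 128_000,
    "Claude 2.1": 200_000,
}

for model, tokens in context_windows.items():
    print(f"{model}: {tokens:,} tokens is about {approx_words(tokens):,} words")
```

Under these assumptions, GPT-4 Turbo comes out at 96,000 words, which is in line with the "about 100,000 words" figure quoted above.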

How does Gemini compare to GPT-4?

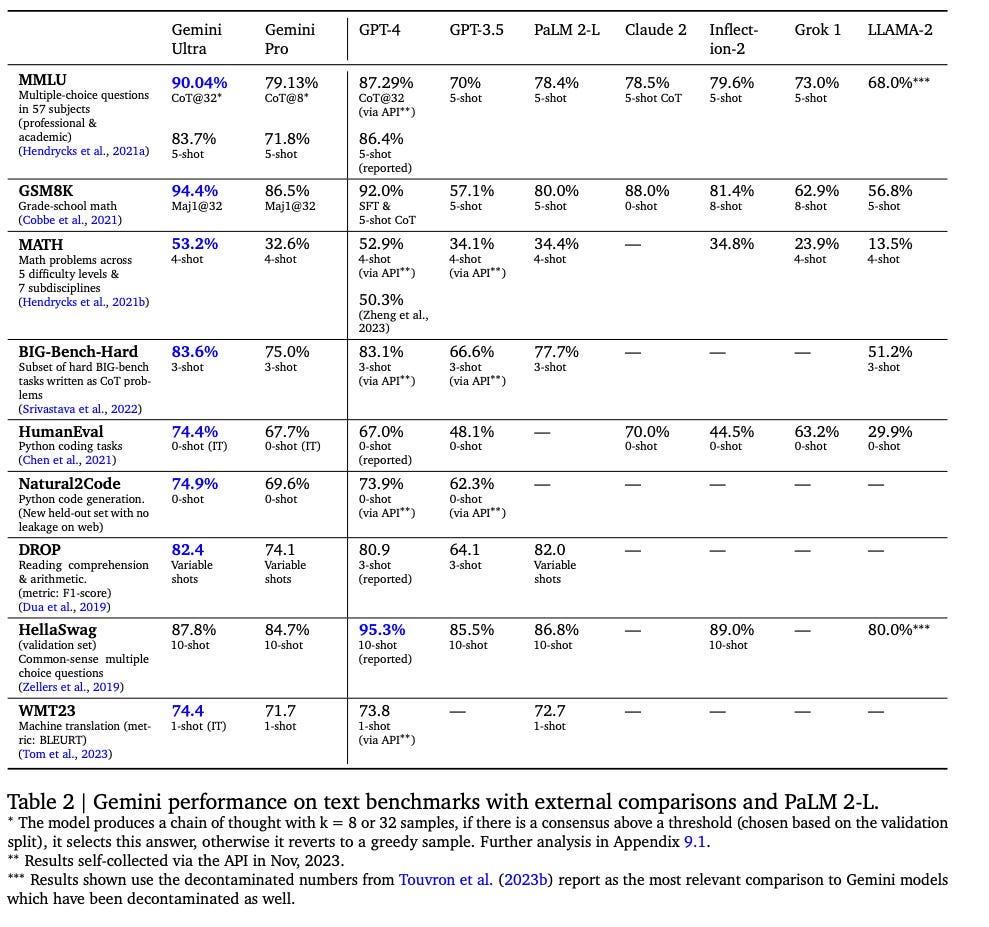

Now that we know what the Gemini models are, the next question is how good they are. The short answer is that, according to metrics provided by Google in the Gemini technical report, Gemini Ultra and Pro are at least at the same level as what OpenAI has to offer.

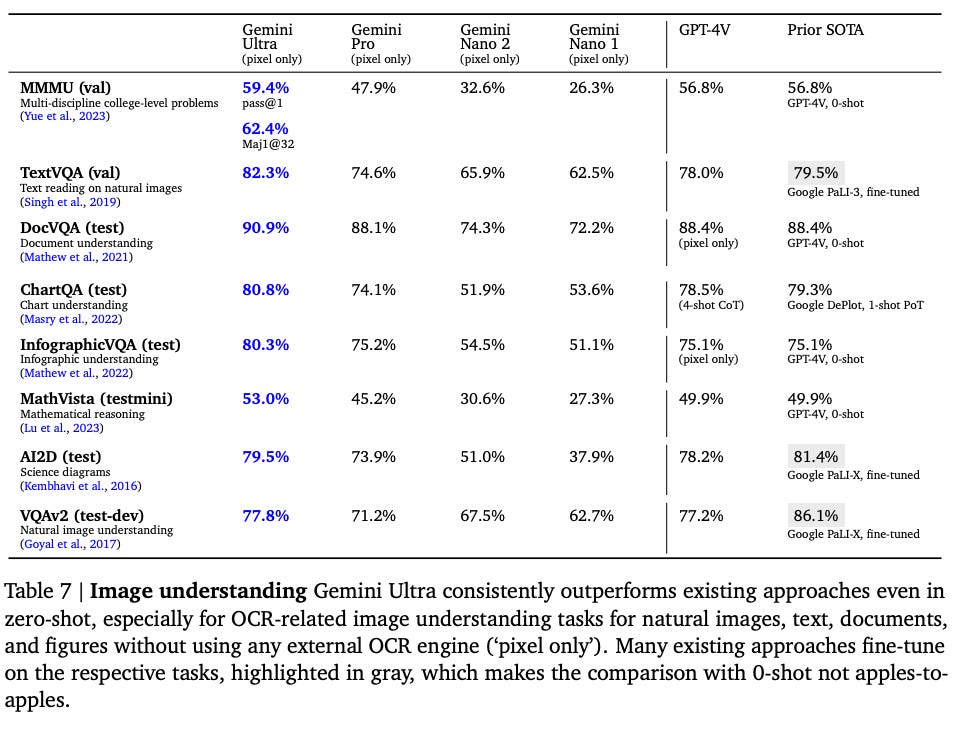

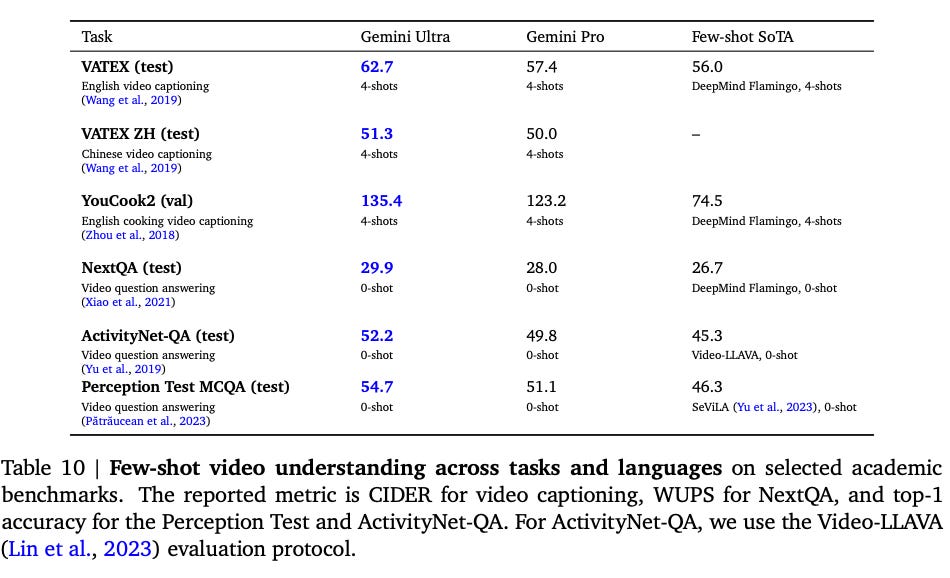

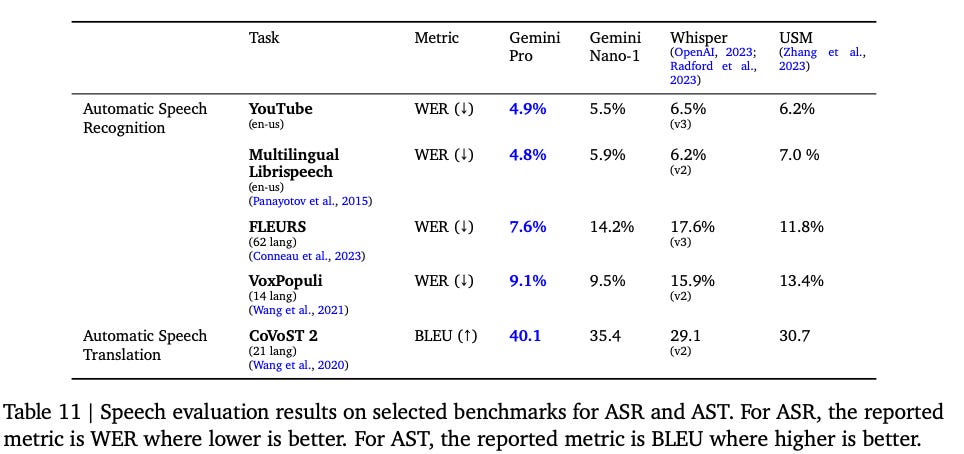

As Google engineers write in the technical report, Gemini Ultra “achieves new state-of-the-art results in 30 of 32 benchmarks we report on, including 10 of 12 popular text and reasoning benchmarks, 9 of 9 image understanding benchmarks, 6 of 6 video understanding benchmarks, and 5 of 5 speech recognition and speech translation benchmarks.”

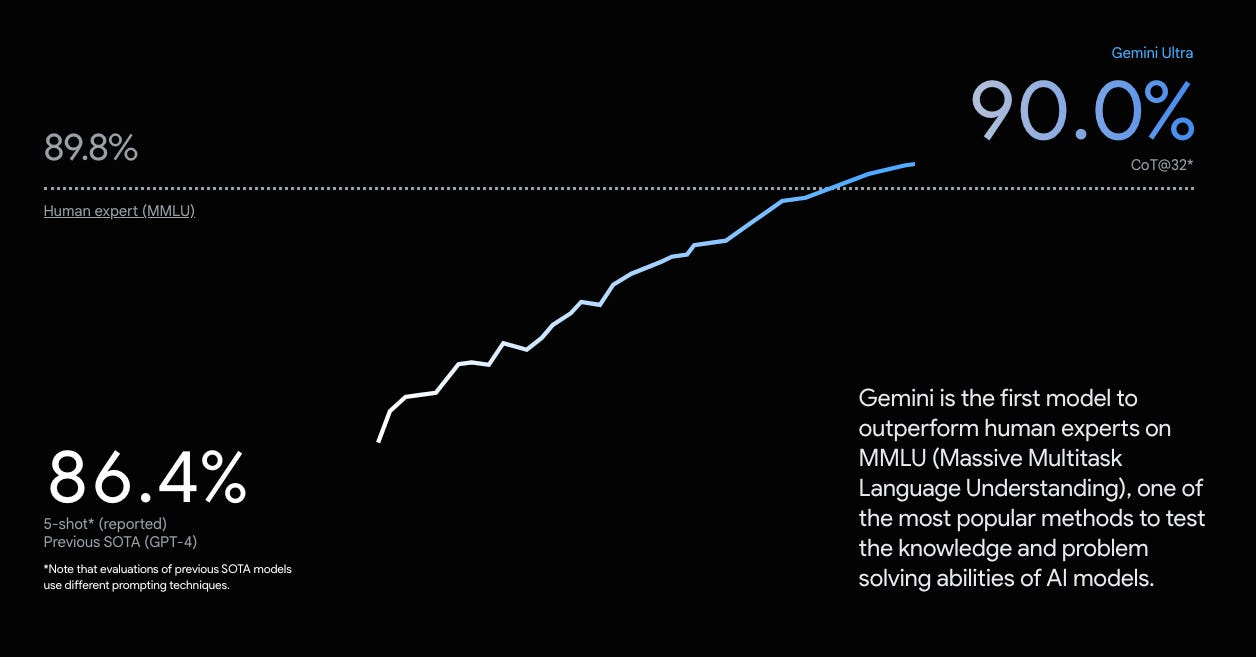

A note on the MMLU test results at the top of the table: the paper presents two sets of numbers for these results - one set from Chain-of-Thought (CoT) prompting and another from 5-shot prompting, where the model is given five worked examples for guidance. The two setups are not directly comparable. Google has taken some creative liberty in presenting these results and mixed CoT results with 5-shot results to make Gemini Ultra look better. The table above has more reasonable numbers.
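To make the difference between the two setups concrete, here is a minimal sketch of how a 5-shot prompt and a Chain-of-Thought prompt might be assembled. The function names, the prompt wording and the example questions are all hypothetical and made up for illustration; this is not the evaluation harness used by Google or OpenAI.

```python
# Two ways of prompting a model with the same question.
# ASSUMPTION: the prompt templates below are illustrative only and are
# not the templates used in the Gemini technical report.


def build_five_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """5-shot: show five solved question/answer pairs, then the new question."""
    if len(examples) != 5:
        raise ValueError("a 5-shot prompt needs exactly five examples")
    shots = "\n\n".join(f"Question: {q}\nAnswer: {a}" for q, a in examples)
    return f"{shots}\n\nQuestion: {question}\nAnswer:"


def build_cot_prompt(question: str) -> str:
    """Chain-of-Thought: ask the model to reason step by step before answering."""
    return f"Question: {question}\nLet's think step by step."


# Made-up examples, used only to show the shape of the prompt.
examples = [
    ("What is 2 + 2?", "4"),
    ("What is 3 + 5?", "8"),
    ("What is 7 - 4?", "3"),
    ("What is 6 * 2?", "12"),
    ("What is 9 / 3?", "3"),
]
question = "What is 8 + 6?"

print(build_five_shot_prompt(examples, question))
print("---")
print(build_cot_prompt(question))
```

Because the model sees different inputs in each case, a score obtained with one style says little about how the same model would score with the other.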

There are also some problems with the MMLU test itself, and its results should not be read as proof that Gemini Ultra, GPT-4 or other models are better than expert humans.

It is a bit disappointing that Google resorted to cherry-picking test results, because Gemini is genuinely good, at least according to the results reported by Google engineers. It is the new state-of-the-art in image understanding, video understanding and speech understanding.

Overall, the Gemini family of models looks very good. In many areas, it marks a new state-of-the-art for large language models.

You can find more detailed test results in the Gemini: A Family of Highly Capable Multimodal Models paper.

Multimodality from the ground up

Unlike other multimodal models, Gemini has been designed and trained with multimodality in mind from the very beginning. Gemini can seamlessly combine inputs from text, images, video, audio and code.

It’s worth noting that Gemini models are natively multimodal. It means that the models take text, images, video and audio as they are. For example, it is common for audio inputs to be transcribed into text first and then fed into the model. Gemini does not do that, which preserves valuable information that could have been lost during transcription.

This native multimodality also improved the overall performance of Gemini models. Researchers note that training Gemini across text, image, audio, and video resulted in Gemini producing better responses, even beating models specialising in a given modality, like speech recognition.

Availability

Gemini Nano is available on Google Pixel 8 Pro where it can summarise text and prepare responses to text messages.

Gemini Pro is now available in Google Bard in over 170 countries. However, if you live in the EU or the UK, you will have to wait, as Gemini Pro is not available there yet for regulatory reasons. Starting on December 13, developers and enterprise customers can access Gemini Pro via the Gemini API in Google AI Studio or Google Cloud Vertex AI. Currently, Gemini Pro can only understand text and code, and we have to wait for the full multimodality to be available.

Gemini Ultra is not available yet and is planned to be released early next year, once the final fine-tuning and reinforcement learning from human feedback stages are complete. Just like Gemini Pro, Gemini Ultra will be available via Google's API. It will also be available via Bard Advanced, which looks like a service similar to ChatGPT Plus.

What’s next?

“Welcome to the Gemini era”, announces the page promoting Google's newest and most advanced AI model. “AI is a profound platform shift, bigger than web or mobile. And so it represents a big step for us from that moment as well,” said Sundar Pichai in an interview with MIT Technology Review.

Google's recent showcase is quite impressive, potentially setting a new benchmark or at least rivalling the best AI models currently available. However, a definitive comparison with GPT-4 will have to wait until the release of Gemini Ultra early next year.

Google is back and the AI race just got crazier.

One thing that went under the radar was the release of AlphaCode 2 alongside Gemini. I will take a closer look at the AlphaCode 2 technical paper on Monday, as it has some interesting things that echo OpenAI’s research on Q* and hint at what the top AI labs are working on. Don’t miss out - make sure to subscribe to the Humanity Redefined newsletter to be notified when that analysis is out.

If you enjoy this post, please click the ❤️ button or share it.

I warmly welcome all new subscribers to the newsletter this week. I’m happy to have you here and I hope you’ll enjoy my work.

The best way to support the Humanity Redefined newsletter is by becoming a paid subscriber.

If you enjoy and find value in my writing, please hit the like button and share your thoughts in the comments. Additionally, please consider sharing this newsletter with others who might also find it valuable.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🧠 Artificial Intelligence

Meta, IBM and others launch AI Alliance

IBM and Meta, in collaboration with over 50 global organizations, have launched the AI Alliance, which aims to foster open and transparent innovation in AI, emphasizing the importance of collaboration and information sharing for inclusive and rapid innovation. Its focus is on accelerating responsible AI innovation while addressing safety, trust, diversity, and economic competitiveness. Key objectives include developing benchmarks, standards, and tools for AI safety and security, advancing open foundation models, boosting AI hardware ecosystems, supporting global AI skills development, and informing public discourse on AI. The AI Alliance also aims to encourage open AI development in safe and beneficial ways through various initiatives and events. Apart from Meta and IBM, the list of founding members includes AMD, Intel, Hugging Face, Linux Foundation, and Stability AI, as well as many universities and other organisations. Notably absent from the list are Google, Microsoft, OpenAI and Anthropic.

Mistral AI nears $2B valuation — less than 12 months after founding

In June 2023, the French AI startup Mistral AI, founded by former DeepMind and Meta employees, made headlines by securing €105M (around $113.2M) in what was Europe's largest-ever seed round, just weeks after the company was founded. Now, the company is on the verge of closing a fundraising round of approximately €450M ($485M), which is poised to increase its valuation to $2B mere months after its founding. In contrast to other AI startups that focus on developing increasingly large models, Mistral AI is dedicated to creating smaller, developer-oriented models. Their first model, the open-sourced Mistral 7B, has gained significant popularity among open-source developers and the AI community. When fine-tuned, Mistral 7B is capable of competing with much larger models while using fewer resources.

Elon Musk is looking to raise $1 billion for xAI

Elon Musk is seeking to raise $1 billion for his AI company, xAI, having already secured approximately $135 million from undisclosed investors. xAI is developing Grok, an AI bot designed to rival OpenAI's ChatGPT, Google's Bard, and Anthropic's Claude. Grok is supposed to be released in beta to X Premium+ subscribers soon. It aims to differentiate itself by answering more controversial questions and by drawing on real-time information from X, though it faces the challenge of discerning accurate news from misinformation.

Pika, which is building AI tools to generate and edit videos, raises $55M

Pika Labs is a new player in the generative AI scene, aiming to build AI tools to generate videos, similar to how we can currently generate images with Midjourney or DALL-E. This week, Pika announced a $55M funding round, bringing the total investment in the startup to $110M. “Video is at the heart of entertainment, yet the process of making high-quality videos to date is still complicated and resource-intensive,” Pika writes in a blog post. “When we started Pika six months ago, we wanted to push the boundaries of technology and design a future interface of video making that is effortless and accessible to everyone. Since then, we’re proud to have grown the Pika community to half a million users, who are generating millions of videos per week.”

UK’s CMA is looking at whether Microsoft and OpenAI tie-up is a ‘relevant merger’

The recent events at OpenAI and their resolution raised questions about how much control Microsoft has over OpenAI. The UK's Competition and Markets Authority (CMA) asked the same question and launched an inquiry into whether the deepening ties between Microsoft and OpenAI constitute a "relevant merger situation," potentially impacting market competition. The CMA's investigation begins with an invitation for comments from both companies and third parties. This inquiry follows Microsoft's substantial investment in OpenAI last year, giving it significant influence in the company, and their close collaboration in developing AI services. The CMA is concerned about the potential for these developments to hinder competition in the rapidly evolving AI market and foundation models, particularly given the significant roles Microsoft and OpenAI play in this sector. The investigation will explore various aspects, including the distinctiveness of the businesses, revenue generated through their relationship, and their market share in AI.

Google TPU v5p AI Chip Launches Alongside Gemini

The spotlight this week was on the release of Google Gemini, but it wasn't the only major reveal from Google. Alongside Gemini, the new TPU v5p AI chips were also unveiled. These chips, which have already been used to train Gemini, are reported to offer approximately 2.3 times better performance per dollar compared to the TPU v4, and they can train Large Language Models (LLMs) at a speed 2.8 times faster than their predecessor.

🤖 Robotics

Agility Robotics: Amazon's humanoid warehouse robots will eventually cost only $3 per hour to operate

Agility Robotics, whose humanoid robot Digit is currently being tested by Amazon, predicts that as production of Digit ramps up, the cost of operating the robot can decrease from the current $10-$12 per hour to $3 per hour. Agility Robotics is one of the leading companies in the field of commercial humanoid robots and is the only company currently offering one for purchase.

Anduril reveals Roadrunner, a jet-powered autonomous combat drone

Anduril, a defence startup founded by Palmer Luckey, the creator of Oculus Rift, recently unveiled their latest turbojet combat drone named Roadrunner. This reusable, vertical take-off and landing (VTOL) autonomous drone is capable of carrying various payloads, including explosives. Anduril is emerging as a prominent company in the defence sector, a topic I explore in depth in my article The Rise of Military AI and the Military-Tech Complex.

Dobb·E: An open-source, general framework for learning household robotic manipulation

Created by researchers from NYU and Meta, Dobb·E is “an affordable yet versatile general-purpose system for learning robotic manipulation within household settings”. Using a demonstration collection tool called The Stick (which is literally just a stick with some 3D-printed parts and an iPhone), a human can show how an action needs to be performed and within 5 minutes, Dobb·E can learn it and then perform that action using a real robot. Researchers behind Dobb·E hope their project will help advance the development of general household robots. The entire software stack, models, data and hardware designs are open-source.

6 robotics trends from iREX 2023

The Robot Report highlights six trends observed at iREX 2023, an annual conference showcasing trends in the global robotics market. This year's key trends include the growth of collaborative robots (cobots), the emergence of zero-teach robotics, and an increased presence of Chinese players in the industry, among others.

🧬 Biotechnology

Tiny robots made from human cells heal damaged tissue

Scientists have developed anthrobots, tiny robots made of human cells capable of repairing damaged neural tissue. These self-assembling robots are a follow-up to xenobots, the first biological robots made from frog cells. The anthrobots demonstrated their therapeutic potential by healing a layer of scratched neural tissue within three days, without any genetic modification. Led by developmental biologist Michael Levin at Tufts University, the research team envisions future applications for anthrobots in medical scenarios like clearing arteries, breaking up mucus, or drug delivery. They also see potential in using biobots, robots made from biological material, in areas like sustainable construction and space exploration.

Want to Store a Message in DNA? That’ll Be $1,000

DNA is often seen as the blueprint for a living being. However, the same mechanism that encodes the diversity of life on Earth can also be used for information storage. With its dense storage capacity and stability over time, DNA presents an attractive alternative to traditional data storage methods. Theoretically, one gram of DNA could hold up to 455 exabytes of information, which is enough to store the entire internet with a lot of space left. This article highlights Biomemory, a French company that is offering wallet-sized cards, each capable of storing one kilobyte of text data using DNA. Priced at $1,000 for two cards, this new technology offers a unique and potentially long-lasting storage solution. Although it is currently expensive and limited in capacity, the technology is expected to become more affordable and efficient as it continues to develop.
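To put these numbers side by side, here is a quick back-of-the-envelope calculation using only the figures quoted above. It assumes a kilobyte is 1,000 bytes and an exabyte is 10^18 bytes; the variable names are mine, and the result compares a theoretical density with a commercial product, so treat it as an illustration of scale rather than a forecast.

```python
# Back-of-the-envelope numbers for DNA storage, using figures from the text.
# ASSUMPTIONS: 1 kilobyte = 1,000 bytes and 1 exabyte = 10**18 bytes.
PRICE_USD = 1_000          # price for a pack of two cards
CARDS_PER_PACK = 2
BYTES_PER_CARD = 1_000     # one kilobyte of text per card
THEORETICAL_BYTES_PER_GRAM = 455 * 10**18  # 455 exabytes per gram of DNA

cost_per_kilobyte = PRICE_USD / CARDS_PER_PACK
cost_per_byte = PRICE_USD / (CARDS_PER_PACK * BYTES_PER_CARD)

# How many one-kilobyte cards would match the theoretical capacity of one gram?
cards_per_gram_capacity = THEORETICAL_BYTES_PER_GRAM // BYTES_PER_CARD

print(f"Cost per kilobyte: ${cost_per_kilobyte:,.2f}")
print(f"Cost per byte: ${cost_per_byte:.2f}")
print(f"Cards needed to match one gram of DNA: {cards_per_gram_capacity:.3e}")
```

At $500 per kilobyte, the gap between today's product and the theoretical density shows how early this technology still is.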

‘Treasure trove’ of new CRISPR systems holds promise for genome editing

Researchers have discovered new, rare types of CRISPR systems that could be adapted for genome editing, expanding the known diversity of these systems beyond the six previously identified types (I–VI). Using an algorithm called FLSHclust, the team analyzed millions of genomes and identified around 130,000 genes associated with CRISPR, including 188 never seen before. Among these is a novel system targeting RNA, named type VII. However, it's still uncertain if these systems, including type VII, will be practical for genetic engineering.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!