DBRX - the new king of open source LLMs - Weekly News Roundup - Issue #460

Plus: are Rabbit R1 and Humane AI Pin scams?; Anthropic gets $2.75 billion from Amazon; Grok-1.5; robotic police dog got shot multiple times; drugs made in space; and more!

Hello and welcome to the Weekly News Roundup, Issue #460. The large language model space has seen some major reshuffling in the last few weeks. First, Anthropic’s Claude 3 Opus outperformed GPT-4, and now DBRX, a language model from Databricks, has claimed the title of the best open-source large language model.

In other news, Claude 3 Opus dethroned GPT-4 on the Chatbot Arena Leaderboard; Apple is not-so-subtly hinting at AI features to be revealed at WWDC 2024; xAI has announced Grok-1.5, and Anthropic received a $2.75 billion investment from Amazon. Apart from that, this week’s issue includes an open-source robotic cat, a story about a robotic police dog that was shot multiple times and was credited with helping to avoid potential bloodshed, and one company's dream of manufacturing drugs in space.

Enjoy!

The space of open-source large language models has been dominated by variants of Meta’s Llama 2, Mistral-7B and Mixtral from Mistral, and small models from Microsoft (Phi-2) and Google (Gemma). All these models offer very good performance and, in some cases, come very close to or even surpass proprietary models. But now, we have a new king of open-source large language models, and it doesn’t come from Meta, Mistral, Google, or Microsoft. It comes from Databricks, and its name is DBRX.

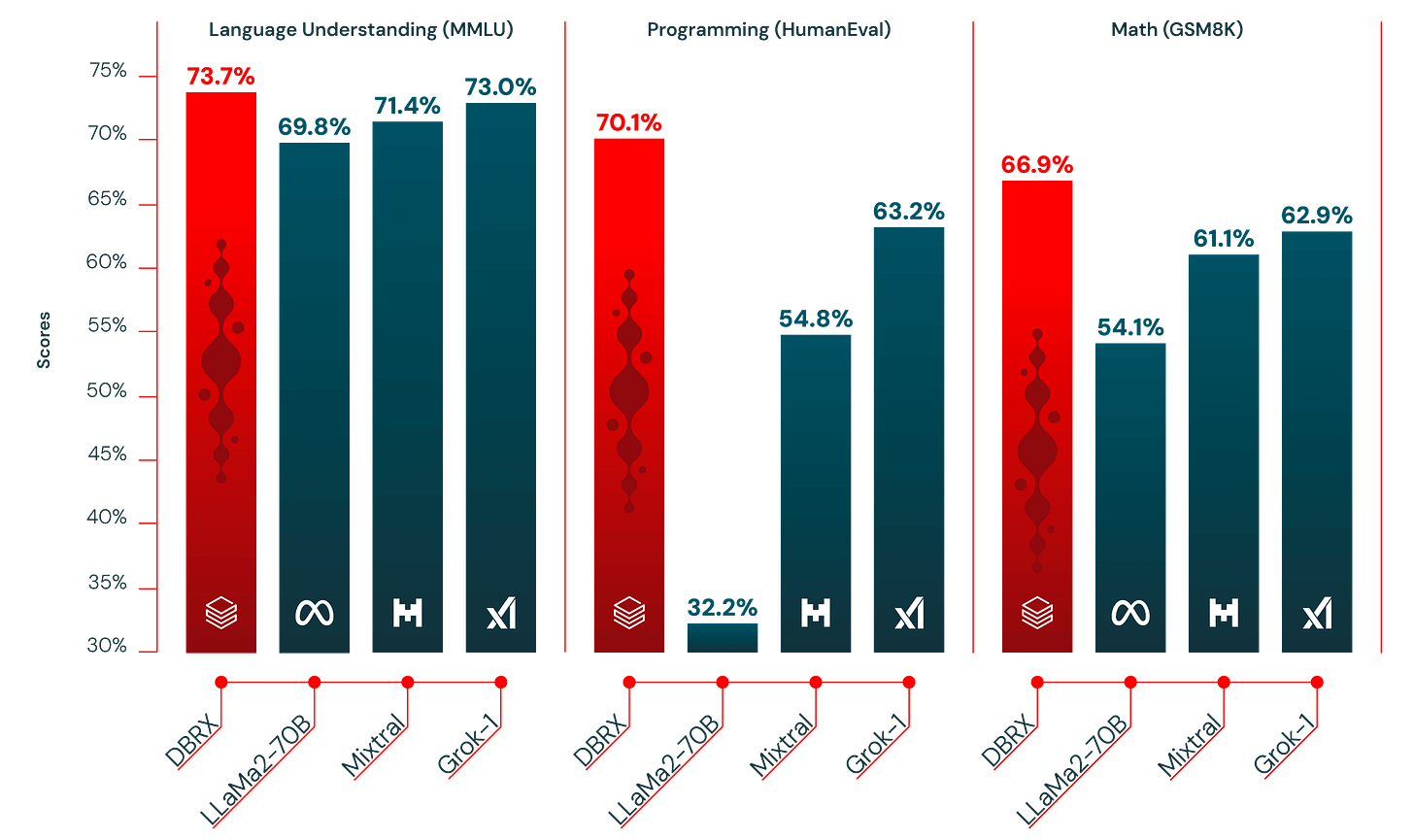

Seemingly out of nowhere, Databricks released DBRX just a couple of days ago and immediately claimed the crown of the best open-source large language model, with the fine-tuned model even being on par with Google’s Gemini 1.0 Pro.

DBRX is a Mixture of Experts (MoE) model with 132B total parameters and a 32k context window. DBRX has 16 experts and chooses 4, while Mixtral and Grok-1 have 8 experts and choose 2. According to Databricks, choosing 4 out of 16 experts gives 65x more possible combinations of experts, which improves the model’s quality.
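For the curious, the 65x figure can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: it assumes a "combination" means an unordered set of active experts, and the helper name `expert_combinations` is mine, not something from Databricks.

```python
from math import comb


def expert_combinations(total_experts: int, active_experts: int) -> int:
    """Count the distinct unordered sets of experts that could be active together."""
    return comb(total_experts, active_experts)


# DBRX: 16 experts, 4 chosen
dbrx = expert_combinations(16, 4)
# Mixtral and Grok-1: 8 experts, 2 chosen
mixtral = expert_combinations(8, 2)

# How many times more combinations DBRX has to choose from
ratio = dbrx // mixtral

print(dbrx, mixtral, ratio)  # 1820 28 65
```

So 16-choose-4 gives 1,820 possible expert sets against 28 for 8-choose-2, which is where the 65x comes from.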

In the post announcing DBRX, Databricks boasts some impressive numbers. According to that post, inference is up to 2x faster than LLaMA2-70B while being more efficient to train.

Both the Base and Instruct versions of DBRX are available on Hugging Face, and DBRX is also available on GitHub. If you don’t want to install DBRX, the model is deployed on Hugging Face, so you can try it out there.

I’m happy to see the open-source community keeping up with, and sometimes even surpassing, proprietary models from big tech companies like Microsoft, Google, and OpenAI. Thanks to the companies that contribute their models to the open-source community and the passionate developers behind them, access to state-of-the-art language models is no longer limited to a handful of big companies. Now, anyone can install a tool like Ollama, download a language model, and start experimenting. This lets people who don’t have access to a warehouse full of Nvidia H100 GPUs work with large language models without paying OpenAI, Microsoft, or Google for the privilege of using their LLMs. It’s a positive development for researchers and the overall AI community.

However, despite being called “open source,” DBRX, Mixtral, Llama, and other models are not fully open source. Sure, the code and weights are publicly available, but more is required to truly consider these models open. I’d like to see more details on the training process, including the kind of processing power that was involved, how long it took, and what challenges the developers had to overcome. The biggest omission, however, is the training data. We receive a fully trained model, but we don’t know what it was trained on. There might be a legal reason for not publishing the training data, as it could reveal copyrighted materials used in training these models, potentially exposing the developers to copyright lawsuits. It might be more accurate to use terms like “open weights” or “open code” models instead of “open source,” since the source is not fully open.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

Exosuit Muscle Control Steps Closer to Reality

Researchers and engineers from South Korea and Switzerland are developing a new type of exosuit muscle control named Synapsuit. The main innovation of this exosuit is the use of an electrostatic clutch, which eliminates the need for continuous muscle stimulation just to maintain joint position, thus making the overall experience more pleasant and less fatiguing. The team expects to produce a functional Synapsuit system by 2026.

Human Artificial Chromosomes with Reduced Multimerization Constructed

Researchers from the University of Pennsylvania and the University of Edinburgh have made progress by constructing single-copy human artificial chromosomes (HACs) to tackle the issue of multimerization, a significant challenge in the development of HACs. This innovation has the potential to enable the insertion of large amounts of engineered DNA into cells, marking a significant step forward in the field.

🧠 Artificial Intelligence

Apple WWDC 2024 set for June 10-14, promises to be ‘A(bsolutely) I(ncredible)’

Apple has announced the dates for WWDC. Although Apple hasn’t said so publicly, after Greg Joswiak, Apple SVP, tweeted that it is “going to be Absolutely Incredible”, we can be almost certain that the main theme of the event will be AI. Rumours suggest Apple will introduce new AI-powered features to its products and services, including a complete overhaul of Siri, which might now be powered by Google Gemini.

Announcing Grok-1.5

xAI has announced Grok-1.5, an upgraded version of its large language model. According to the announcement, Grok-1.5 has improved reasoning capabilities and a context length of 128,000 tokens. The model will soon be available on X. There is no mention of open-sourcing the model, as xAI did with Grok-1.

Amazon spends $2.75 billion on AI startup Anthropic in its largest venture investment yet

Anthropic, whose Claude 3 large language models have become the best available, received a $2.75 billion investment from Amazon. This investment is the second tranche of the funding announced in September last year. At that time, Amazon invested $1.25 billion with an option to invest up to $4 billion in total. This news marks the completion of that deal.

OpenAI is expected to release a 'materially better' GPT-5 for its chatbot mid-year

According to Business Insider, OpenAI is poised to release a successor to GPT-4 by mid-2024. The new model is almost certainly being trained as we speak. However, it remains unclear whether this will be a significant upgrade worthy of the GPT-5 name, or a more incremental, GPT-4.5 update, with a more substantial upgrade planned for later.

“The king is dead”—Claude 3 surpasses GPT-4 on Chatbot Arena for the first time

Well, it finally happened. OpenAI's GPT-4 is no longer at the top of the Chatbot Arena Leaderboard, a position that various versions of GPT-4 had occupied since the leaderboard's launch in May 2023. The new leader is Anthropic's Claude 3 Opus.

▶️ Humane AI Pin and Rabbit R1: What Are These Companies Hiding? (13:54)

A couple of months ago, two new devices, Rabbit R1 and Humane AI Pin, entered the stage and grabbed everyone’s attention. These AI-first devices promise to create a new category of devices and redefine how we interact with computers and AI assistants. But is this hype, which led to over 100,000 pre-orders for Rabbit R1, justified? Or are we just getting gimmicks trying to ride the AI wave? This video from Dave2D perfectly expresses the concerns about these devices and their problems.

▶️ Making AI accessible with Andrej Karpathy and Stephanie Zhan (36:58)

In this interview, Andrej Karpathy, a founding member of OpenAI, former director of AI at Tesla, and a well-respected AI researcher, shares his thoughts on AGI, the state of the AI industry, the importance of building a more open and vibrant AI ecosystem, and how we can make building things with AI more accessible.

🤖 Robotics

Boston Dynamics Unleashes New Spot Variant for Research

Boston Dynamics is planning to open Spot for robotics researchers. The Spot RL Researcher Kit will offer much finer control over the robot, allowing for capabilities such as making Spot run much faster than what has been publicly shown. The Spot RL Researcher Kit will include a joint-level control API, an Nvidia Jetson AGX Orin payload, and a simulation environment for Spot based on Nvidia Isaac Lab. The kit is set to be officially released later this year.

Robotic police dog shot multiple times, credited with avoiding potential bloodshed

A police robotic dog named Roscoe is believed to be the first Spot model to have been shot. The robot dog, along with two other robots typically used for bomb disposal, participated in an incident in Massachusetts involving a person barricaded in a home, aiding in the arrest of the suspect without any casualties.

If you're interested in building your own four-legged robotic pet, OpenCat could be the perfect project for you. OpenCat is an open-source Arduino and Raspberry Pi-based quadruped robot designed to be an educational and research tool. Since its launch in 2016, OpenCat has come a long way, fostering a community of makers who have expanded upon the original concept and contributed to the project. This includes designing open-source models for 3D printing, among other improvements.

Using drone swarms to fight forest fires

Researchers from India are exploring the use of drone swarms to fight forest fires. The idea is for a swarm of drones to patrol an area for signs of fire. Once a fire is spotted, the drone closest to the fire becomes the centre to which other drones are attracted. Each drone has the autonomy to calculate the fire's size and potential spread, and to decide how many drones are needed to extinguish the fire. Such drone swarms can also be useful during other natural disasters, such as floods and earthquakes, to locate survivors, deliver water, food, and medicine, and enhance communication.

🧬 Biotechnology

The Next Generation of Cancer Drugs Will Be Made in Space

There is a group of promising drugs that could be used in immunotherapy, but they cannot crystallize properly due to gravity. However, as the space industry begins to take off, some companies, like BioOrbit featured in this article, are exploring the possibility of establishing drug or material manufacturing facilities in low-Earth orbit.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
