Meta Let the Llama 4 Out - Sync #513

Plus: OpenAI closes $40 billion funding round; AI 2027 Project; Figure shows their humanoid robots at work at BMW; DeepMind spinout Isomorphic Labs raises $600M; a teardown of a robot dog; and more!

Hello and welcome to Sync #513!

This week, Meta surprised everyone by releasing the long-awaited Llama 4 models, which we will take a closer look at in this week’s issue of Sync.

Elsewhere in AI, OpenAI closed a $40 billion funding round, while DeepMind spinout Isomorphic Labs raised $600 million. Additionally, OpenAI made image generation in ChatGPT available to everyone, delayed GPT-5, and announced it will release o3 and a new open model.

Over in robotics, Figure gives an update on the ongoing trials of their humanoid robots working at BMW’s car factory. We also have iFixit disassembling a robot dog, a look at how robots are transforming the dairy industry, and a liquid robot.

Additionally, a Chinese scientist ostracised over gene-edited babies seeks a comeback, a paralysed man stands again after receiving reprogrammed stem cells, and we explore how to turn our bodies into computers.

I hope you’ll enjoy this week’s issue of Sync!

Meta Let the Llama 4 Out

When the AI race began in late 2022, no one thought that, of all the big tech companies, Meta would become the leader in the open models space. But with the release of the Llama family of models, Meta became the unlikely champion of open-weight large language models, offering powerful models that developers and researchers could actually use, study, and build upon without being locked behind proprietary APIs.

Then came DeepSeek.

In rapid succession, the Chinese lab released DeepSeek V3 and DeepSeek R1—open models that not only matched Llama 3’s performance but surpassed it on reasoning, coding, and cost-efficiency. Meta suddenly found itself playing catch-up in a race it helped define.

Now, with Llama 4, Meta is making its play to reclaim the crown. Released quietly on a Saturday, this new lineup of models pushes forward on every front: performance, efficiency, scale, and multimodality. Llama 4 is not only a leap in capability, it's a sign that Meta is serious about staying at the forefront of open AI.

The New Llama Lineup

Similar to the previous generation, Llama 4 arrives not as a single model but as a collection of three models, named Scout, Maverick and Behemoth.

All three models adopt a Mixture-of-Experts (MoE) design. Instead of one giant dense network, each Llama 4 model is split into a collection of smaller, specialised sub-models, or “experts”, often described as excelling in specific areas such as coding, mathematics, or writing. A router sends each piece of input to only a few of these experts, so each prompt activates only a small subset of the model’s parameters. This structure dramatically improves efficiency without sacrificing capability, as famously demonstrated by DeepSeek R1.
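To make the idea of activating only a subset of experts more concrete, here is a minimal sketch of top-k expert routing in plain Python. It is illustrative only: the toy experts, the router scores, and the choice of `k=1` are assumptions made for this example and are not taken from Meta's implementation.

```python
import math

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, k=1):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalise over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, router_scores, k=1):
    """Run only the selected experts and mix their outputs by router weight."""
    chosen = route_token(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]

# Four toy "experts" (hypothetical): each is just a different scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]

# Hypothetical router scores for one token; expert 1 scores highest.
output, used = moe_layer(5.0, experts, router_scores=[0.1, 2.0, -1.0, 0.5], k=1)
print(f"experts used: {used}, output: {output}")
```

With `k=1`, three of the four toy experts are never evaluated for this token, which is the source of the efficiency gain: the total parameter count can grow while the compute per token stays roughly fixed.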

Llama 4 is also natively multimodal. Instead of tacking on vision capabilities, it uses early fusion—feeding both visual and textual tokens through the same transformer layers—enabling deep integration between images and language from the outset.
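As a rough illustration of what early fusion means at the input level, the sketch below turns a tiny image into patch tokens and places them in the same sequence as the text tokens, so that a single model would see both. The patch size, the token tagging, and the function names are hypothetical choices for this example; a real model would also embed each token into a vector, which is omitted here.

```python
def patchify(image, patch):
    """Split a 2D pixel grid into flattened patch x patch tiles, row by row.

    Assumes the image height and width are multiples of `patch`.
    """
    rows, cols = len(image), len(image[0])
    tiles = []
    for r in range(0, rows, patch):
        for c in range(0, cols, patch):
            tiles.append(
                [image[r + dr][c + dc] for dr in range(patch) for dc in range(patch)]
            )
    return tiles

def early_fusion_sequence(image, text_tokens, patch=2):
    """Build one combined sequence: image patch tokens followed by text tokens."""
    image_tokens = [("img", tuple(tile)) for tile in patchify(image, patch)]
    text = [("txt", token) for token in text_tokens]
    return image_tokens + text

# A toy 4x4 "image" whose pixel values are just 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
sequence = early_fusion_sequence(image, ["What", "is", "this", "?"])

for kind, value in sequence:
    print(kind, value)
```

The point of the sketch is only that both modalities end up in one sequence processed by the same layers, in contrast to approaches where a separate vision model is attached to a text-only model afterwards.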

Before we meet the Llama 4 lineup, it is worth noting that the presented benchmark results are based on Meta’s internal testing. They’re useful indicators, but without a fully independent evaluation, it’s hard to know how Llama 4 truly stacks up against its competitors in day-to-day tasks. Until more community benchmarking is done, it’s best to take those numbers with a grain of salt.

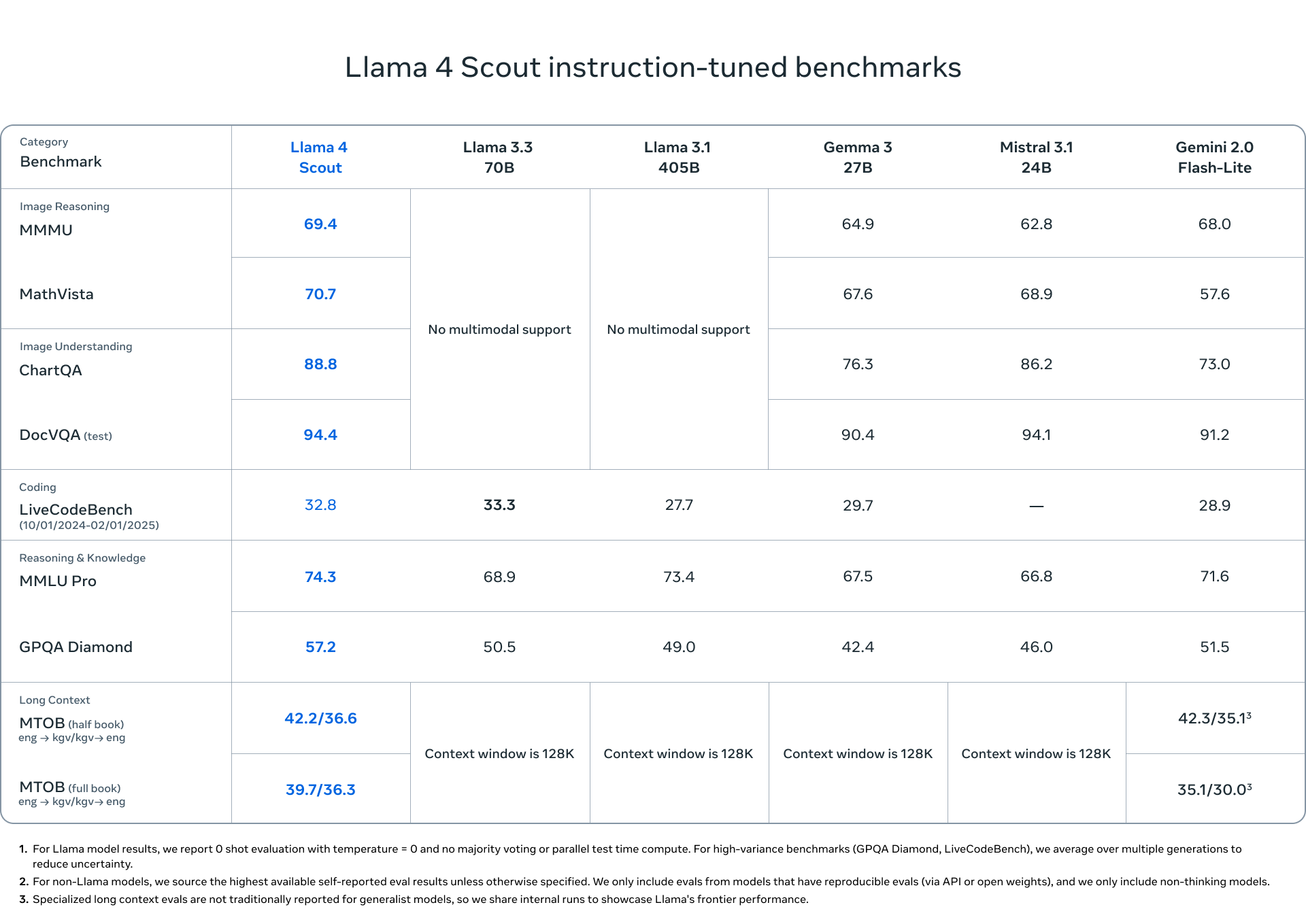

Scout is the smallest and most efficient model in the lineup, with 17 billion active parameters and a total of 109 billion, distributed across 16 experts. Its standout feature is a 10 million token context window—the largest in any public model—offering the possibility of full-book ingestion, massive codebase analysis, or extended memory in long-running chats.

According to Meta’s benchmarks, Scout outperforms Gemini 2.0 Flash-Lite, Gemma 3, and Mistral 3.1 in coding, long-context reasoning, and image understanding tasks.

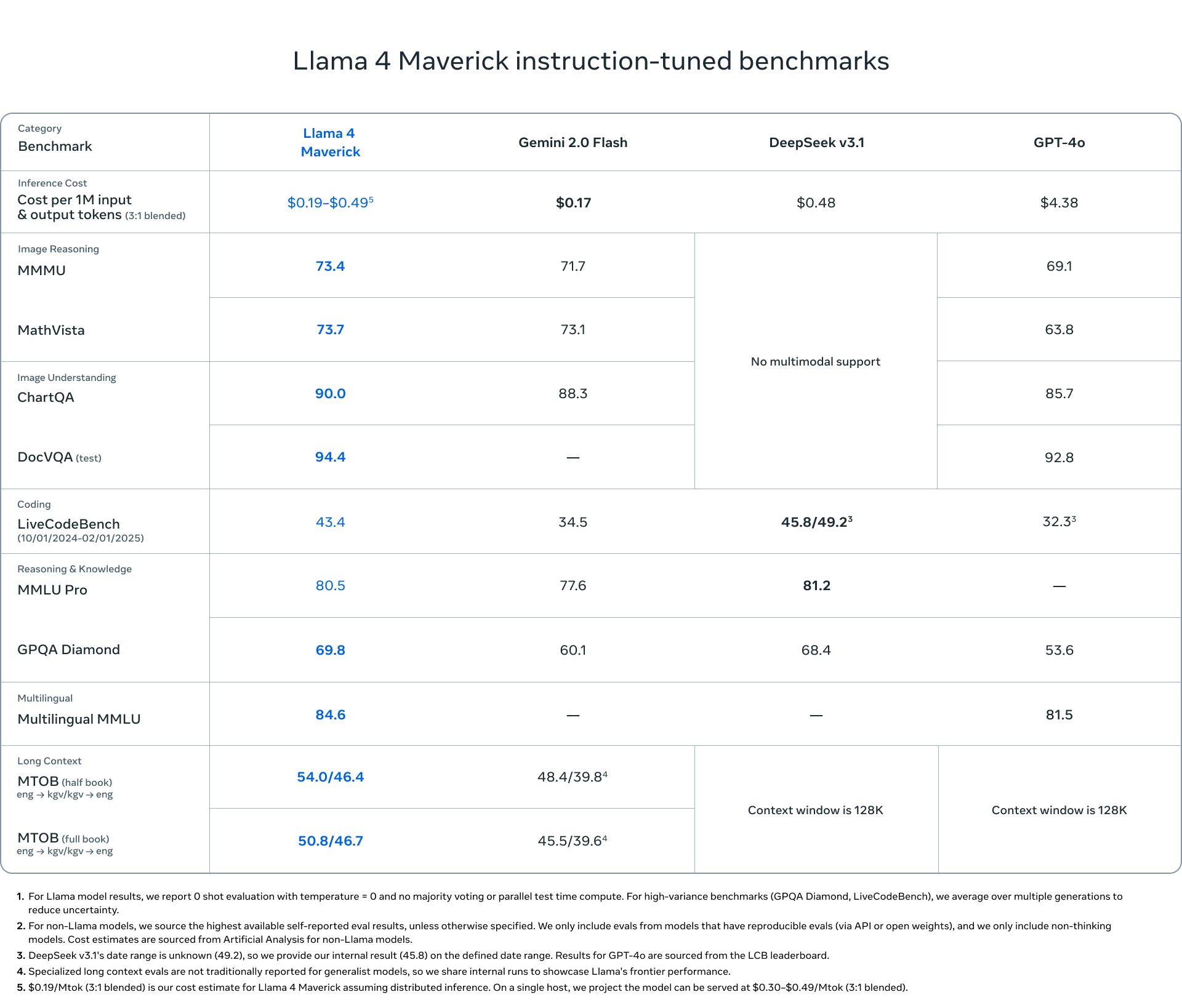

Maverick keeps the same 17 billion active parameter count as Scout but scales up dramatically under the hood with 128 experts and 400 billion total parameters.

Maverick is optimised for assistant-style tasks—fluent conversation, creative writing, and visual reasoning—while maintaining top-tier performance across reasoning and coding challenges.

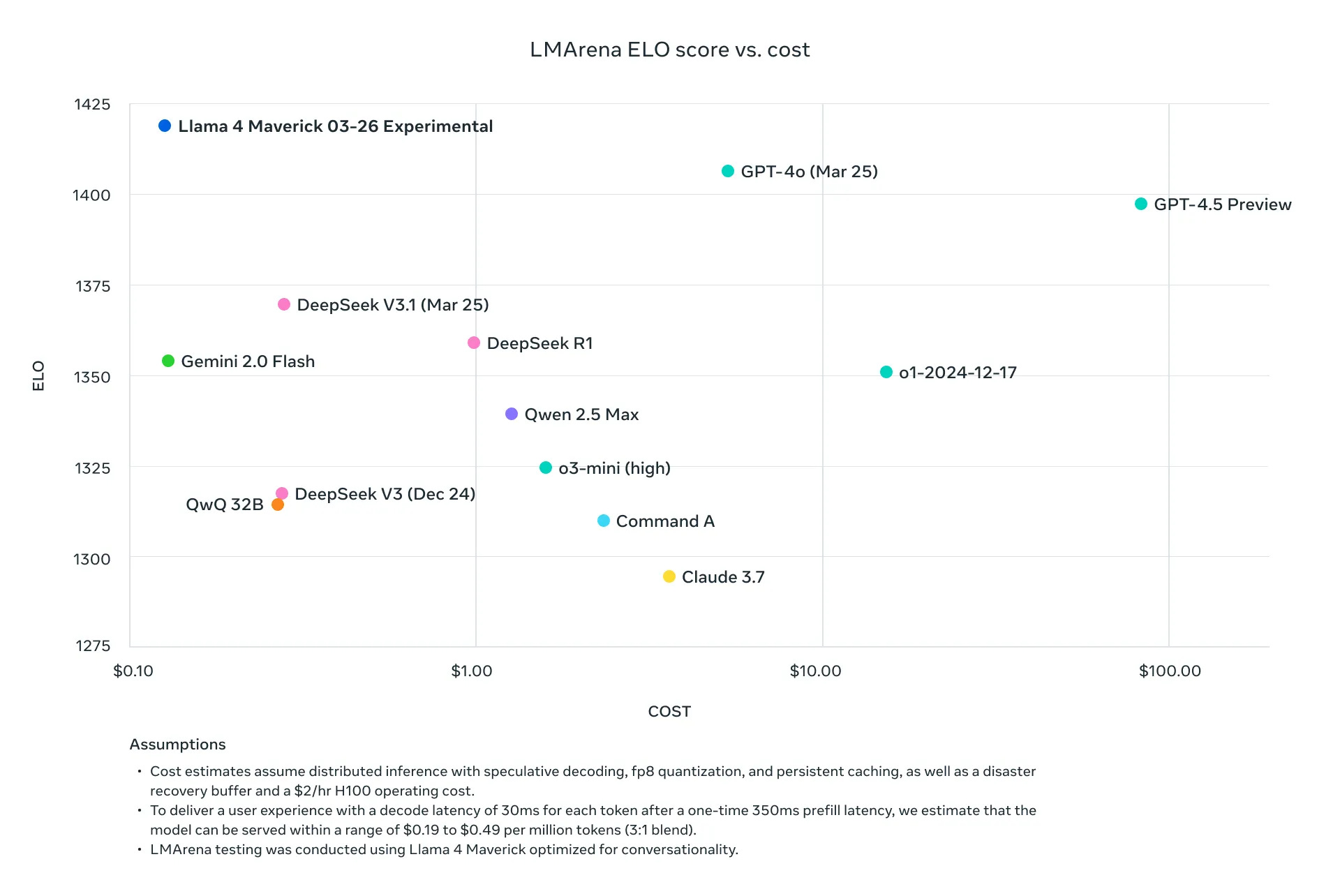

According to Meta, Maverick beats GPT-4o, Gemini 2.0 Flash, and DeepSeek V3. An experimental chat version scored a respectable 1417 on the LMArena leaderboard, making it the best open model there and second overall only to Gemini 2.5 Pro.

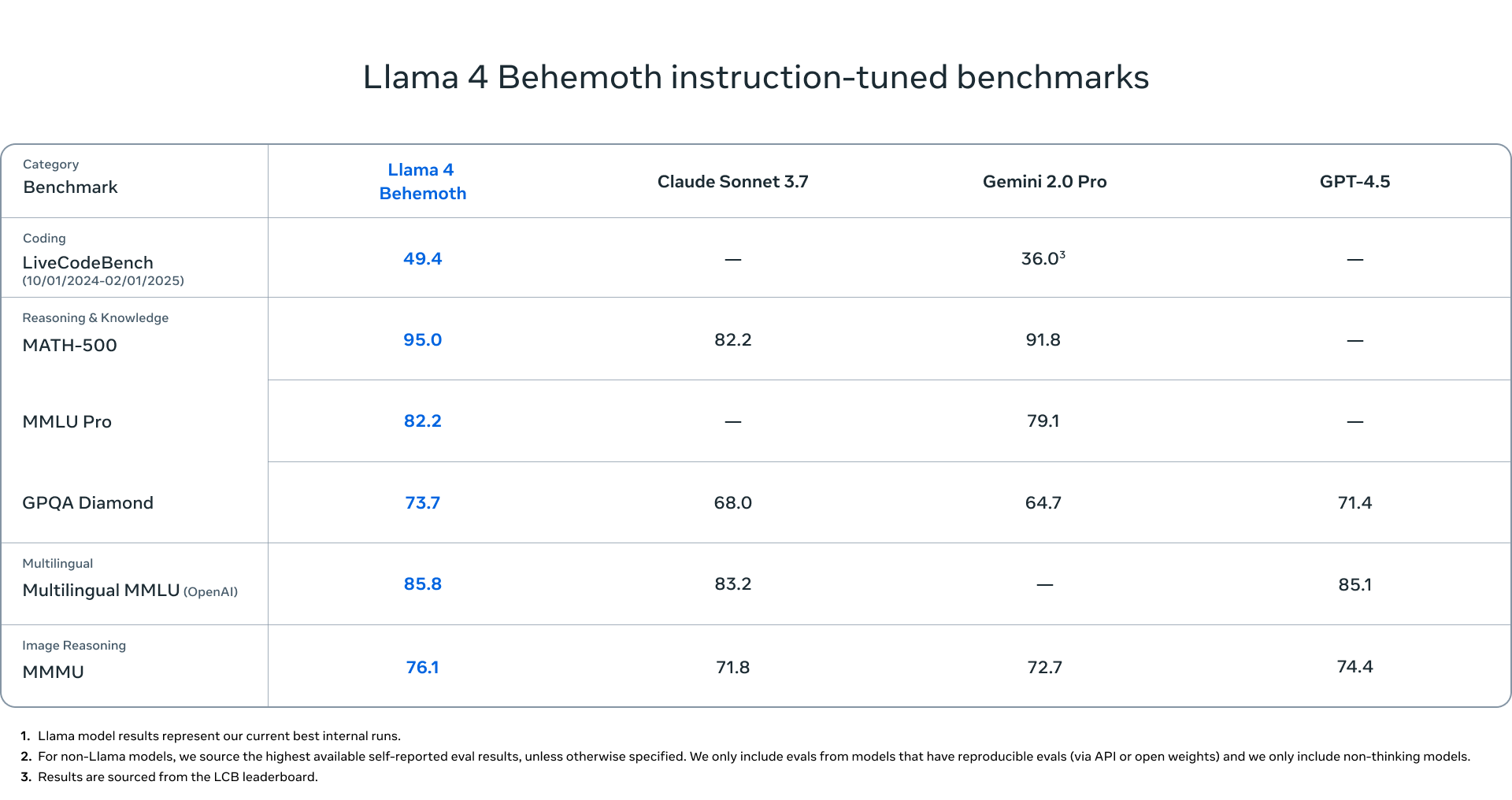

And last but not least, there’s Behemoth. With 288 billion active parameters, 16 experts and nearly 2 trillion parameters in total, it’s among the largest models ever built. Behemoth hasn’t been released yet, but Meta already used it to distil both Scout and Maverick, transferring its knowledge into lighter, more efficient models. Even while still in training, it reportedly outperforms GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro on STEM benchmarks like MATH-500 and GPQA Diamond as well as on multilingual and image reasoning benchmarks.
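The parameter counts quoted above make it easy to compare how sparse each model is. The short sketch below computes the share of parameters that are active per token, using the figures from this article; Behemoth's total is rounded to 2,000 billion because the article only says "nearly 2 trillion", so treat that row as an approximation.

```python
# (active parameters, total parameters) in billions, as quoted in the article.
lineup = {
    "Scout": (17, 109),
    "Maverick": (17, 400),
    "Behemoth": (288, 2000),  # assumption: "nearly 2 trillion" rounded up
}

def active_fraction(active_b, total_b):
    """Share of the model's parameters used for any single token."""
    return active_b / total_b

for name, (active_b, total_b) in lineup.items():
    share = active_fraction(active_b, total_b)
    print(f"{name}: {active_b}B of {total_b}B parameters active ({share:.1%})")
```

Maverick comes out as the sparsest of the three: it has the same active parameter count as Scout but spreads it over far more total parameters.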

Llama 4, with Strings Attached

Llama 4 looks impressive on paper—and in many ways, it is. But there are important catches worth considering.

Firstly, the hardware requirements are steep.

Previous Llama generations included smaller models (1B, 3B, 8B, and 70B parameters) that could run on modest hardware, including personal laptops and even a Raspberry Pi. With Llama 4, the minimum hardware requirements have risen sharply if you want to use these models locally.

Even the smallest model, Scout, requires at least 64GB of GPU memory. For perspective, a 14-inch MacBook Pro with an M4 Max chip and at least 64GB of unified memory costs around $4,000 or more. A Mac Studio with similar specs ranges from $3,000 to over $4,000. Nvidia DGX Spark, Nvidia’s upcoming desktop AI computer with a price tag of $3,000, will also be able to run Llama 4 Scout.

To run Scout, Meta recommends an Nvidia H100 GPU, a data centre-grade card with 80GB of memory and a price tag in the tens of thousands of dollars (assuming you can get one). For Maverick, the recommendation is a full Nvidia DGX H100 system, an even more expensive machine with eight H100s and 640GB of GPU memory, far beyond what most individuals or small teams can access.
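A back-of-the-envelope calculation shows why the memory figures above are so high. Although only 17 billion parameters are active per token, all experts generally have to be held in memory, so the total parameter count is what matters. The sketch below estimates the memory needed for the weights alone at a few precisions. The precisions are assumptions for illustration (the article does not say which one Meta's recommendations rely on), and the estimate ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(total_params_billion, bits_per_param):
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes).

    One billion parameters at 8 bits each take about 1 GB.
    """
    return total_params_billion * bits_per_param / 8

def fits(total_params_billion, bits_per_param, capacity_gb):
    """True if the weights alone fit in the given memory capacity."""
    return weight_memory_gb(total_params_billion, bits_per_param) <= capacity_gb

# Total parameter counts (billions) and memory capacities (GB) from the article.
models = {"Scout": 109, "Maverick": 400}
machines = {"64GB laptop or desktop": 64, "single H100": 80, "DGX H100": 640}

for name, params_b in models.items():
    for bits in (16, 8, 4):  # hypothetical precisions: 16-bit, 8-bit, 4-bit
        need = weight_memory_gb(params_b, bits)
        ok = [label for label, cap in machines.items() if fits(params_b, bits, cap)]
        print(f"{name} at {bits}-bit: ~{need:.1f} GB of weights; fits on: {ok or 'none listed'}")
```

Under these assumptions, Scout only fits on a 64GB machine or a single 80GB H100 when its weights are compressed to roughly 4 bits each, and Maverick fits on a 640GB DGX H100 at 8 bits but not at 16.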

Secondly, Llama 4 models do not have built-in reasoning yet.

While Scout and Maverick perform well on benchmarks, none of the Llama 4 models currently exhibit reasoning behaviour. They do not reflect and question themselves before responding like OpenAI’s o-series or DeepSeek R1 do. They generate outputs in the traditional autoregressive style without internal reflection. That said, given how much Meta appears to have been influenced by DeepSeek, it’s likely this capability (or something like it) is already in the works, with the upcoming LlamaCon on April 29 being a good opportunity to introduce reasoning capabilities.

Thirdly, while Meta released Llama 4 Scout and Maverick as open weights, their use is restricted in key ways:

Developers and companies based in the EU are explicitly barred from using or distributing the models, likely due to strict AI and privacy laws.

Companies with over 700 million monthly active users must request a special license, which Meta can approve or deny at its discretion.

One interesting thing Meta mentioned in the Llama 4 announcement is how the new models handle politically sensitive or controversial topics. Previous versions, particularly Llama 3, were often criticised for refusing to answer questions on divisive issues or leaning visibly toward one side of the political spectrum. In Llama 4, Meta has made a clear effort to address this.

According to Meta’s own evaluations, refusal rates on debated political and social topics dropped from 7% in Llama 3.3 to under 2%. Meta also reports that Llama 4 is now more balanced in which prompts it refuses, with unequal refusals reduced to under 1% on internal tests. Llama 4 is also reported to respond with a strong political lean at half the rate of its predecessor, and it now performs comparably to xAI’s Grok on political bias tests.

Those tweaks come as some White House allies accuse AI chatbots of being too politically “woke.”

With Llama 4, Meta has successfully reclaimed the crown for open-weight models. These are undeniably impressive systems—and they’re available for anyone to use (assuming you have the hardware to run them).

That said, it’s hard not to feel that Meta released Llama 4 in an unfinished state, in part to justify its plans to spend $65 billion on AI this year and to show some good results to investors while getting positive press. The Llama 4 lineup, however impressive, feels incomplete: there are no reasoning or agentic capabilities, and the hardware requirements put the models beyond the reach of many individuals. I hope those shortcomings will be addressed soon.

Both Llama 4 Scout and Maverick are available to download on Llama.com and Hugging Face. Additionally, Meta says Llama 4 is powering Meta AI in WhatsApp, Messenger and Instagram Direct. Llama 4 is also available on the Meta AI website.

If you enjoy this post, please click the ❤️ button or share it.


🦾 More than a human

Chinese Scientist Ostracized Over Gene-Edited Babies Seeks Comeback

In 2018, Chinese scientist He Jiankui made headlines for creating the first gene-edited babies—a move that brought global outrage and notoriety. Now, he's attempting a comeback with a new project aimed at using gene editing to prevent Alzheimer’s disease. He plans to conduct animal studies in the US and eventually human trials in South Africa, where local law would make that possible. Despite serving prison time for unethical research and facing widespread scientific condemnation, He remains defiant, calling ethics a barrier to progress and drawing fresh criticism for secrecy and potential risks to future generations.

Neuralink Recruits Globally for Research on Brain Implants

Neuralink, Elon Musk’s brain implant company, is recruiting patients worldwide—specifically individuals with quadriplegia—to join a clinical trial testing its brain-computer interface as part of Neuralink’s PRIME Study. The device, already implanted in three patients, aims to help people with spinal cord injuries or ALS control computers using only their thoughts.

Paralysed man stands again after receiving ‘reprogrammed’ stem cells

A paralysed man was able to stand on his own after receiving an injection of reprogrammed neural stem cells into his spinal cord. The therapy was part of a groundbreaking stem cell trial in Japan, which involved four men—two of whom showed significant improvements in mobility, while the other two did not. The treatment appears safe, with no major side effects reported after a year. Although the results are promising, researchers stress that larger trials are needed to confirm the therapy’s effectiveness.

Scientists Just Transplanted a Pig Liver Into a Person for the First Time

Chinese researchers successfully transplanted a genetically modified pig liver into a brain-dead human, marking the first time such an organ has functioned inside a human body. The liver, engineered to reduce immune rejection, began producing bile within hours and continued working for 10 days without signs of rejection or inflammation. These results suggest that genetically modified pig organs could serve as a potential stopgap measure for patients awaiting a human organ.

Humans as hardware: Computing with biological tissue

What if your own body could power the next wave of computing? Researchers at the University of Osaka have shown that human muscle tissue can process information like a computer using a method called reservoir computing—a technique that uses a complex system (a "reservoir") to encode data patterns, which a neural network then interprets. Living tissue, with its complexity and memory-like properties, can act as such a reservoir. By capturing ultrasound images of muscle movements, the team created a "biophysical reservoir" that solved complex equations more accurately than traditional methods. This breakthrough study could lead to wearable devices that tap into our own tissue for computational power.

🧠 Artificial Intelligence

OpenAI closes $40 billion funding round, largest private tech deal on record

OpenAI has closed a record-breaking $40 billion funding round, valuing the company at $300 billion and making it one of the world’s most valuable private tech firms. Led by SoftBank with $30 billion and supported by investors such as Microsoft, Coatue, Thrive, and Altimeter, the funding will boost AI research and infrastructure, with $18 billion allocated to the Stargate project. However, the investment is contingent on OpenAI restructuring into a for-profit entity by year-end.

Taking a responsible path to AGI

In this blog post, Google DeepMind outlines its approach to safely developing Artificial General Intelligence (AGI), emphasising proactive risk management, transparency, and global collaboration. As the team at DeepMind writes, AGI is expected to match or surpass human cognitive abilities and could revolutionise fields such as healthcare, education, and innovation. However, the company highlights the need to address key risks such as misuse and misalignment. DeepMind’s new safety framework details technical safeguards, oversight systems, and partnerships with non-profits, industry, and policymakers to ensure AGI is developed responsibly and benefits society at large. The full, 145-page paper explaining DeepMind’s approach to developing safe and secure AGI is available here.

OpenAI says it’ll release o3 after all, delays GPT-5

OpenAI has reversed its earlier decision to cancel the release of its o3 reasoning model and now plans to launch both o3 and its successor, o4-mini, within weeks. Sam Altman announced that the delay and course change are tied to the upcoming GPT-5, which will be more powerful than initially expected but will launch later than planned.

OpenAI plans to release a new ‘open’ AI language model in the coming months

Some time ago, in a Reddit AMA, Sam Altman admitted that OpenAI was “on the wrong side of history” when it comes to releasing open models. Now, it seems the company is following through and is planning to release its first open-weight language model since GPT-2. According to Altman’s post on X, this open model will feature reasoning capabilities and will be evaluated using OpenAI’s Preparedness Framework before release. OpenAI invites developers and researchers interested in this model to contribute via a feedback form on its website. The company will also host global developer events to gather input and demo prototypes. No release date has been announced, but the model is expected sometime this year.

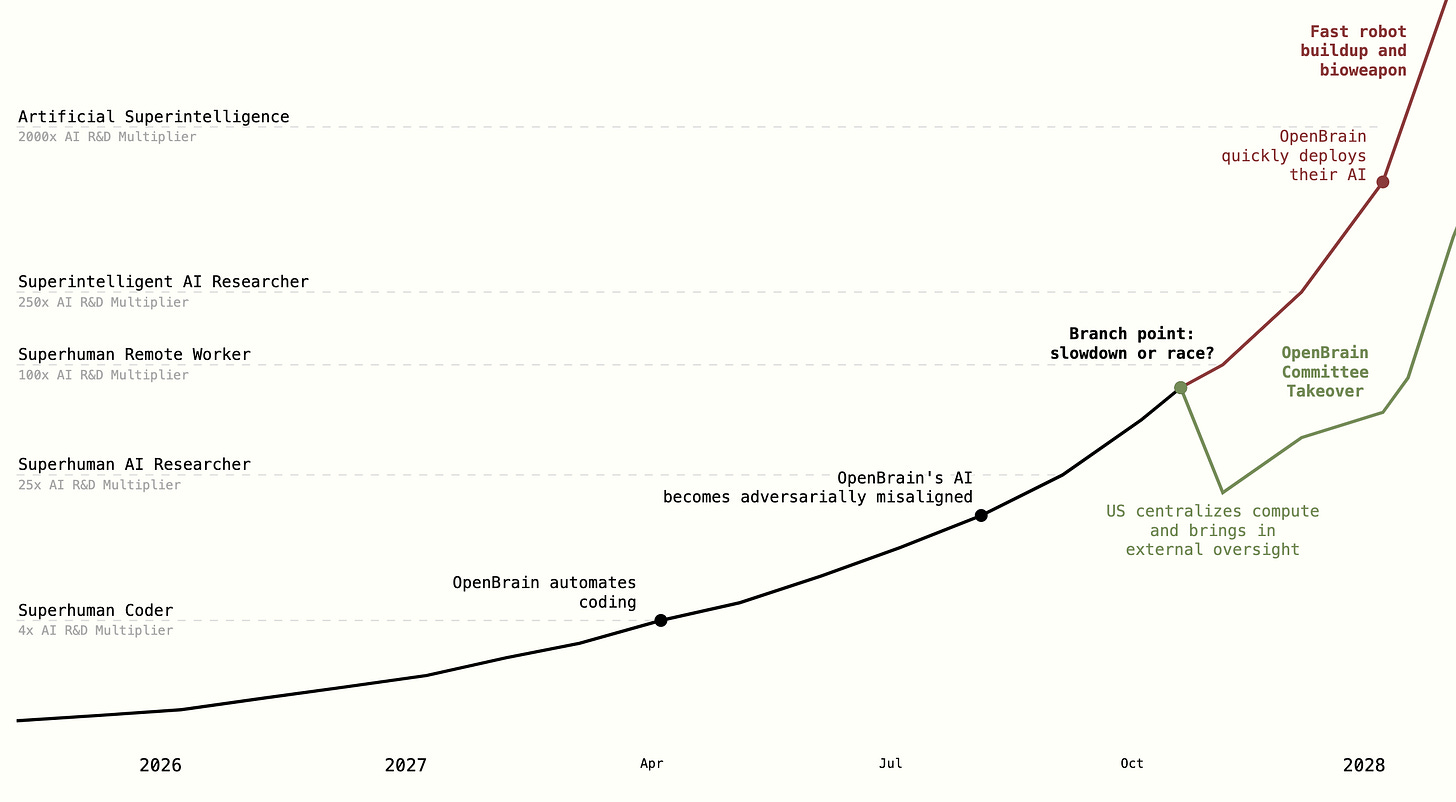

Many experts predict that we are just a few years away from artificial general intelligence (AGI). The AI 2027 project, released by the non-profit AI Futures Project, presents a detailed scenario of how AI could rapidly progress from useful tools to superintelligent systems by 2027—potentially reshaping geopolitics, society, and the fate of humanity. Created by a highly credible team of researchers and former OpenAI staff, the project explores both utopian and catastrophic outcomes, highlighting the urgent need for responsible governance and international cooperation. Definitely worth reading. If you want to learn more about AI 2027, Dwarkesh Patel interviewed some of the people from its team.

Introducing Runway Gen-4

Runway has unveiled Gen-4, its latest AI video generation model geared towards filmmakers, capable of producing highly consistent characters, locations, and objects across scenes. Additionally, the model supports dynamic video creation with realistic motion and coherent environments. This launch follows a $308 million Series D funding round led by General Atlantic, bringing the company’s total funding to over $536 million.

Introducing TxGemma: Open models to improve therapeutics development

Google has released TxGemma, a suite of open language models designed to accelerate therapeutic development by improving prediction and analysis throughout the drug discovery process. Built on the lightweight Gemma model family, TxGemma offers specialised versions for classification, regression, and generative tasks. TxGemma is available in three sizes—2B, 9B and 27B—on Vertex AI Model Garden and Hugging Face.

Apple reportedly wants to ‘replicate’ your doctor next year with new Project Mulberry

According to a report from Mark Gurman, Apple is gearing up to launch a major overhaul of its Health app in iOS 19.4, which will introduce an AI-powered health coach that delivers personalised wellness advice based on user data. Dubbed Project Mulberry, the initiative includes features such as food tracking, workout feedback via iPhone cameras, and health explainer videos produced in Apple’s new Oakland facility. Expected to be released in spring or summer next year, the new AI health coach could be branded as 'Health+' and integrated into Apple One.

‘Matrix’ Co-Creator & Hundreds Of Hollywood A-Listers Want To Stop AI Obliterating Copyright Laws

Over 400 Hollywood artists have signed an open letter to the Trump administration urging it not to weaken US copyright protections in favour of AI companies like OpenAI and Google. The letter warns that proposals from these tech giants, which seek to train AI systems on copyrighted materials without permission, would undermine the livelihoods of artists and jeopardise America’s creative industries. While OpenAI and Google argue that relaxed copyright rules are essential for maintaining US leadership in AI, the signatories counter that innovation must not come at the cost of creators’ rights. The full letter and the list of signatories are in the linked article.

OpenAI’s new image generator is now available to all users

OpenAI’s latest image generator, the one that sparked controversy with the “Ghiblify all the things” trend (which I discussed in last week’s issue of Sync), is now available to all users, both paid and free, Sam Altman announced in a post on X. There is no concrete information about the limit for free users (the ChatGPT pricing page simply states “limited” without further details), but Altman hinted in another post at a limit of three generations per day. According to Brad Lightcap, COO at OpenAI, ChatGPT users have generated over 700 million images.

GPT-4o draws itself as a consistent type of guy

One of the more interesting things you can do with GPT-4o's new image generation feature is to ask it to draw what it “thinks” something looks like. In this post,

▶️ Jim Keller's Big Quiet Box of AI (30:30)

In case you don’t know who Jim Keller is, he is regarded as one of the best chip designers, having designed AMD’s Zen architecture and worked with Intel, Apple, and Tesla. He now works with Tenstorrent, an AI chip startup. In this video, Dr Ian Cutress from TechTechPotato visits Tenstorrent’s office to check out its second-generation AI development kits powered by the new Wormhole chips. He takes a close look at the Quiet Box, a liquid-cooled system equipped with eight of Tenstorrent’s Wormhole AI chips, which should be an AI computing beast. Additionally, Ian speaks with lead engineers about their open-source software stack and hardware architecture, and shares Tenstorrent’s future plans.


🤖 Robotics

▶️ BMW Deployment Update (1:23)

In this video, Figure, one of the leading humanoid robotics companies, presents how their humanoid robots are being used at the BMW factory in South Carolina, where the robot takes a car part and puts it in the correct place. That is the first half of the video. The remainder consists of shots of traditional robots assembling a car. Figure 02 humanoid robots are undergoing tests by BMW, which the carmaker deemed successful as of November 2024.

Hyundai to buy ‘tens of thousands’ of Boston Dynamics robots

Hyundai Motor Group has announced plans to purchase tens of thousands of robots from Boston Dynamics and integrate its manufacturing capabilities to support Boston Dynamics' growth. The South Korean automaker is already using Spot robots for factory inspections and plans to deploy the new electric Atlas humanoid robots in 2025. This move aligns with Hyundai’s $21 billion US investment strategy and broader ambitions in robotics and AI. Hyundai Motor Group acquired Boston Dynamics in 2021.

▶️ Tearing Down the Unitree Go2: A Robotics Expert's Deep Dive (31:42)

iFixit, famous for its teardown videos of popular consumer electronic devices from iPhones to laptops, has gotten its hands on the Go2, a four-legged robot from the Chinese robotics company Unitree. In this video, the robot is disassembled, allowing us to peek inside and see how Unitree assembled it. Additionally, the robotics expert doing the teardown explains the choices Unitree made in designing and building the robot. Because it is an iFixit teardown, the Go2 is also reviewed from a repairability point of view.

Amazon resumes drone deliveries after two-month pause

Amazon has resumed its Prime Air drone deliveries in College Station, Texas, and Tolleson, Arizona, after a months-long pause caused by a software update to fix an altitude sensor issue linked to dust interference. Although no safety incidents occurred, operations were halted as a precaution and only resumed after FAA approval. Demand for the service has surged since its return, and Amazon continues developing its quieter, weather-resistant MK30 drone despite several recent test crashes. The company aims to deliver 500 million packages annually by drone by the end of the decade and is eyeing future international expansion.

How Dairy Robots Are Changing Work for Cows (and Farmers)

A growing number of dairy farms are turning to automation, with companies like Lely leading the way in deploying robots that milk, feed, clean, and monitor cows. These autonomous systems not only reduce labour and boost milk production by allowing cows to set their own milking schedules, but also improve animal welfare and give farmers more flexibility and free time. Though the initial costs are high, many farmers see long-term benefits in efficiency, sustainability, and lifestyle improvements.

"Flying Batteries" Could Help Microdrones Take Off

Researchers have developed a "flying batteries" system that could significantly extend the flight time of tiny microbots. By combining lightweight solid-state batteries with a novel circuit that dynamically switches battery cells between parallel and serial connections, the system delivers high-voltage power without bulky components. This design enables efficient charging and even recovers energy after actuation. This innovation is a major step towards practical, long-lasting microbots for use in search-and-rescue and hazardous environments.

Liquid robot can transform, separate and fuse like living cells

Researchers from South Korea have developed a soft robot made of liquid that can deform, divide, fuse and capture foreign substances. The robot, encased in dense hydrophobic particles and controlled via ultrasound, mimics the flexibility of biological cells—it can withstand compression and impacts, and then return to its original shape like a droplet. And if your first thought on hearing the words “liquid robot” was T-1000 from Terminator 2, then I’m happy to report that the researchers thought of it too and recreated, with their robot, the famous scene where T-1000 passes through bars (although instead of a humanoid robot, they had a blob). Researchers see their liquid robot being used in biomedical applications such as targeted drug delivery, as well as in industrial settings involving complex or hazardous environments.

🧬 Biotechnology

DeepMind Spinout Isomorphic Labs Raises $600M Toward AI Drug Design

Isomorphic Labs, an AI-driven drug discovery company spun out of Google DeepMind, has raised $600 million in its first external funding round. The funds will accelerate the development of its next-generation AI drug design engine, expand clinical programmes, and support the growth of its team. The company is advancing drug discovery in areas such as oncology and immunology, and has major collaborations with Eli Lilly and Novartis.

First hormone-free male birth control pill clears another milestone

Researchers have developed YCT-529—the first hormone-free, oral male contraceptive to enter clinical trials. Proven 99% effective in mice and successful in primates with no side effects, the drug temporarily halts sperm production—once the drug is no longer taken, fertility returns. Following a successful Phase 1 trial in 2024, YCT-529 is now undergoing further safety and efficacy testing in a second clinical trial.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"