o3—the new state-of-the-art reasoning model - Sync #498

Plus: Nvidia's new tiny AI supercomputer; Veo 2 and Imagen 3; Google and Microsoft release reasoning models; Waymo to begin testing in Tokyo; Apptronik partners with DeepMind; and more!

Hello and welcome to Sync #498!

This week, OpenAI concluded its 12 Days of OpenAI with the release of o3, OpenAI’s new reasoning model, which set new records on some of the toughest AI benchmarks and redefined what it means to be a state-of-the-art AI model.

Elsewhere in AI, Microsoft and Google have introduced their own reasoning models—Phi-4 and Gemini 2.0 Flash Thinking Experimental, respectively. Google also unveiled the new version of its video generator, Veo 2, and its image generator, Imagen 3. Nvidia joined the release frenzy with the Jetson Orin Nano Super, a compact AI computer designed for edge and robotics applications.

Speaking of robotics, Apptronik announced a partnership with Google DeepMind, while Waymo is heading to Tokyo. We also have a robot dragonfly built by the CIA in the 1970s and a robot that fooled rats into thinking it is one of them.

In other news, a third person has received a gene-edited pig kidney, and we’ll learn how Strandbeest are built and how they evolve into something more than kinematic sculptures.

Enjoy!

o3—the new state-of-the-art reasoning model

12 Days of OpenAI started strong with the release of the full o1 model, OpenAI’s reasoning model. And OpenAI’s Shipmas concluded even stronger with o3—the new state-of-the-art reasoning model.

When the full o1 model was released on the first day of 12 Days of OpenAI, I think some people were expecting more than what had already been demonstrated by o1-preview. While the full o1 might have been disappointing, o3, the newest model in OpenAI’s line of reasoning models, not only delivered but also went the extra mile.

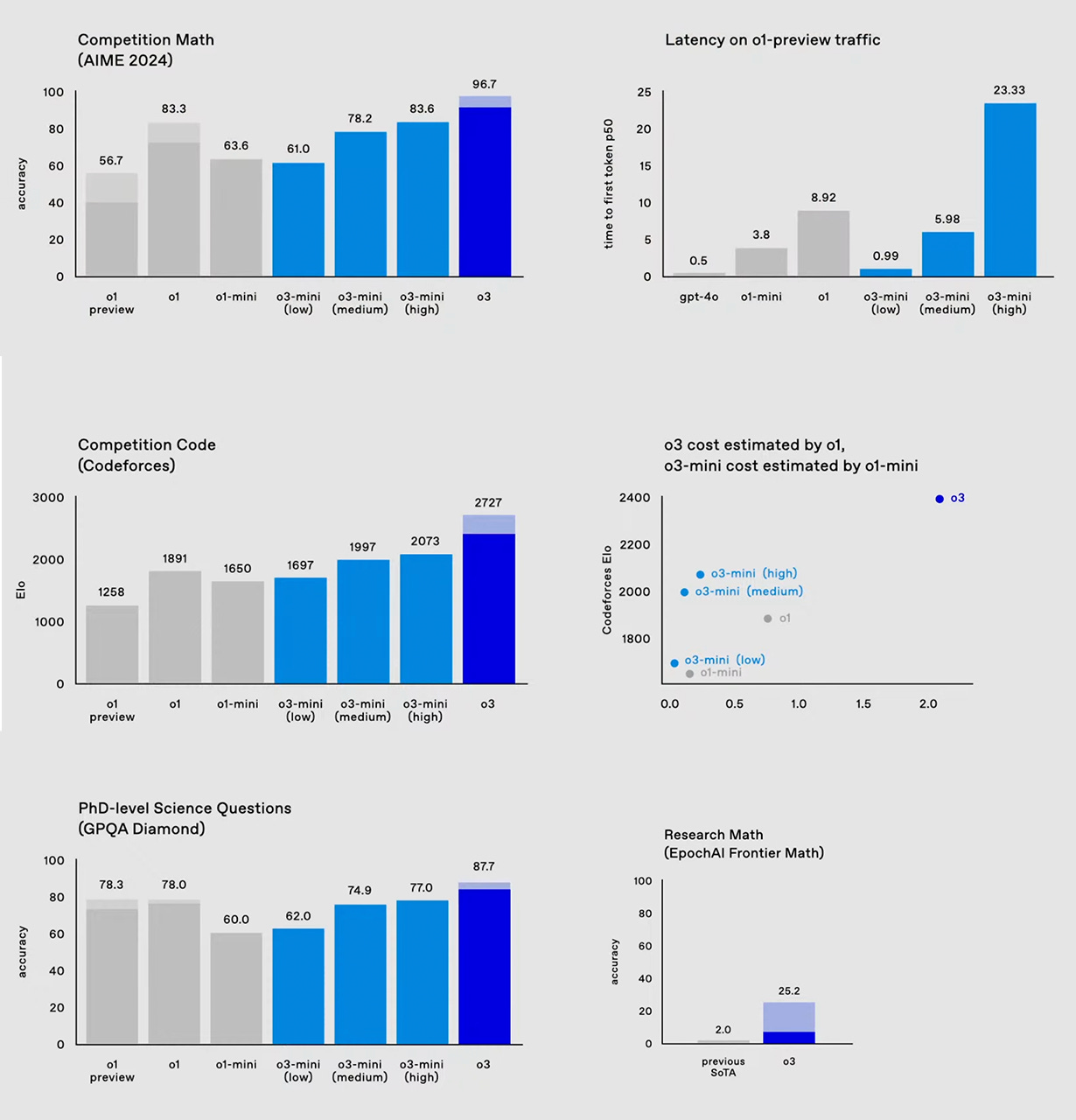

Without further ado, below are the benchmark results for o3, provided by OpenAI.

These results are truly impressive, as they match and sometimes exceed those of top humans. Consider Codeforces, where o3 achieved a rating of 2727. That rating qualifies o3 for the rank of International Grandmaster, a title reserved for the best competitive programmers, those in the top 0.05%.
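Codeforces titles are assigned by rating band. The minimal Python sketch below maps a rating to its title; the thresholds are taken from Codeforces' public rating scheme (an assumption of this sketch, not something OpenAI reported, and Codeforces may adjust them), and it shows where a rating of 2727 lands.

```python
# Lower bound of each Codeforces rating band and the title it carries,
# ordered from highest to lowest. Thresholds assumed from Codeforces' public scheme.
CODEFORCES_TITLES = [
    (3000, "Legendary Grandmaster"),
    (2600, "International Grandmaster"),
    (2400, "Grandmaster"),
    (2300, "International Master"),
    (2100, "Master"),
    (1900, "Candidate Master"),
    (1600, "Expert"),
    (1400, "Specialist"),
    (1200, "Pupil"),
]


def codeforces_title(rating: int) -> str:
    """Return the title for a rating by scanning the bands from the top down."""
    for lower_bound, title in CODEFORCES_TITLES:
        if rating >= lower_bound:
            return title
    return "Newbie"  # everything below 1200


print(codeforces_title(2727))  # International Grandmaster
```

Scanning from the highest band down means the first matching lower bound is the correct title, so no upper bounds need to be stored.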

The results for the EpochAI Frontier Math benchmark are equally impressive. Each test in this benchmark is designed to require hours of work, even from expert mathematicians. Models like GPT-4 and Gemini could not score more than 2%. o3 scored 25.2%. That’s a massive leap in performance and reasoning capabilities.

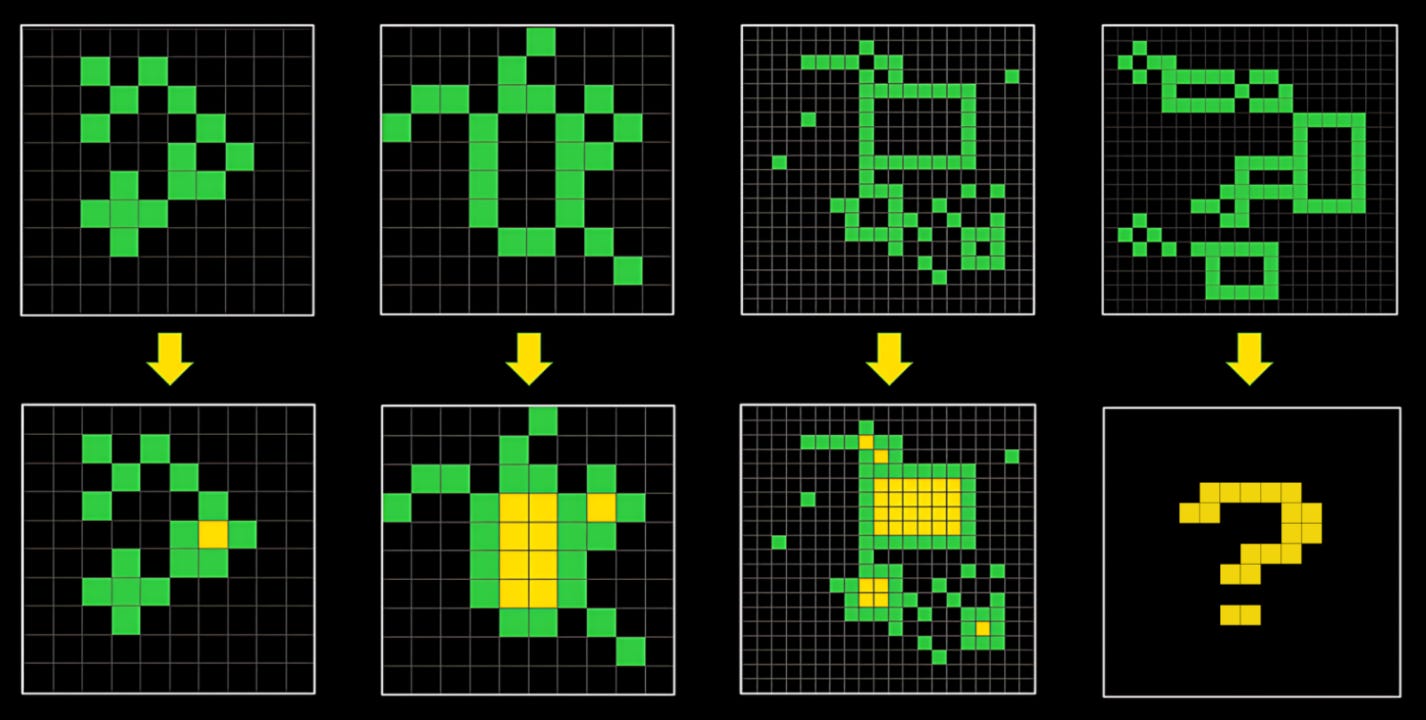

There was one more benchmark that o3 crushed. ARC-AGI is regarded as one of the toughest benchmarks for assessing the intelligence of AI models. Below is an example of an ARC-AGI test. Each test presents examples of a pattern and then requires solving a challenge based on that pattern.

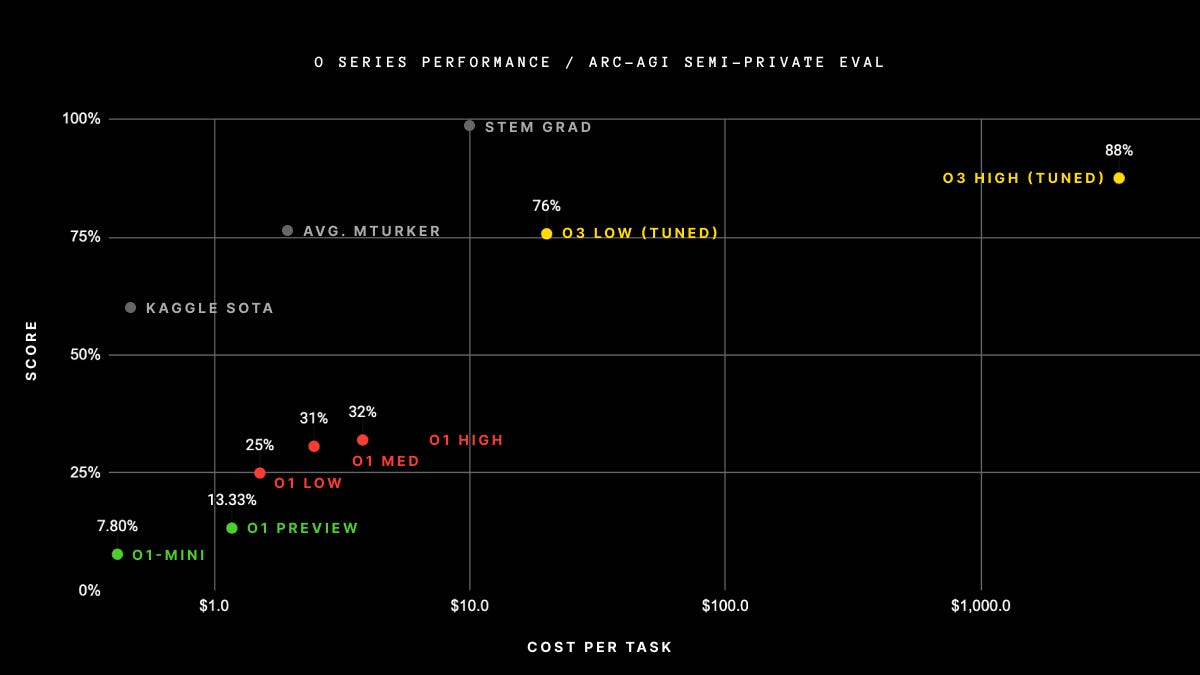
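To make the format concrete, here is a toy task laid out the way the public ARC dataset stores tasks: grids of integers from 0 to 9 (each number standing for a colour), split into "train" demonstration pairs and a "test" input. The task and the brute-force solver are hypothetical illustrations. The solver only tries a handful of hand-picked grid transformations and keeps the first one consistent with every demonstration, which is nothing like how o3 tackles the benchmark.

```python
from typing import Callable, Dict, List, Optional

Grid = List[List[int]]

# A hypothetical toy task: the hidden rule is "mirror the grid left to right".
task = {
    "train": [
        {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
        {"input": [[3, 4], [0, 5]], "output": [[4, 3], [5, 0]]},
    ],
    "test": [{"input": [[7, 0, 8], [0, 9, 0]]}],
}

# Candidate transformations the toy solver is allowed to try, in order.
CANDIDATES: Dict[str, Callable[[Grid], Grid]] = {
    "identity": lambda g: [row[:] for row in g],
    "flip_vertical": lambda g: [row[:] for row in reversed(g)],
    "mirror_left_right": lambda g: [list(reversed(row)) for row in g],
    "transpose": lambda g: [list(col) for col in zip(*g)],
}


def solve(task: dict) -> Optional[Grid]:
    """Apply the first candidate that reproduces every train output to the test input."""
    for transform in CANDIDATES.values():
        if all(transform(pair["input"]) == pair["output"] for pair in task["train"]):
            return transform(task["test"][0]["input"])
    return None  # no candidate explains the demonstrations


print(solve(task))  # [[8, 0, 7], [0, 9, 0]]
```

Real ARC-AGI tasks are hard precisely because the rule is new each time and cannot be picked from a short, fixed list like this one.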

Most humans can easily solve the ARC-AGI tests, but AI models have struggled with them so far. GPT-4o scored only 5%. o1, when run on a high-compute setting, scored 32%. The best open-source project achieved a score of 54.5%.

So, how did o3 score on the ARC-AGI benchmark?

o3 scored 76%, matching the average human score and significantly outperforming both o1 and leading open-source projects. However, that score was achieved on a low-compute setting. On a high-compute setting (approximately 172 times more compute than the low configuration), o3 scored 88%. In the ARC-AGI benchmark, o3 stands in a class of its own, with no competitors coming even remotely close.

OpenAI hasn’t revealed when o3 will be publicly available, but it seems we can expect the release in Q1 of 2025. However, if you are an AI safety researcher, you have an opportunity to help evaluate and identify potential safety and security implications of o3 ahead of its public release.

o3 marks a new chapter in AI research. We now have an AI model capable of matching and sometimes exceeding human experts in tasks requiring high levels of reasoning, such as solving challenging algorithmic or mathematical problems. Moreover, o3 and similar models will continue to improve, bounded only by how much computing power they can use. The gap between o3’s low-compute and high-compute results on ARC-AGI highlights just how much better these models perform when given more time to "think."
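The ARC-AGI figures quoted earlier (76% at low compute, 88% with roughly 172 times the compute) allow a back-of-the-envelope estimate of how much each doubling of compute bought. This minimal sketch assumes, purely for illustration, that the gain is spread evenly across doublings; OpenAI has not made that claim.

```python
import math

LOW_SCORE, HIGH_SCORE = 76.0, 88.0  # ARC-AGI scores (%) quoted in the text
COMPUTE_RATIO = 172.0  # high-compute / low-compute, approximate

# How many times compute was doubled to go from the low to the high setting.
doublings = math.log2(COMPUTE_RATIO)
# Percentage points gained per doubling, assuming an even spread (illustrative only).
points_per_doubling = (HIGH_SCORE - LOW_SCORE) / doublings

print(f"{doublings:.2f} doublings, {points_per_doubling:.2f} points per doubling")
# 7.43 doublings, 1.62 points per doubling
```

With only two data points this says nothing about the true shape of the curve, but it shows that the extra 12 points came from roughly seven to eight doublings of compute.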

Sure, that high-compute run used about 172 times more computing power than the low-compute one, which translated to about $350,000 for that single run. However, it’s worth noting that more and larger AI-focused data centres will soon come online, and more efficient chips offering greater computing power will become available. What OpenAI paid for that high-compute run might cost just a fraction of that $350,000 in a year or two.
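The cost figures support two quick calculations: dividing $350,000 by 172 gives the implied cost of the low-compute run, and applying a yearly price decline shows what "a fraction" could look like. The sketch assumes cost scales linearly with compute, and the 50% annual decline is a hypothetical parameter chosen for illustration, not a forecast from OpenAI or from this newsletter.

```python
HIGH_COMPUTE_COST_USD = 350_000  # approximate cost of the high-compute run, as quoted
COMPUTE_RATIO = 172  # high-compute run used ~172x the compute of the low-compute run

# Implied cost of the low-compute run, assuming cost scales linearly with compute.
low_compute_cost = HIGH_COMPUTE_COST_USD / COMPUTE_RATIO


def projected_cost(cost_today: float, annual_decline: float, years: int) -> float:
    """Cost after `years` if the price of compute falls by `annual_decline` each year."""
    return cost_today * (1 - annual_decline) ** years


ASSUMED_ANNUAL_DECLINE = 0.5  # hypothetical: compute gets 50% cheaper every year

print(f"Implied low-compute run: ${low_compute_cost:,.0f}")  # $2,035
for years in (1, 2):
    cost = projected_cost(HIGH_COMPUTE_COST_USD, ASSUMED_ANNUAL_DECLINE, years)
    print(f"High-compute run after {years} year(s): ${cost:,.0f}")
```

Changing `ASSUMED_ANNUAL_DECLINE` is the easiest way to explore more or less optimistic scenarios.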

With o3, we might be at the same point as when GPT-4 was released two years ago: a new model redefining the state of the art and unlocking new possibilities. Now we have to wait until o3 is out to see what we can do with it and how OpenAI’s competitors will respond.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is a generous support towards the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

A woman in the US is the third person to receive a gene-edited pig kidney

A 53-year-old woman from the US has become the third person in the world to receive a kidney transplant from a gene-edited pig. The donor pig carried 10 gene edits designed to prevent organ rejection, excessive growth, and inflammation. It was produced by placing the edited pig cells into a pig egg cell, which was then implanted in a sow to produce a gene-edited piglet. The woman was discharged from the hospital 11 days after the surgery and will remain in New York for three months for evaluations.

First baby conceived via breakthrough fertility tech born

The first human conceived through an innovative stem cell-based fertility technique has been born. Developed by Gameto, the method allows eggs to mature outside the body by co-culturing ovarian support cells (OSCs) derived from induced pluripotent stem cells (iPSCs) with immature eggs. This approach reduces hormone injections by 80%, shortens treatment cycles to just three days, and minimises risks such as ovarian hyperstimulation syndrome, offering a safer, faster, and less invasive alternative to traditional IVF. The technique has regulatory approval in Australia, Japan, Argentina, Paraguay, Mexico, and Peru, with plans for US trials underway.

🧠 Artificial Intelligence

State-of-the-art video and image generation with Veo 2 and Imagen 3

Shortly after OpenAI released Sora, Google responded by launching a new version of its video generator, Veo 2, alongside the updated version of Imagen 3, Google’s image generator. Google claims that Veo 2 set a new state-of-the-art level of performance, surpassing all competitors, including OpenAI’s Sora and Meta Movie Gen, and represents a significant step forward in high-quality video generation. Both models are now available in VideoFX, ImageFX, and a new Google Labs experiment named Whisk.

NVIDIA Unveils Its Most Affordable Generative AI Supercomputer

Nvidia has launched the Jetson Orin Nano Super, which it calls the most affordable generative AI supercomputer, priced at only $249. This compact computer, small enough to fit in the palm of your hand, delivers 67 INT8 TOPS (tera operations per second) of AI performance and is capable of running many advanced AI models, including large language models. While the Jetson Orin Nano Super is primarily designed for robotics, it is likely to also find applications in edge computing and among makers and hobbyists. I want one too!
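For a sense of scale, 67 TOPS means 67 × 10^12 INT8 operations per second. A common rule of thumb is that generating one token with a dense language model costs about 2 operations per parameter. Under that assumption, the sketch below computes a compute-only ceiling on tokens per second, plus TOPS per dollar. On a device like this, real throughput is usually limited by memory bandwidth instead, so treat the numbers as an upper bound, not a benchmark. The model sizes are hypothetical examples.

```python
JETSON_TOPS = 67  # INT8 tera-operations per second, as quoted by Nvidia
PRICE_USD = 249

ops_per_second = JETSON_TOPS * 1e12


def compute_bound_tokens_per_second(params: float, ops_per_param: float = 2.0) -> float:
    """Upper bound on decoding speed if compute were the only limit.

    Assumes ~2 operations per parameter per generated token (rule of thumb).
    """
    return ops_per_second / (params * ops_per_param)


print(f"{JETSON_TOPS / PRICE_USD:.3f} TOPS per dollar")
for params in (1e9, 8e9):  # hypothetical 1B- and 8B-parameter models
    ceiling = compute_bound_tokens_per_second(params)
    print(f"{params / 1e9:.0f}B params: at most ~{ceiling:,.0f} tokens/s (compute-only)")
```

The gap between these ceilings and real-world speeds is a good reminder that TOPS alone does not determine how fast a model runs.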

Google releases its own ‘reasoning’ AI model

Google has released a new experimental AI model, Gemini 2.0 Flash Thinking Experimental, which, as the name suggests, is capable of “reasoning,” meaning it analyses its answers before presenting the final response to the user. The model is described as “best for multimodal understanding, reasoning, and coding,” with applications in programming, mathematics, and physics. Early tests show promising results in tackling complex problems, though there is still room for improvement. Gemini 2.0 Flash Thinking Experimental is available on Google’s AI Studio platform.

Introducing Phi-4: Microsoft’s Newest Small Language Model Specializing in Complex Reasoning

Microsoft joins the reasoning models party with Phi-4, a 14-billion-parameter small language model (SLM) focused on complex reasoning, especially in math, alongside conventional language tasks. According to benchmarks provided by Microsoft, Phi-4 matches the performance of Llama 3.3 70B and outperforms Google’s Gemini Pro 1.5 on mathematics competition problems. Phi-4 is available on Azure AI Foundry.
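Parameter counts translate directly into memory. This minimal sketch counts only the weights (activations, the KV cache and runtime overhead are ignored) at a few common storage precisions, and compares Phi-4 with the 70-billion-parameter Llama 3.3 it is benchmarked against.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"16-bit (FP16/BF16)": 2.0, "8-bit": 1.0, "4-bit": 0.5}

MODELS = {"Phi-4": 14e9, "Llama 3.3 70B": 70e9}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return params * bytes_per_param / 1e9


for model, params in MODELS.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, b):.0f} GB"
        for precision, b in BYTES_PER_PARAM.items()
    )
    print(f"{model}: {sizes}")
```

At 16-bit precision Phi-4's weights come to about 28 GB, enough to fit on a single 80 GB GPU, while the 70B model needs about 140 GB. That difference is a large part of the appeal of small language models.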

GitHub launches a free version of its Copilot

GitHub Copilot, the popular AI pair programming tool, now offers a free version for developers. Previously, Copilot required a subscription starting at $10/month, with free access limited to students, teachers, and open-source maintainers. The free version provides up to 2,000 code completions per month, 50 chat messages per month, and allows users to choose between Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4o models. This free version will be bundled by default with Microsoft’s VS Code editor. The move to make Copilot free aligns with competitors like Tabnine, Qodo, and AWS, which already offer free plans for their AI coding assistants.

The era of open voice assistants has arrived

Home Assistant, an open-source home automation platform, has released the Home Assistant Voice Preview Edition, an open-source, privacy-focused alternative to Amazon Echo for $59. The device allows for extensive customization, from firmware to hardware modifications, and aims to establish a new standard for open, private voice assistant technology, prioritizing user choice and privacy over monetization.

▶️ Safety Alignment Should be Made More Than Just a Few Tokens Deep (48:52)

In this video, Yannic Kilcher analyses a paper titled Safety Alignment Should be Made More Than Just a Few Tokens Deep, which, as the title suggests, argues that safety alignment mostly changes how likely the first few tokens of a response are to be harmful, leaving the rest of the model's behaviour largely unchanged and vulnerable to attacks. The paper proposes some ways to improve models’ safety, while Kilcher argues that baking safety into next-token prediction might not be a robust long-term solution.
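One way to check a claim like "alignment is only a few tokens deep" is to compare an aligned model with its base model position by position, measuring the KL divergence between their next-token distributions. The sketch below shows that comparison on made-up numbers: the three-token vocabulary and every probability are hypothetical toy values, not data from the paper.

```python
import math
from typing import List


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token distributions at each position of a response,
# over a toy vocabulary: ["Sure", "Sorry", "here"].
aligned = [
    [0.05, 0.90, 0.05],  # position 0: the aligned model strongly prefers refusing
    [0.30, 0.10, 0.60],
    [0.20, 0.10, 0.70],
]
base = [
    [0.80, 0.10, 0.10],  # position 0: the base model would happily comply
    [0.30, 0.10, 0.60],
    [0.25, 0.10, 0.65],
]

per_position_kl = [kl_divergence(a, b) for a, b in zip(aligned, base)]
for position, kl in enumerate(per_position_kl):
    print(f"position {position}: KL = {kl:.3f}")
```

A "shallow" alignment would show this shape: a large divergence at the first positions and almost none afterwards. That is why an attack that forces the model past its opening tokens can sidestep it.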

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Waymo to begin testing in Tokyo, its first international destination

Waymo, Alphabet's autonomous vehicle division, has announced plans to begin testing autonomous vehicles in Tokyo in early 2025, making Tokyo the first city outside the US where Waymo operates. The initial phase will involve collaboration with Nihon Kotsu, Japan's largest taxi operator, and the GO taxi app. Drivers will manually operate Waymo's Jaguar I-PACE vehicles to map key areas of Tokyo, including Minato, Shinjuku, and Shibuya. Data collected from the manually operated vehicles will train Waymo’s AI systems to adapt to Tokyo’s unique driving conditions. This partnership is expected to last several quarters, with Waymo planning a long-term presence in Japan.

Boston Dynamics lays off 45 employees, 5 percent of its workforce

Boston Dynamics laid off 45 employees (5% of its workforce), affecting nearly every department in the company. According to the Boston Globe, the layoffs were attributed to the need to streamline operations and support sustainable growth amid higher cash burn rates. As CEO Robert Playter said, the layoffs were necessary for the company’s long-term profitability goals.

Apptronik partners with Google DeepMind to advance humanoid robots with AI

Apptronik has announced a partnership with Google DeepMind. “By combining Apptronik’s cutting-edge robotics platform with the Google DeepMind robotics team’s unparalleled AI expertise, we’re creating intelligent, versatile, and safe robots that will transform industries and improve lives,” said Jeff Cardenas, co-founder and CEO of Apptronik. This collaboration mirrors OpenAI’s partnership with Figure, another humanoid robotics company, which provides Figure with access to ChatGPT and OpenAI’s world-class AI models.

Why It’s Time to Get Optimistic About Self-Driving Cars

In this article, Azeem Azhar from Exponential View shares how his perspective on autonomous taxi services has shifted from scepticism in 2017 to recognising significant progress and the potential for widespread adoption. He highlights examples from China, where government-backed initiatives are enabling robotaxi tests in 16 cities. He also references Waymo, which now provides over 312,000 rides per month in California, including approximately 8,000 daily rides in San Francisco, approaching the 6% market share tipping point for rapid adoption. In both cases, robotaxis outperform human-driven vehicles in daily ride completions, improving operational efficiency and reducing cost per ride.
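The two Waymo figures can be cross-checked with simple arithmetic. This minimal sketch assumes a 30-day month, a simplification of the sketch, not of the article.

```python
MONTHLY_RIDES_CALIFORNIA = 312_000  # as quoted
DAILY_RIDES_SAN_FRANCISCO = 8_000  # as quoted
DAYS_PER_MONTH = 30  # simplifying assumption

daily_rides_california = MONTHLY_RIDES_CALIFORNIA / DAYS_PER_MONTH
sf_share = DAILY_RIDES_SAN_FRANCISCO / daily_rides_california

print(f"~{daily_rides_california:,.0f} rides per day across California")  # ~10,400
print(f"San Francisco share: ~{sf_share:.0%}")  # ~77%
```

On these numbers, San Francisco accounts for roughly three quarters of Waymo's rides in California.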

In the 1970s, the CIA Created a Robot Dragonfly Spy. Now We Know How It Works.

Recently declassified top-secret documents have revealed that in the 1970s, the CIA developed the "insectothopter," a robotic dragonfly designed for covert surveillance during the Cold War. Built at a time when microprocessors were still a new thing, this robotic dragonfly weighed less than a gram and was equipped to carry retroreflector beads for laser-based eavesdropping, a technique that extracts sound from light vibrations. Although the robot was a marvel of engineering, it had its limitations—the insectothopter could only fly for 60 seconds and was highly susceptible to crosswinds. Real-world testing also uncovered difficulties in maintaining laser alignment during flight, making it challenging to operate outside laboratory conditions. While the insectothopter never saw active use, it demonstrated the potential for miniature drones in espionage and inspired the development of more capable robotic systems.
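Laser eavesdropping works because sound makes a reflecting surface vibrate, and those vibrations modulate the light that bounces back to a receiver. The toy simulation below illustrates only that principle. It assumes the reflected intensity varies linearly with a 440 Hz vibration and adds sensor noise, then removes the constant part of the signal and recovers the tone's frequency with an FFT. The sampling rate, modulation depth and noise level are arbitrary illustrative values, and nothing here reflects how the CIA's system actually worked.

```python
import numpy as np

SAMPLE_RATE = 8_000  # samples per second (arbitrary)
DURATION = 1.0  # seconds
TONE_HZ = 440.0  # hypothetical sound vibrating the reflecting surface

rng = np.random.default_rng(seed=0)
t = np.arange(int(SAMPLE_RATE * DURATION)) / SAMPLE_RATE

# Reflected intensity: a steady level, slightly modulated by the vibration, plus sensor noise.
intensity = (
    1.0
    + 0.01 * np.sin(2 * np.pi * TONE_HZ * t)
    + 0.002 * rng.standard_normal(t.size)
)

# Remove the constant (DC) part, leaving only the fluctuations caused by sound.
audio = intensity - intensity.mean()

# Find the dominant frequency in the recovered signal.
spectrum = np.abs(np.fft.rfft(audio))
freqs = np.fft.rfftfreq(audio.size, d=1 / SAMPLE_RATE)
recovered_hz = freqs[np.argmax(spectrum)]

print(f"Recovered tone: {recovered_hz:.0f} Hz")
```

In practice, keeping the beam aimed at the target is the hard part, which matches the laser-alignment problems the insectothopter ran into during real-world tests.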

AI infiltrates the rat world: New robot can interact socially with real lab rats

An international team of roboticists has developed a robotic rat designed to interact with real rats. Although the robot has a cart-like body with wheels and only vaguely resembles a rat, it successfully fooled real rats into interacting with it as if it were another rat. This was achieved with an AI trained on videos of real rats to replicate the aggressive and playful behaviours observed during social interactions. The AI was then tuned with positive reinforcement during interactions with real rats, allowing the robot to keep learning and adapting over time. The robot could serve as a research tool for studying social interactions in real rats.

🧬 Biotechnology

The '4th Wave' of AI Drug Discovery is Here, According to This Report

In this article,

Gold-based drug slows cancer tumor growth by 82%, outperforms chemotherapy

Researchers have developed a new gold-based drug that slowed tumour growth by 82% in animal studies, significantly outperforming the widely used chemotherapy drug cisplatin, which achieved only a 29% reduction. The drug is designed to selectively target thioredoxin reductase, an enzyme abundant in cancer cells, inhibiting cell multiplication and drug resistance. It also prevents the formation of new blood vessels (anti-angiogenesis), a process critical for tumour growth. These findings pave the way for safer, more effective alternatives to conventional chemotherapy, addressing issues such as drug resistance and systemic toxicity.

💡Tangents

▶️ 12,419 Days Of Strandbeest Evolution (21:38)

I’m fascinated by Strandbeest, the walking kinematic sculptures created by Theo Jansen. There is something beautiful about those skeleton-like machines that walk using only wind as their source of power. In this video, Veritasium shares the story of Strandbeest, why Jansen built them, the engineering challenges he faced, and how Strandbeest evolve to become more like living organisms rather than sculptures.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
