Google's Agentic Era - Sync #497

Plus: Sora is out; OpenAI vs Musk drama continues; GM closes Cruise; Amazon opens AGI lab; Devin is out; a humanoid robot with artificial muscles; NASA's new Martian helicopter; and more!

Hello and welcome to Sync #497!

It has been an interesting week in AI, with OpenAI finally releasing Sora and Google revealing Gemini 2.0 and its vision for the agentic era, which we will examine more closely in this week’s issue of Sync.

Elsewhere in AI, the feud between OpenAI and Elon Musk continues. This time, OpenAI responded with its side of the story, revealing that Musk supported OpenAI becoming a for-profit company. In other news, Devin is out, Amazon has opened an AGI lab, and the top AI companies all received poor grades on safety.

In robotics, GM suddenly pulled the plug on Cruise and will absorb the robotaxi company. Meanwhile, NASA plans a bigger Martian helicopter as a follow-up to Ingenuity, the first flying machine on another planet. We will also see a drone that leaps into the air like a bird and a humanoid robot powered by pneumatic artificial muscles.

Additionally, we will meet a neurotech company that implants brain-computer interfaces without brain surgery and learn why the idea of designer babies and selecting “genes for X” is not likely to happen anytime soon.

Enjoy!

Google's Agentic Era

A year ago, Google unveiled Gemini, its family of natively multimodal large language models in three sizes: Nano, Pro, and Ultra. A couple of months later, in February 2024, Google released Gemini 1.5, which introduced improvements in performance and a larger context window, enabling these models to process more input data than before. In May 2024, the Gemini family expanded with Gemini 1.5 Flash—a lightweight model designed for greater speed and efficiency.

Last week, Google launched the next generation of Gemini models—Gemini 2.0—and outlined its vision for the agentic era.

Google introduces Gemini 2.0 Flash

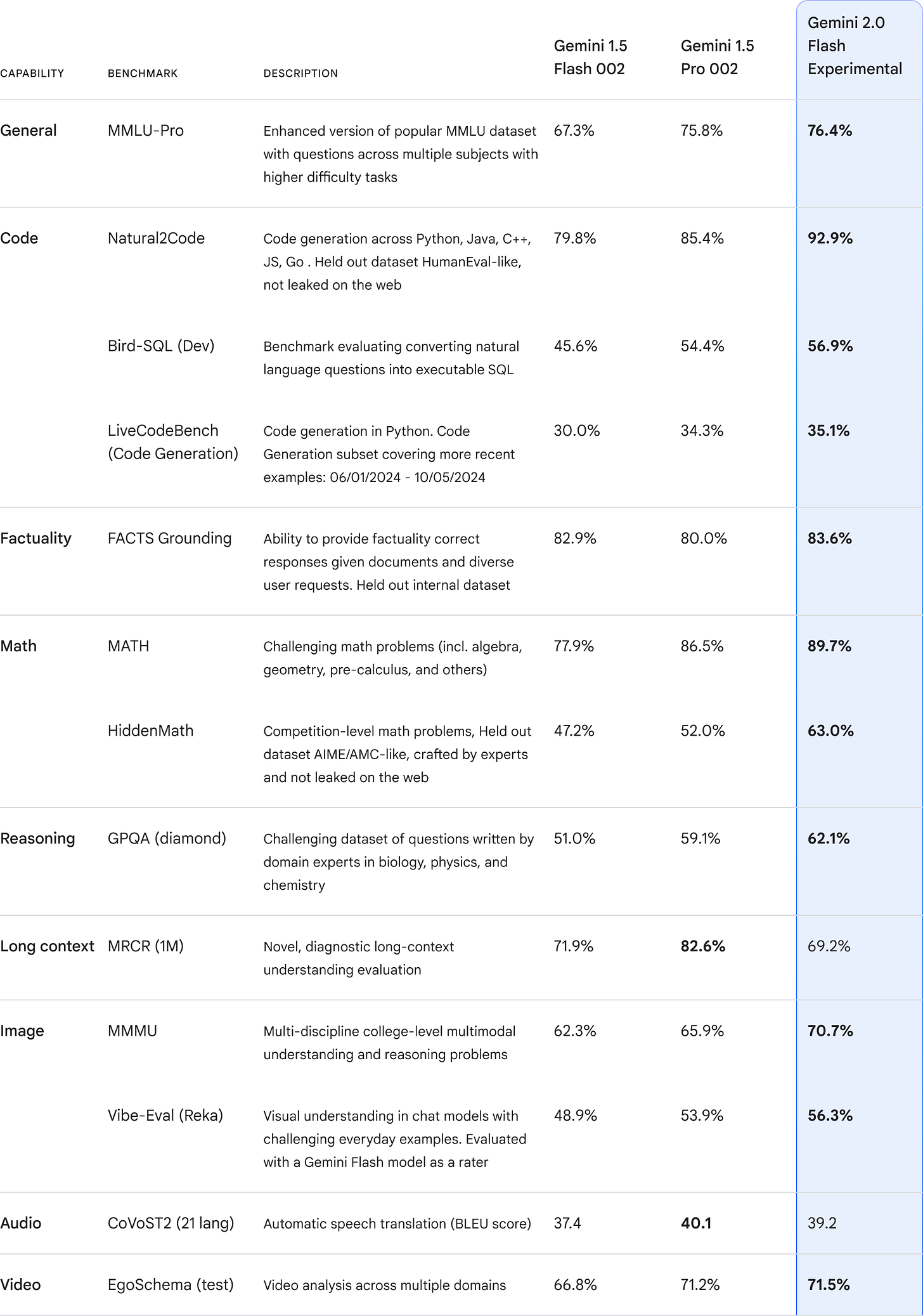

The first model revealed from the Gemini 2.0 family is Gemini 2.0 Flash, the family’s lightweight model. According to benchmarks provided by Google, it already outperforms Gemini 1.5 Pro while generating responses twice as quickly.

As always, take first-party benchmarks with a grain of salt, as the companies releasing them aim to present their latest models in the best possible light. In the case of Gemini 2.0 Flash, the numbers look promising, but where are the comparisons to other models, such as GPT-4o, GPT-4o Mini, Anthropic’s Claude 3.5 Haiku and Sonnet, or Meta’s Llama 3?

In addition to supporting multimodal inputs such as images, video, and audio, Gemini 2.0 Flash now supports multimodal output, including natively generated images combined with text and steerable, multilingual text-to-speech audio. It can also natively call tools like Google Search, execute code, and use third-party user-defined functions.
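For readers curious what "calling user-defined functions" means in practice, here is a minimal sketch of the general pattern, not Google's actual API. It assumes the model emits a structured request naming a function and its arguments, and the application looks that function up and runs it. The tool name `get_weather`, the JSON shape, and the `dispatch` helper are all hypothetical and exist only for illustration.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    """Hypothetical user-defined tool; returns canned data for this sketch."""
    return f"Sunny in {city}"


# Registry of functions the application chooses to expose to the model.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> Any:
    """Parse a structured tool call and run the matching registered function."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        raise ValueError(f"Unknown tool: {call['name']}")
    return func(**call.get("args", {}))


# Assumed shape of what a model might emit when it decides to use a tool.
model_output = '{"name": "get_weather", "args": {"city": "London"}}'
result = dispatch(model_output)
print(result)
```

In a real integration, the result would be sent back to the model so it can compose its final answer. The key design point is that the application, not the model, decides which functions are exposed and actually executes them.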

Gemini 2.0 Flash is available as an experimental model to developers via the Gemini API in Google AI Studio and Vertex AI. Multimodal input and text output are accessible to all developers, while text-to-speech and native image generation are available to early-access partners. General availability is scheduled for January, along with additional model sizes.

Gemini 2.0 Flash is also available in the Gemini app, Google’s AI assistant and its equivalent of OpenAI’s ChatGPT.

Google enters the agentic era

With the release of Gemini 2.0, Google has clearly outlined its plan for AI, which includes enhancing all Google apps and services with AI and advancing the development of AI agents.

The first part of Google’s AI plan is already underway. Various Google apps and services, from Search to Gmail and even Maps, are receiving generative AI updates and new features, all powered by Gemini models.

The second part of Google’s AI plan, AI agents, is still in development, but Google has offered a glimpse of what it envisions the agentic era will look like.

The first version of Google’s AI agents will most likely look like the recently revealed Project Mariner. Project Mariner is a Google Chrome extension featuring an AI chatbot that can process complex requests, break them into smaller tasks, and complete them by taking control of the user’s browser.

Project Mariner is currently available only to trusted testers, with no information yet on when it will be publicly available.

One interesting detail I noticed during the Project Mariner demo is the glowing blue edges, which indicate that the AI has taken control of the screen. It seems this is how tech companies plan to signal when AI is in control. Apple demonstrated something similar earlier this year with glowing edges appearing during interactions with Siri.

Another example of the first wave of Google’s AI agents is Deep Research. This mode in the Gemini app handles the heavy lifting of doing research, analysing results, and preparing a report on your behalf. Additionally, there is Jules, an AI coding assistant similar to GitHub Copilot or Devin, which allows you to describe a new feature or bug, and the assistant writes the code for you.

However, Google does not intend to stop at chatbots. Its vision is to develop highly capable AI assistants that can comprehend complex scenarios, devise multi-step plans, and execute them on behalf of the user. If Project Mariner is a showcase of what we can have now, then Project Astra is painting a picture of what is possible.

First introduced at the Google I/O 2024 event, Project Astra is what Google describes as a universal AI assistant, designed to serve as a personal assistant for everyday life. The demos shown so far envision an app that integrates multiple sources of information—photos, videos, audio, and geolocation—to provide quick and accurate responses to user questions.

With the release of Gemini 2.0, Project Astra can now converse in multiple languages and mixed languages, with an improved understanding of accents and uncommon words. It now also has up to 10 minutes of in-session memory and can recall previous conversations. Additionally, Project Astra can now use other apps like Google Search, Maps, or Lens, while delivering faster responses.

Google has not announced a release date for Project Astra. The company has only stated that it is expanding the trusted tester program to include more participants, including a small group that will soon begin testing Project Astra on prototype glasses.

Google’s vision for its agentic era is interesting. However, what we have so far are mostly polished demos and promises of possibly releasing these tools for widespread use at some point in the future. The true test of Google’s AI agents will be whether they prove useful in everyday life or remain as interesting tech demos in search of practical applications.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it.

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is generous support for the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

How Neuralink’s chief competitor is tapping into the brain without surgery

Meet Synchron, a neurotech company developing a brain-computer interface (BCI) that does not require brain surgery to implant. Instead, the device is inserted via the jugular vein, which runs up the neck into the brain, using a catheter. Once in place, the implant unfolds and begins reading brain signals. Synchron has conducted successful trials in Australia and the US, and the company’s BCI has received a Breakthrough Device designation from the FDA, recognising its potential to address debilitating conditions. In 2025, Synchron plans to conduct a new trial with a commercially available system and aims to expand its applications to treat other brain and nervous system conditions.

Brain Stimulation Helps People Walk After Spinal Injury

In a groundbreaking study, Swiss researchers used deep brain stimulation (DBS) to improve walking ability in two patients with long-standing incomplete spinal cord injuries. Building on initial research that mapped brain activity in mice recovering from spinal cord injuries, scientists identified an unexpected target—the lateral hypothalamus, a region previously associated with functions like feeding, reward processing, and arousal, but not locomotion. When DBS was applied to the lateral hypothalamus in human patients, there was an immediate improvement in walking ability, with positive effects persisting even after the electrodes were turned off. The study has been widely praised, with experts calling it a "tour de force" for its potential to transform neurosurgery, neurobiology, and rehabilitation.

Those designer babies everyone is freaking out about – it’s not likely to happen

Whenever the topic of designer babies is raised, the same concerns about selecting for traits like eye colour, intelligence, and athleticism soon follow. However, as this article argues, designing babies with specific traits remains scientifically improbable. Genetics is far more complex than a “single gene for X”, and editing DNA to achieve "desirable" traits can have unforeseen side effects, such as increased risks of other diseases. The author stresses the importance of ethical and responsible use of genetic technologies, cautioning against sensationalised claims or exploitative practices that misrepresent the realistic capabilities of science.

🧠 Artificial Intelligence

Sora is here

OpenAI has finally made Sora, its video generation model, publicly available (except in the United Kingdom, Switzerland, and the European Economic Area, where it is not yet accessible). Sora is included in the ChatGPT Plus subscription, allowing up to 50 videos at 480p resolution or fewer at 720p per month. The Pro plan provides 10 times more usage, higher resolutions, and longer durations. OpenAI acknowledges that the current version of Sora has limitations, including unrealistic physics and difficulties with complex or prolonged actions. For safety, all videos generated by Sora carry C2PA provenance metadata and, by default, a visible watermark. Additionally, OpenAI is actively blocking harmful misuse, such as child sexual abuse material and sexual deepfakes.

Elon Musk wanted an OpenAI for-profit

The feud between OpenAI and Elon Musk continues. About a month ago, Musk’s legal team released a series of emails between Musk and OpenAI leaders, attempting to portray Musk as the betrayed party. Now, OpenAI has responded with its side of the story. According to OpenAI, Musk supported the idea of transitioning to a for-profit structure, even creating a for-profit as OpenAI’s proposed new structure. However, OpenAI leadership rejected Musk’s terms, which would have given him control over the company, leading to Musk’s departure. In its statement, OpenAI calls on Musk to end his legal battles, stating that both OpenAI and xAI, Musk’s AI company, should “be competing in the marketplace rather than the courtroom.”

Devin is now generally available

Devin, an AI software developer from Cognition AI, is now publicly available for $500 per month. Judging by Cognition's own recommendations for its use, the tool aims to replace junior engineers or handle relatively simple coding tasks.

Anthropic’s 3.5 Haiku model comes to Claude users

Claude 3.5 Haiku is now available on the Claude platform for both web and mobile users. According to Anthropic, Claude 3.5 Haiku outperforms or matches the company’s previous flagship model, Claude 3 Opus, on certain benchmarks, and excels in tasks such as coding recommendations, data extraction and labelling, and content moderation. The model can produce longer text outputs and has an updated knowledge cutoff, but lacks the ability to process images.

Amazon opens new AI lab in San Francisco focused on long-term research bets

Amazon has opened Amazon AGI SF Lab, a San Francisco-based team focused on developing AI agents capable of performing actions in both the digital and physical worlds. The lab, which is seeded by members recently hired (or absorbed) from Adept, will combine startup-like agility with Amazon’s resources. Each team in the lab will have the autonomy to move fast and the long-term commitment to pursue high-risk, high-payoff research. As David Luan, the head of the newly formed lab, writes, the team is particularly excited about combining large language models with reinforcement learning to solve reasoning and planning, learned world models, and generalizing agents to physical environments.

Leading AI Companies Get Lousy Grades on Safety

The Future of Life Institute has released a report grading leading AI companies on their risk assessment efforts and safety procedures. Anthropic earned the highest grade, a C, highlighting the need for industry-wide improvement. “The purpose of this is not to shame anybody,” says Max Tegmark, an MIT physics professor and president of the Future of Life Institute. “It’s to provide incentives for companies to improve.”

Apple reportedly developing AI server chip with Broadcom

The Information reports that Apple is working with Broadcom to develop its first AI-focused server chip, known internally as Baltra. Broadcom's role reportedly focuses on the networking technology that connects devices to networks for AI processing. The design of the chip is expected to be completed within 12 months. If the report is true, Apple will join other tech giants, such as Google, Amazon, Microsoft and Meta, in designing its own AI chips.

GenCast predicts weather and the risks of extreme conditions with state-of-the-art accuracy

Google DeepMind has released GenCast, a weather-predicting AI model capable of forecasting weather up to 15 days in advance with greater accuracy than any existing system. According to researchers, a single Google Cloud TPU v5 takes just 8 minutes to generate a 15-day forecast, compared to the hours required by supercomputers with tens of thousands of processors. Google DeepMind has made GenCast fully open, sharing both code and weights to support the wider weather forecasting community.

How Mark Zuckerberg has fully rebuilt Meta around Llama

With the release of Llama, an open large language model, Meta has become an unlikely champion and leader of the open AI community. This article takes us behind closed doors at Meta and shares the internal discussions between the proponents of an open approach, who include Yann LeCun, Meta’s chief AI scientist, and Joelle Pineau, VP of AI research and head of Meta’s FAIR (Fundamental AI Research), and those opposed to making Llama freely available. According to this story, a key figure in defining Meta’s AI strategy was Mark Zuckerberg, who made the final decision to release Llama 2 as an open model.

▶️ Inside a MEGA AI GPU Server with the NVIDIA HGX H200 (19:35)

Patrick from ServeTheHome got his hands on the Nvidia HGX H200, an AI-focused computing powerhouse featuring eight Nvidia H200 GPUs with a combined 1.128 terabytes of memory and delivering 32 petaFLOPS of AI performance. In this video, Patrick opens up the server, walks us through its components in detail, and puts it through various benchmarks, including power consumption tests. It is always fascinating to get an up-close look at the hardware powering modern AI models and see how those AI computing monsters are built.
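As a quick sanity check on those headline numbers, here is a small back-of-the-envelope sketch. It assumes 141 GB of HBM3e per H200, the figure from Nvidia's public spec sheet rather than from the video summary above, and simply divides the quoted 32 petaFLOPS evenly across the eight GPUs.

```python
GPUS_PER_SERVER = 8           # from the summary above
HBM_PER_GPU_GB = 141          # assumption: Nvidia's published H200 memory capacity
TOTAL_AI_PETAFLOPS = 32       # from the summary above

# Combined GPU memory, in gigabytes and (decimal) terabytes.
total_memory_gb = GPUS_PER_SERVER * HBM_PER_GPU_GB
total_memory_tb = total_memory_gb / 1000

# Rough per-GPU share of the quoted AI performance figure.
petaflops_per_gpu = TOTAL_AI_PETAFLOPS / GPUS_PER_SERVER

print(f"{total_memory_gb} GB = {total_memory_tb} TB of combined GPU memory")
print(f"{petaflops_per_gpu} petaFLOPS per GPU")
```

The combined memory works out to 1,128 GB, which matches the 1.128 terabytes quoted above, and the performance figure comes to 4 petaFLOPS per GPU.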

🤖 Robotics

Cruise employees ‘blindsided’ by GM’s plan to end robotaxi program

GM announced it would no longer fund Cruise, its self-driving car subsidiary, and would absorb the company into its own efforts to develop autonomous personal vehicles. This decision surprised everyone, including Cruise employees, who learned of it through a Slack message and a brief all-hands meeting. GM acquired Cruise in 2016 with high hopes, projecting $50 billion in annual revenue from robotaxis by 2030. However, delays in commercializing autonomous vehicles, coupled with regulatory and operational setbacks, derailed these plans. A key blow came in October 2023, when Cruise lost its permits to operate in California following a high-profile robotaxi incident, leading to layoffs and GM taking direct control. Compounding the challenges, GM scrapped Cruise’s custom-built Origin robotaxi in 2024, choosing to use the Chevrolet Bolt for autonomous development, marking a significant departure from the ambitious original robotaxi model.

ANYbotics raises $60M to expand inspection robot deployments worldwide

Swiss robotics company ANYbotics has raised $60 million to support its global and US expansion and scale its operations. The company offers ANYmal, a four-legged robot designed to automate inspections in challenging environments across industries such as energy, mining, and chemicals, addressing labour shortages and enhancing safety. With a total of $130 million raised, ANYbotics is competing with companies like Boston Dynamics and Unitree in the field of four-legged inspection robots for industrial customers.

▶️ NASA’s Mars Chopper Concept (0:37)

Following the massive success of Ingenuity, the first flying machine to operate on another planet, NASA is planning to raise the bar by designing, building and sending a much bigger helicopter to Mars. Named Mars Chopper, the project is in the early conceptual and design stages. Beyond scouting, such a helicopter could carry scientific instruments to study terrains that rovers are unable to reach.

AI Company That Made Robots For Children Went Bust And Now The Robots Are Dying

Embodied, the maker of Moxie, an AI-powered social robot for autistic children, is shutting down, leaving parents to explain to their children why their $799 robotic companion will no longer function. Moxie relied on cloud-based large language models, making its operation unsustainable without the company. The closure highlights the fragility of cloud-dependent AI products and raises ethical concerns about the sustainability of such technology and the emotional impact of human-robot interactions.

▶️ Watch this bird-inspired robotic drone leap into the air (2:01)

Meet RAVEN (short for Robotic Avian-inspired Vehicle for multiple ENvironments), a hybrid between a drone and a robotic bird. Using its bird-like legs, RAVEN can walk and hop like a bird. And like a bird, it can also use its legs to leap into the air and then fly like a drone aeroplane. The team behind RAVEN believes the design principles used to build RAVEN could inspire the creation of even more versatile robots.

▶️ Torso 2 by Clone with Actuated Abdomen (1:02)

The humanoid robot developed by Clone Robotics is one of the most unusual designs I’ve ever seen. Instead of electric motors, Clone Robotics uses artificial muscle fibres to move its body, and this video demonstrates how the robot moves using these artificial muscles. For this demonstration, the team is using pneumatic actuators with off-the-shelf valves. The final product will use hydraulic actuators and be much quieter.

💡Tangents

Intel on the Brink of Death

The last decade was not good for Intel. The company has lost its leading position in the processor market, completely missed the AI wave and is falling behind AMD, Nvidia and TSMC, its main competitors. This article from SemiAnalysis documents the 10 years of bad decisions and failures that put Intel where it is now. It also highlights the role of Intel’s board in the company’s downfall: choosing one bad CEO after another, deprioritising innovation in favour of financial engineering, and allowing years of cultural rot to set in. The article concludes by urging Intel to focus on its fab business, suggesting that this is the company’s best chance for survival.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"