Cracks in the Scaling Laws - Sync #493

Plus: OpenAI's new AI agent; AlphaFold3 is open-source... kind of; Amazon releases its new AI chip; Waymo One is available for everyone in LA; how can humanity become a Kardashev Type 1 civilization

Hello and welcome to Sync #493!

This week, we will take a closer look at the leaks emerging from leading AI labs, suggesting that researchers are hitting a wall, and explore what can be done to overcome it.

Elsewhere in AI, OpenAI is reportedly planning to release a new AI agent capable of controlling users’ computers. Meanwhile, Anthropic has teamed up with Palantir and AWS to sell its AI models to defence customers, X is testing a free version of its AI chatbot Grok, and AI Granny is wasting scammers’ time.

In robotics, Waymo One is now available to everyone in LA, Agility Robotics’ Digit has found a new job, and a drone has successfully delivered blood samples between two hospitals in London.

Additionally, AlphaFold3 is now open source (at least the code is), one researcher has demonstrated how a cluster of human brain cells learned to control a virtual butterfly, and YouTube’s chief mad biologist has found a way to turn shrimps into fabric.

Enjoy!

Cracks in the Scaling Laws

If someone were to write a history book about generative AI and large language models, two milestones in AI research would probably get their own chapters. The first milestone happened in 2017 when eight Google researchers published a paper titled Attention Is All You Need. It was a landmark paper which introduced the transformer, the architecture that underpins all modern large language models. The second chapter would be dedicated to a pair of events: the launch of ChatGPT in November 2022 and the release of GPT-4 in March 2023. That’s when large language models entered the global stage and kickstarted the AI revolution we are in today.

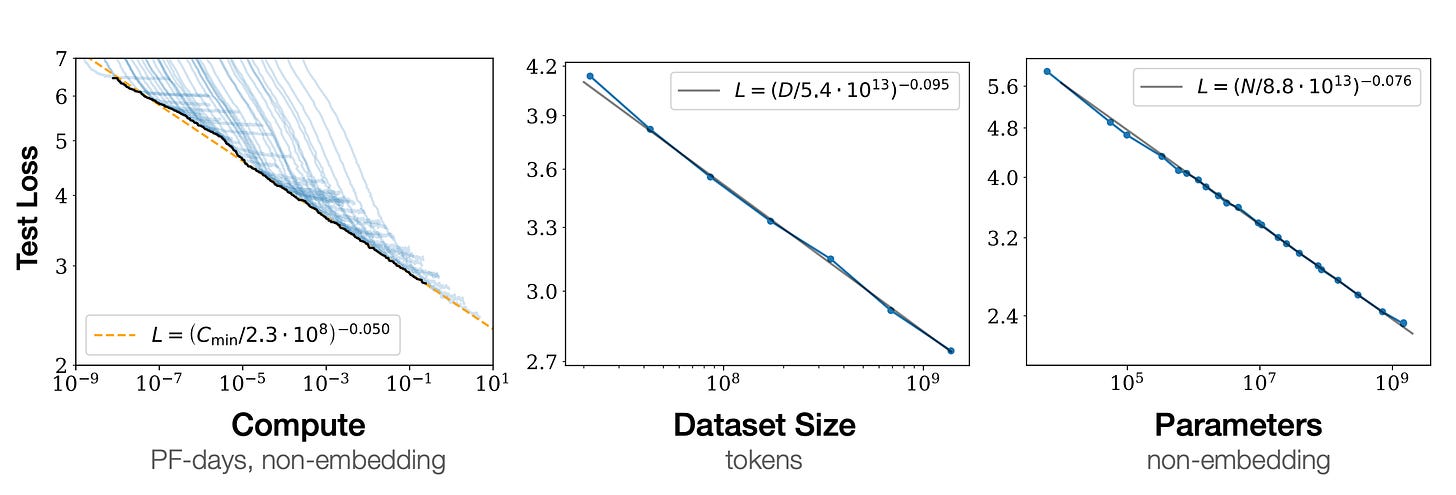

However, between these two milestones, there was another event that bridged them: the publication, in January 2020, of an OpenAI paper titled Scaling Laws for Neural Language Models. Essentially, the researchers found that the performance of a large language model improves predictably as the compute used for training, the size of the training dataset and the number of model parameters increase.
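To make "improves predictably" concrete, here is a minimal sketch of the kind of power-law relationship scaling laws describe, in which loss falls as a fixed power of model size. The function name and the constants `ALPHA_N` and `N_C` are illustrative assumptions chosen for this example, not figures quoted in this newsletter.

```python
def power_law_loss(n_params: float, n_c: float, alpha: float) -> float:
    """Predicted loss under a simple power law: L(N) = (N_c / N) ** alpha."""
    if n_params <= 0:
        raise ValueError("n_params must be positive")
    return (n_c / n_params) ** alpha


# Illustrative constants (assumptions for this sketch, not fitted here).
ALPHA_N = 0.076  # how quickly loss falls as the model grows
N_C = 8.8e13     # scale constant, in number of parameters

# Each row is a model ten times larger than the previous one.
for n in (1e8, 1e9, 1e10, 1e11):
    print(f"{n:.0e} parameters -> predicted loss {power_law_loss(n, N_C, ALPHA_N):.3f}")
```

Under these assumed constants, every tenfold increase in model size multiplies the predicted loss by the same factor, 10 ** -0.076, or roughly a 16% reduction. The gains are predictable, but each step costs ten times more than the one before it.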

Later follow-up papers from OpenAI and DeepMind confirmed the original results and expanded upon them. For OpenAI, the discovery of scaling laws was a green light for fully committing to large language models. If the scaling laws hold, then with enough computing power, a big enough training dataset and a big enough model, a highly capable AI model approaching human-level performance is within reach.

And OpenAI went all in on large language models. In May 2020, four months after the Scaling Laws for Neural Language Models paper was published, Microsoft announced that it had built one of the largest supercomputers of the time, containing 10,000 GPUs, exclusively for OpenAI to train massive models. That set the AI industry on the path it is on now: building more and more powerful supercomputers, fed with ever-increasing amounts of data, to train larger and more capable models.

That trend of throwing more resources at the model and getting better results held. We’ve seen significant leaps in performance with each new generation of large language models. This fueled optimism and raised expectations for the next generation of AI models (GPT-5, Gemini 2, and Claude 4), which are now expected to reach entirely new levels of performance, massively surpassing their predecessors.

That, however, does not seem to be the case. According to a report from The Information, OpenAI’s new model, codenamed Orion, outperforms GPT-4, but by a smaller margin than expected. Reportedly, the jump in performance is smaller than the one from GPT-3 to GPT-4. And OpenAI is not the only company experiencing this slowdown in the rate of improvement. The Verge reports that a similar thing is happening at Google DeepMind, too, saying that Gemini 2 “isn’t showing the performance gains the Demis Hassabis-led team had hoped for.”

That puts leading AI companies, including OpenAI, Google DeepMind and Anthropic, in a tricky spot. Over the last two years, these companies have consistently delivered groundbreaking performance with each new model release. However, the reported gains are now said to be smaller than before. If the leaks are accurate, GPT-5 and Gemini 2 will be better than their predecessors, but they won’t live up to the hype that comes with being the next major upgrade.

The report from The Information suggests that OpenAI is looking for new ways to improve the performance of its next-generation model in the face of a dwindling supply of new training data. These include using synthetic data produced by AI models and new post-training techniques.

But there is also another path forward: adding new functionalities instead of massively improving the performance of next-generation models. That way, AI companies can deliver new value to their customers without delivering a new level of raw performance. We already see this strategy in action. In September, OpenAI released o1, its first reasoning model. In October, Anthropic revealed Computer Use, which allows Claude to take control of a user’s computer and perform actions on their behalf. OpenAI and Google are rumoured to be introducing a similar feature very soon.

Either way, the leading AI companies now have to justify the billions of dollars poured into them. One way or another, they have to find a way to overcome the wall they are facing.


🔮 Future visions

▶️ How Can Humanity Become a Kardashev Type 1 Civilization? (22:18)

The Kardashev Scale is a theoretical framework that measures a civilisation's technological advancement based on its ability to harness and utilise energy, ranging from planetary (Type I) to stellar (Type II) and galactic (Type III) levels. In this video, Matt O'Dowd from PBS Space Time explains the Kardashev Scale, explores where humanity currently stands on it, and discusses how we can become a Type I civilisation.

🧠 Artificial Intelligence

OpenAI Nears Launch of AI Agent Tool to Automate Tasks for Users

Bloomberg reports that OpenAI is preparing to launch a new AI agent codenamed “Operator” that can use a computer to take actions on a person’s behalf, such as writing code or booking travel. According to the report, OpenAI plans to release Operator in January as a research preview and make it available to developers through its API. Operator is part of a broader trend in the AI industry to create agents capable of taking control of a user’s computer. Anthropic has already released Computer Use, and Google’s upcoming model, Gemini 2.0, is rumoured to have similar capabilities.

Amazon ready to use its own AI chips, reduce its dependence on Nvidia

Amazon is gearing up to release its new AI chip, Trainium 2, in December. Trainium 2 is aimed at training large AI models and is already being tested by companies like Anthropic, Databricks, Deutsche Telekom, and Ricoh. Amazon claims Trainium 2 delivers four times the performance of Trainium 1, but avoids direct comparisons with Nvidia. The new chip is being made by Annapurna Labs, which was acquired by Amazon in 2015.

OpenAI to present plans for U.S. AI strategy and an alliance to compete with China

OpenAI has proposed a blueprint for US AI strategy, painting AI as a transformative technology comparable to electricity, with the potential for widespread economic and societal benefits. The blueprint proposes the creation of AI economic zones, leveraging nuclear power expertise, and significant public-private investments to establish robust AI infrastructure in the US, with a particular focus on competing with China’s advancements in AI and nuclear power. Additionally, the plan envisions a North American AI alliance, substantial job creation, GDP growth, and integration of AI with local industries.

X is testing a free version of AI chatbot Grok

Some X users have noticed in recent days that the company is testing a free version of its AI chatbot, Grok, in certain regions. Currently, access to Grok is limited to Premium and Premium+ subscribers. The free version comes with usage limits: 10 queries per two hours with the Grok-2 model, 20 queries per two hours with the Grok-2 mini model, and three image analysis questions per day. Additionally, to use Grok for free, accounts must be at least seven days old and linked to a phone number.

Anthropic teams up with Palantir and AWS to sell AI to defense customers

Anthropic is teaming up with Palantir and Amazon Web Services (AWS) to bring its Claude AI models to US intelligence and defence agencies. The Claude family of AI models is now integrated into Palantir’s defense-accredited Impact Level 6 (IL6) environment, hosted on AWS, which enables Claude to be used in classified government systems. Anthropic joins other AI vendors, such as Meta with its Llama models and OpenAI, in pursuing US defence partnerships.

Even Microsoft Notepad is getting AI text editing now

Everything seems to be getting some form of AI update these days. Even Microsoft Notepad, the simple text editor, is getting an AI-powered feature called Rewrite. Rolling out in preview to Windows Insiders, the feature allows users to use AI to “rephrase sentences, adjust tone, and modify the length of your content,” according to the Windows Insider Blog. Microsoft Paint is also getting new AI features: the Generative Fill feature enables users to make additions to an image based on a prompt, while Generative Erase removes parts of an image and seamlessly blends the empty space.

Newest Google and Nvidia Chips Speed AI Training

This article details the latest test results of the MLPerf v4.1 benchmark, which evaluates AI hardware performance across six AI tasks: recommendation systems, GPT-3 and BERT-large pre-training, Llama 2 70B fine-tuning, object detection, graph node classification, and image generation. The benchmark featured the first results for Nvidia’s next-generation Blackwell architecture. The B200 GPU demonstrated double the performance of the H100 for GPT-3 training and LLM fine-tuning, along with a 64% improvement in recommendation systems and a 62% improvement in image generation. The benchmark also included Google’s latest AI accelerator, Trillium, which achieved up to a 3.8-fold performance improvement over the v5e TPU, an 8% improvement in GPT-3 training over the v5p TPU, and a two-minute reduction in training time. Overall, the results show that AI training performance is improving at twice the rate of Moore’s Law, and that advances in software and networking enable near-linear scaling with larger systems. However, energy consumption is a growing concern, and this is the first time MLPerf has started to track power usage.

Meta is reportedly working on its own AI-powered search engine, too

According to a report from The Information, Meta does not want to be left behind and is also building an AI-powered search engine. The company joins others, including Microsoft, Google, OpenAI, and Perplexity, in building AI-powered search engines.

EU AI Act: Draft guidance for general purpose AIs shows first steps for Big AI to comply

The European Union has published a draft Code of Practice for General-Purpose AI (GPAI) providers under the AI Act. The Code offers guidance for compliance with the AI Act, targeting GPAI makers like OpenAI, Google, Meta, Anthropic, and others. The Code focuses on ensuring transparency, safety, and risk mitigation for powerful AI systems, particularly those deemed to pose systemic risks. It aims to address compliance challenges while accommodating providers of various sizes, including SMEs and open-source projects. Feedback on the draft is open until November 28, 2024, with the final version expected by May 1, 2025.

‘AI Granny’ is happy to talk with phone scammers all day

This is one of the most beautiful uses of AI I’ve seen in recent months. O2 has released dAIsy, an AI Granny with infinite patience whose only job is to waste scammers’ time by being as polite, and as annoying to scammers, as an artificial grandma can be. It would be interesting to see a follow-up in a few months to check if dAIsy has been effective or if this is simply a PR stunt to raise awareness about AI-powered phone scams.

Google DeepMind has a new way to look inside an AI’s “mind”

One area of AI research is mechanistic interpretability, which focuses on reverse-engineering how neural networks make decisions by analysing their internal workings. This article highlights work done by researchers at Google DeepMind to better understand the inner workings of AI. Using sparse autoencoders, which examine neural network layers, researchers can identify features (data categories representing broader concepts) and track their progression from input to output. Mechanistic interpretability could help ensure AI systems align with human intentions and values, paving the way for more trustworthy and safer AI by enabling precise control over their operations.

Oasis: A Universe in a Transformer

This is a very interesting project. Oasis is a video game in which every frame is generated by an AI model. There is an online playable demo, and the model and its weights are available for download to run the AI-generated version of Minecraft on your computer. I tried it and although it looks and feels like Minecraft, the model didn’t fully replicate Minecraft and the game was a little bit off. Nevertheless, Oasis is impressive and could offer a glimpse into how games of the future might be created. It also shows the potential generative AI has to generate whole virtual worlds not only for gaming but also for simulations and training robots.


🤖 Robotics

Waymo One is now open to all in Los Angeles

Waymo One, Alphabet’s robotaxi service, is now open for everyone to use in Los Angeles, marking its largest expansion yet. The service was previously available to a select group of users who, according to the company’s press release, rated it highly. Los Angeles is the third city where the company’s robotaxi service is now fully available, following San Francisco and Phoenix.

Schaeffler plans global use of Agility Robotics’ Digit humanoid

Agility Robotics’ Digit has found another job. Schaeffler AG, a global leader in motion technology, has made a minority investment in Agility and is buying an undisclosed number of Agility Robotics humanoid robots for use across its global plant network. The companies did not disclose the size of the investment or what the robots will be used for.

▶️ Drone delivery of blood samples between London hospitals (4:49)

In this video, Wing, Alphabet’s drone delivery company, and Apian, a UK drone delivery startup specialising in medical deliveries, demonstrate how drones are used to transport blood samples between two hospitals in London. The deliveries are part of a six-month trial to assess the feasibility of using drones for medical logistics in London and across the UK.

Researchers use imitation learning to train surgical robots

Researchers have successfully trained a surgical robot to execute procedures as skillfully as human surgeons by using hundreds of surgical videos as training data. The robot learned to perform three fundamental surgical tasks: manipulating a needle, lifting body tissue, and suturing. It also demonstrated adaptability, such as picking up a dropped needle without specific instructions. This approach eliminates the need to program each step manually, though there is still a long way to go before autonomous surgical robots become a reality.

This Is a Glimpse of the Future of AI Robots

Meet Physical Intelligence, a robotics startup aiming to make household robots a reality. The company has developed a foundation model called π0 (pi-zero), enabling robots to perform a wide range of household chores. Robots trained with π0 have performed tasks such as folding laundry, retrieving clothes from a dryer, cleaning a cluttered table, and building cardboard boxes. While π0 is not yet perfect, it paves the way for capable and practical household robots.

🧬 Biotechnology

AI protein-prediction tool AlphaFold3 is now open source

Six months after revealing AlphaFold3 to the world, Google DeepMind is finally open-sourcing its latest protein-predicting model. Well, kind of open-sourcing. DeepMind has made the code for AlphaFold3 available on GitHub for everyone to view and download. However, the model parameters have not been made publicly available. DeepMind will provide the parameters exclusively for academic use and prohibits commercial use of AlphaFold3.

Lab-grown human brain cells drive virtual butterfly in simulation

Using FinalSpark’s Neuroplatform, a software engineer created a virtual butterfly controlled by human brain organoids (lab-grown clusters of human brain cells). The project demonstrates the potential of biological neural networks (BNNs), which promise to consume far less energy than artificial neural networks (ANNs) while being more adaptive and better at zero-shot learning and pattern recognition.

Stem Cell Transplant 'Black Box' Unveiled in 31-Year Study of Blood Cells

Researchers from the Wellcome Sanger Institute, in collaboration with the University of Zurich, have published a detailed study tracing the long-term behaviour of stem cells post-transplant. The study found that transplants from younger donors—typically in their 20s or 30s—resulted in around 30,000 stem cells surviving long-term, while older donors had only 1,000 to 3,000 surviving stem cells. These results offer new insights into improving stem cell transplant procedures, which are used as treatments for blood cancers such as leukaemia and lymphoma.

▶️ Turning Shrimp into Woven Fabric (16:04)

The Thought Emporium, YouTube’s chief mad biologist, shows in this video how to turn shrimp into fabric. It sounds like an insane idea, but it’s less far-fetched than it seems.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
