Claude can now control your computer - Sync #490

Plus: OpenAI plans to release Orion by December; DeepMind open-sources SynthID-Text; Tesla has been secretly testing Robotaxi; US startup that screens embryos for IQ; Casio's robot pet; and more!

Hello and welcome to Sync #490!

It’s been a big week for Anthropic. The AI company released two new models and a very interesting tool that allows an AI agent to take control of a user’s computer. We will take a closer look at both in the editorial section of this week’s issue of Sync.

Elsewhere in AI, rumours have emerged that OpenAI is planning to release a new model, codenamed Orion, by December. Meanwhile, Google DeepMind has open-sourced SynthID-Text to help watermark and detect AI-generated text, Perplexity is looking to fundraise at an $8B valuation, and Apple employees reportedly believe the company is about two years behind its competitors in AI.

In robotics, Tesla has been secretly testing a robotaxi service for most of the year, a humanoid robot gets up off the ground like no human would, and Casio thinks an AI-powered robotic furball will replace your pet.

Also in this issue of Sync: a US startup that charges couples to screen embryos for IQ and other traits, a new “smart” insulin that automatically switches on and off depending on blood glucose levels, and speculation on what our posthuman descendants might look like.

Enjoy!

Claude can now control your computer

This week, Anthropic announced two new things.

The first is a pair of new models: an upgraded Claude 3.5 Sonnet and a brand-new Claude 3.5 Haiku.

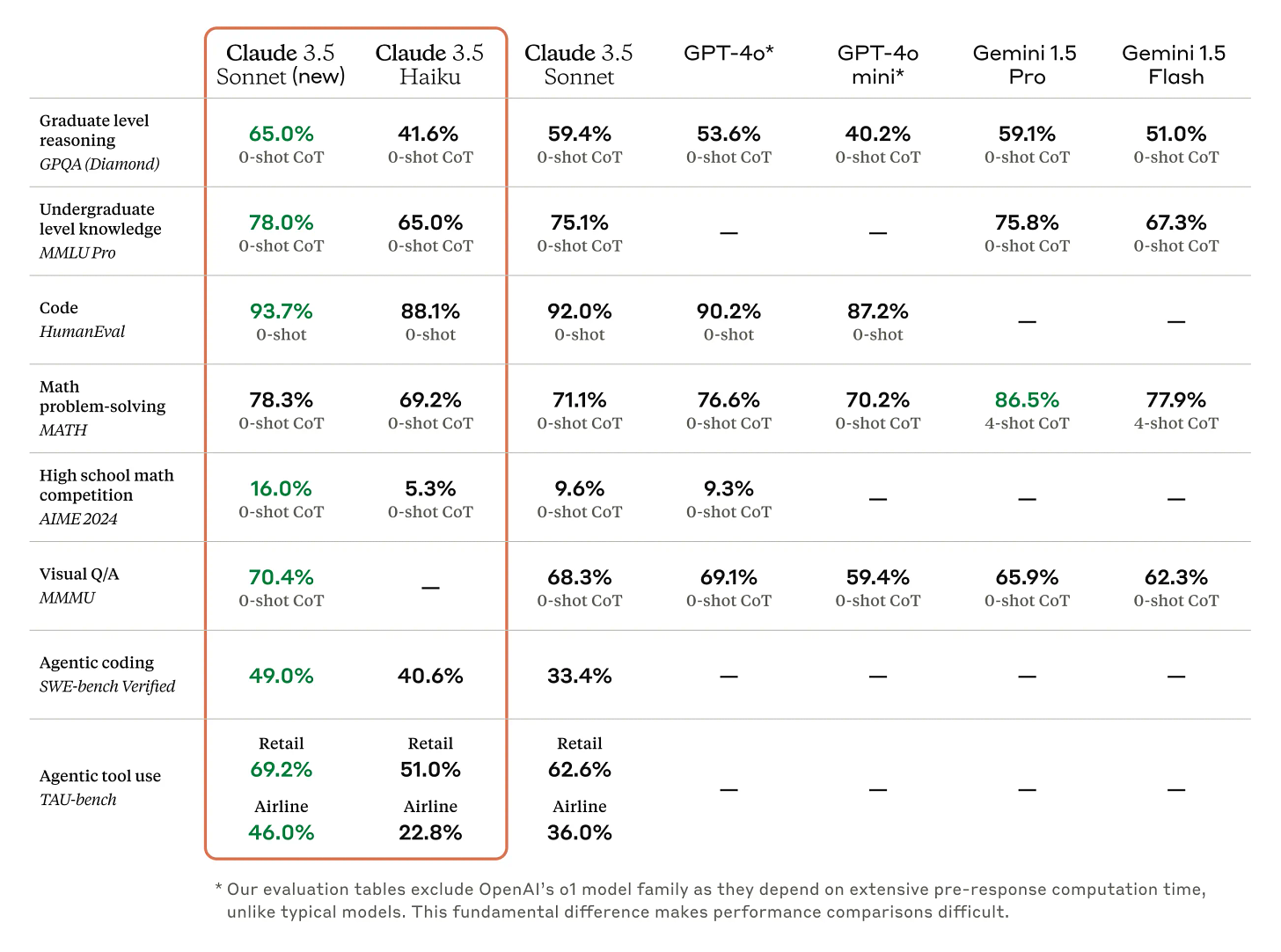

According to benchmarks provided by Anthropic, the new Claude 3.5 Sonnet is even better than the already impressive previous version. Anthropic specifically highlighted the new model’s coding abilities. On the coding-oriented SWE-bench Verified benchmark, its score rose from 33.4% to 49.0%, beating all publicly available models, including OpenAI’s o1-preview. The new Claude 3.5 Sonnet also shows improved scores in agentic tasks and tool use.

Claude 3.5 Haiku, meanwhile, focuses on speed and affordability. Anthropic says the new Haiku surpasses Claude 3 Opus, the largest model of the previous Claude generation, on many benchmarks, which says a lot about how much large language models have improved since Claude 3 was released in March 2024.

In benchmark results published by Anthropic, Claude 3.5 Haiku matches or exceeds GPT-4o mini, one of its main competitors. However, both Claude 3.5 Haiku and GPT-4o mini fall short of Google’s Gemini 1.5 Flash.

The new Claude 3.5 Sonnet is available to all users at the same price and speed as its predecessor. Claude 3.5 Haiku will be made available later this month through the Anthropic API, Amazon Bedrock and Google Cloud’s Vertex AI. Initially, it will accept only text inputs; Anthropic said image input will follow but did not specify when.

The second, more interesting thing Anthropic announced is Computer use. Essentially, Computer use allows Claude to see the user’s screen and control it: it can move the mouse, click buttons, fill in and submit forms, and open apps and webpages. The demo video Anthropic prepared illustrates very well how Computer use works.

As Anthropic writes, the purpose of Computer use is to provide a general tool for automating repetitive tasks. Anthropic also sees possible applications in software development, research and task orchestration.

Computer use is currently in public beta and can be accessed through the Anthropic API, Amazon Bedrock and Google Cloud’s Vertex AI.

After seeing what Computer use looks like, you might notice some similarities with Microsoft Recall, an upcoming Windows feature that records the screen and lets AI see what the user is doing and take some actions. However, Recall has faced opposition, with security experts raising concerns about its privacy and security. In response, Microsoft postponed the release but still intends to proceed with it.

Apple is proposing something similar with Apple Intelligence, where the new Siri can also view the screen and perform actions. Reportedly, Google is also working on a similar feature that may be unveiled in December.

What sets Anthropic’s Computer use apart from these efforts is that Anthropic offers it as an API. Microsoft, Apple, and Google plan to build their equivalents directly into their own products (Windows, iOS/macOS, and Chrome/Android, respectively), tailoring them more specifically to their users. Anthropic, meanwhile, opens Computer use to developers to incorporate into their own apps and services. Anthropic reports that companies such as Asana, Canva, Cognition, DoorDash, Replit, and The Browser Company have already begun using Computer use to automate tasks that require dozens, and sometimes even hundreds, of steps to complete.
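For developers, Computer use works as a tool definition passed to the Messages API: Claude replies with actions such as moving the mouse or typing text, the developer’s own code carries them out, and a fresh screenshot is sent back for the next step. The Python sketch below shows roughly what such a request might look like. It assumes the identifiers Anthropic published for the October 2024 beta (`claude-3-5-sonnet-20241022`, `computer_20241022`, `computer-use-2024-10-22`), which may change, and `describe_action` is a hypothetical stand-in that only describes each action rather than executing it.

```python
from typing import Any

# Identifiers assumed from Anthropic's October 2024 beta documentation;
# beta names change between versions, so check the current docs.
MODEL = "claude-3-5-sonnet-20241022"
COMPUTER_TOOL = "computer_20241022"
BETA_FLAG = "computer-use-2024-10-22"


def build_request(task: str, width: int = 1024, height: int = 768) -> dict[str, Any]:
    """Keyword arguments intended for client.beta.messages.create()."""
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [{
            "type": COMPUTER_TOOL,
            "name": "computer",
            "display_width_px": width,    # resolution of the screenshots Claude sees
            "display_height_px": height,
        }],
        "messages": [{"role": "user", "content": task}],
        "betas": [BETA_FLAG],
    }


def describe_action(tool_input: dict[str, Any]) -> str:
    """Hypothetical handler for the input of one tool_use block from Claude.

    A real agent would drive the mouse and keyboard here (for example with a
    tool such as xdotool) and reply with a tool_result holding a screenshot.
    """
    action = tool_input["action"]
    if action in ("mouse_move", "left_click_drag"):
        x, y = tool_input["coordinate"]
        return f"{action} to ({x}, {y})"
    if action in ("left_click", "right_click", "middle_click", "double_click"):
        return f"{action} at current cursor position"
    if action in ("type", "key"):
        return f"{action}: {tool_input['text']}"
    if action in ("screenshot", "cursor_position"):
        return f"report {action}"
    raise ValueError(f"unsupported action: {action}")
```

With the `anthropic` package installed and an API key configured, `client.beta.messages.create(**build_request("Open the calculator"))` should send such a request; a full agent repeats the request, action and screenshot cycle until Claude stops calling the tool.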

Once again, Anthropic has introduced an interesting idea to the AI industry. They previously did this with Artifacts, which made interacting with and guiding AI chatbots much easier. Artifacts was such a good idea that OpenAI replicated it with Canvas, and I wouldn’t be surprised if OpenAI releases its own version of Computer use in the coming weeks or months.

Now it is time for developers to see what they can do with Anthropic’s new models and with Computer use.

If you enjoy this post, please click the ❤️ button or share it.

Do you like my work? Consider becoming a paying subscriber to support it

For those who prefer to make a one-off donation, you can 'buy me a coffee' via Ko-fi. Every coffee bought is generous support for the work put into this newsletter.

Your support, in any form, is deeply appreciated and goes a long way in keeping this newsletter alive and thriving.

🦾 More than a human

A Neuralink Rival Says Its Eye Implant Restored Vision in Blind People

Developed by Science Corporation, Prima is an experimental implant that partially restores central vision in blind patients, allowing them to read, play cards, and complete puzzles. The implant is a 2-mm chip with 378 pixels, surgically placed under the retina. Specialized glasses capture visual information and project infrared light onto the chip, which converts it into electrical signals that the brain interprets as monochromatic, yellowish images. In a clinical trial with 32 participants, Prima improved average visual acuity from 20/450 to 20/160, with some participants reaching 20/63 using a manual zoom feature. For reference, normal visual acuity is considered to be 20/20, and in the US, legal blindness is defined as 20/200 or worse. However, five participants did not experience any improvement. Experts note that while the results are promising, further research is needed to understand how effective the implant is in daily life.
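Snellen fractions are easier to compare on the logMAR scale, the base-10 logarithm of the minimum angle of resolution, where 0.0 corresponds to 20/20 and each 0.1 step is one line on a standard ETDRS eye chart. The conversion below is a back-of-the-envelope illustration applied to the reported averages, not a figure published by the trial:

$$
\begin{aligned}
\text{logMAR} &= \log_{10}\!\left(\frac{x}{20}\right) \quad \text{for Snellen acuity } 20/x \\
20/450 &\;\to\; \log_{10} 22.5 \approx 1.35 \\
20/200 &\;\to\; \log_{10} 10 = 1.00 \quad \text{(US legal blindness threshold)} \\
20/160 &\;\to\; \log_{10} 8 \approx 0.90 \\
\Delta &\approx 1.35 - 0.90 = 0.45 \ \text{logMAR} \approx 4.5 \text{ chart lines}
\end{aligned}
$$

On this scale, the average result moves from well beyond the legal-blindness threshold to just under it.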

US startup charging couples to ‘screen embryos for IQ’

Heliospect Genomics, a US startup, offers embryo screening for IQ and other traits. The service, which costs up to $50,000 and is aimed at wealthy couples undergoing IVF, allows them to select embryos based on “IQ and the other desirable traits everyone wants,” including sex, height, risk of obesity, and risk of mental illness. Unsurprisingly, the service has raised ethical concerns about genetic enhancement, with critics warning of the risks of promoting “superior” genetics and reinforcing biological determinism. Additionally, questions have arisen over whether Heliospect fully disclosed its commercial intentions to the UK Biobank (which the startup used to develop its prediction tools) and whether regulatory controls on data use are adequate.

🔮 Future visions

▶️ Posthuman Pathways: Strange And Awesome Destinations On Humanity's Future Journeys (42:24)

In this video, Isaac Arthur imagines how humanity might look centuries, millennia, or even millions of years into the future. He explores possible paths for posthuman transformation, such as body augmentation, brain enhancements, virtual minds, and hive or distributed consciousness. These paths could lead to beings that resemble us but have perfected bodies or cybernetic augmentations, as well as more esoteric concepts like planet-sized consciousnesses and hybrid biological-machine entities, far beyond recognisable human forms. Isaac also examines how diverging from human roots could impact identity, consciousness, and human values.

🧠 Artificial Intelligence

OpenAI plans to release its next big AI model by December

Some time ago, leaks from OpenAI suggested the company was working on two new models: Strawberry and Orion. Strawberry has been released in the form of o1, leaving only Orion yet to be launched. According to an article from The Verge, OpenAI plans to release Orion by December this year. Reportedly, Orion could be up to 100 times more powerful than GPT-4. Additionally, Microsoft engineers are preparing to host Orion on Azure as early as November. Adding to the speculation, OpenAI CEO Sam Altman appeared to hint at the Orion release in a post referencing “winter constellations.” OpenAI, however, has denied the rumours.

Perplexity is reportedly looking to fundraise at an $8B valuation

Perplexity, an AI-powered search engine, is reportedly in talks to raise $500 million at an $8 billion valuation, according to The Wall Street Journal. That would more than double the $3 billion valuation from its last funding round, in which SoftBank invested.

Google shifts Gemini app team to DeepMind

Google announced it is moving the team behind its Gemini AI app to DeepMind. The move aims to streamline development and improve feedback loops, enabling faster deployment of Gemini’s models.

Google Is Now Watermarking Its AI-Generated Text

Google DeepMind is releasing SynthID-Text—a watermarking tool that hides a detectable signature in AI-generated text, aiming to help reliably identify AI-generated content. The team describes in detail how the watermarking tool works in a paper published in Nature. SynthID-Text is integrated into Google's Gemini chatbot and has been open-sourced for developers and businesses, though only Google and select partners currently have access to the detection tool. It is a positive step towards reliably detecting AI-generated content, but much work is still required for the broad adoption of such tools.
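The Nature paper describes the watermark as a change to how tokens are sampled, which it calls tournament sampling: several candidate tokens are drawn from the model, and scores derived from a secret key decide which candidate wins, so watermarked text scores higher on a keyed statistic. The toy Python sketch below illustrates only that idea; it is not Google’s implementation, and the key, the hash-based `g_value` function, the integer vocabulary and the uniform stand-in for a language model are all hypothetical.

```python
import hashlib
import random

VOCAB_SIZE = 1000              # hypothetical toy vocabulary: tokens are ints 0..999
NUM_LAYERS = 3                 # tournament depth m, so 2**m candidates per step
CONTEXT = 4                    # how many preceding tokens are hashed into the score
KEY = b"secret-watermark-key"  # hypothetical private watermarking key


def g_value(context: tuple[int, ...], token: int, layer: int) -> int:
    """Pseudorandom bit in {0, 1} from the key, recent context, token and layer."""
    data = KEY + repr((context, token, layer)).encode()
    return hashlib.sha256(data).digest()[0] & 1


def sample_from_model(rng: random.Random) -> int:
    """Stand-in for an LLM: every token is equally likely."""
    return rng.randrange(VOCAB_SIZE)


def tournament_step(history: list[int], rng: random.Random) -> int:
    """Draw 2**m candidates and keep the higher-g token in each pairwise match."""
    context = tuple(history[-CONTEXT:])
    candidates = [sample_from_model(rng) for _ in range(2 ** NUM_LAYERS)]
    for layer in range(NUM_LAYERS):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga, gb = g_value(context, a, layer), g_value(context, b, layer)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: pick either at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]


def generate(n: int, rng: random.Random, watermark: bool) -> list[int]:
    tokens: list[int] = []
    for _ in range(n):
        tokens.append(tournament_step(tokens, rng) if watermark else sample_from_model(rng))
    return tokens


def score(tokens: list[int]) -> float:
    """Mean g-value over all tokens and layers (about 0.5 without a watermark)."""
    total = count = 0
    for i, tok in enumerate(tokens):
        context = tuple(tokens[max(0, i - CONTEXT):i])
        for layer in range(NUM_LAYERS):
            total += g_value(context, tok, layer)
            count += 1
    return total / count
```

Because each token had to win a pairwise match at every layer, its g-values should be 1 about three times out of four, so `score(generate(200, random.Random(0), watermark=True))` should land near 0.75, while unwatermarked output stays near 0.5; only someone holding the key can compute that gap.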

Adobe starts roll-out of AI video tools, challenging OpenAI and Meta

Adobe has entered the generative video space with the release of the Firefly Video Model, which competes with similar AI video tools from OpenAI (Sora), ByteDance, and Meta, all unveiled in recent months. Adobe emphasises that its models are trained on data to which it has legal rights, thus avoiding potential legal issues related to the commercial use of generated videos. The model is currently available to users on a waiting list, and Adobe has not announced a full release date.

Lawsuit claims Character.AI is responsible for teen's suicide

Character.AI is being sued following the suicide of a 14-year-old boy. According to the lawsuit, the boy developed an emotional attachment to the bot, which allegedly encouraged him to take his own life. In response, Character.AI stated it would implement new safety features, including “improved detection, response, and intervention” for chats that violate its terms of service, as well as a notification when a user has spent an hour in a chat.

Apple internally believes that it’s at least two years behind in AI development

Apple is preparing to launch Apple Intelligence, which will bring new AI-powered features such as advanced notification summaries, Siri with personal context, Image Playground, and Genmoji. However, according to Bloomberg’s Mark Gurman, Apple employees believe the company is about two years behind industry leaders in AI development. Despite the gap, Gurman expects Apple to catch up by 2026, whether through internal development, hiring talent, or acquiring the necessary companies.

DeepMind and BioNTech Bet AI Lab Assistants Will Accelerate Science

Companies like Google DeepMind and BioNTech are developing AI tools to streamline scientific research by automating routine tasks such as experiment design, data analysis, and protocol creation. DeepMind is working on a science-focused language model to assist in designing experiments and predicting outcomes, while BioNTech has developed Laila, an AI assistant using Meta’s Llama 3.1 model, which helps in analysing DNA and visualising results. These AI tools aim to improve productivity by freeing scientists from mundane tasks, allowing them to focus on higher-value research work.

If you're enjoying the insights and perspectives shared in the Humanity Redefined newsletter, why not spread the word?

🤖 Robotics

Tesla has been testing a robotaxi service in the Bay Area for most of the year

Tesla has been testing a robotaxi service in the Bay Area for the past few months, Elon Musk said during the company’s earnings call. Employees have been able to summon an autonomously operated Tesla vehicle (with a safety driver behind the wheel) for trips using the company’s prototype ride-hailing app, Musk said. Additionally, Tesla is reportedly in talks to launch a robotaxi test programme in Palo Alto on a very small scale.

Casio thinks an AI-powered furball can replace your pet

Casio, a Japanese electronics company best known for its watches and calculators, is releasing a robotic pet called Moflin. The robot resembles a small, furry creature like a hamster or earless rabbit and is designed to form emotional bonds with its owner by acting cute and responding positively to attention, and negatively to neglect. Moflin is available for pre-order in Japan, with a release date set for November 7th and a price tag of about $400.

▶️ It seems GR-2 has found its next dream—becoming a bodybuilder! (1:11)

Another week, another video of a humanoid robot. This time, it’s from Fourier, showcasing how their humanoid robot, GR-2, gets up off the ground in a way no human would.

▶️ Swiss-Mile Robot vs. Employees (1:32)

Engineers from Swiss-Mile asked a simple question: who is faster—them or their four-legged robot with wheels? The result—only one human was faster than the robot, and that human was the world champion in high-speed urban orienteering.

🧬 Biotechnology

AI has dreamt up a blizzard of new proteins. Do any of them actually work?

Nothing speeds up innovation like good competition. In recent years, protein-design competitions, such as those run by Adaptyv Bio and Align to Innovate, have emerged, helping to sift the functional from the fantastical. Participants from all over the world, many without biology backgrounds, use AI tools like AlphaFold to innovate in drug development, enzyme creation, and laboratory reagents. These competitions lower the entry barrier to protein design, encourage method-sharing and collaboration across disciplines, and offer significant incentives, such as AWS credits, to accelerate research. However, they face challenges in setting objective criteria and promoting transparency to prevent overly narrow, potentially unproductive directions within the field.

‘Smart’ insulin prevents diabetic highs — and deadly lows

Scientists have designed a new form of insulin, called NNC2215, that can automatically switch on and off depending on glucose levels in the blood. In animals, this “smart” insulin effectively reduced high blood sugar while preventing levels from dropping too low. Further studies are needed to confirm the insulin’s effectiveness in the narrower glucose ranges typical for human diabetes patients, as well as to assess safety and cost. Other smart insulins are in development, aiming to offer a range of glucose-sensitive options tailored to individual needs.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!

My DMs are open to all subscribers. Feel free to drop me a message, share feedback, or just say "hi!"
