Top US AI companies commit at White House to AI safety - H+ Weekly - Issue #425

This week - Sergey Brin is back at Google to help out with AI; Google's Gemini AI might be out this year; Glorbo hoax tricks news bot; a BCI that unfolds in the ear; and more!

The quest to regulate AI continues. While the EU is moving ahead with the AI Act and China is introducing its own AI regulations, the US government is still discussing how to approach regulating the AI industry.

This week, a US Senate committee invited Anthropic co-founder Dario Amodei, UC Berkeley’s Stuart Russell and AI researcher Yoshua Bengio to share their opinions and expertise. The entire hearing is available on YouTube. If you don’t have 2 hours to spare, TechCrunch has a good summary of the hearing.

“We must be clear-eyed and vigilant about the threats emerging technologies can pose,” President Biden said, adding that the companies have a “fundamental obligation” to ensure their products are safe.

“Social media has shown us the harm that powerful technology can do without the right safeguards in place,” Biden added. “These commitments are a promising step, but we have a lot more work to do together.”

What did the leading US AI companies commit to, then? In short, the companies have agreed to make sure their products are safe and trustworthy before releasing them to the public.

The agreement calls for internal and external security testing of AI systems before their release. These tests will be carried out by independent experts. The companies agreed to share information across the industry and with governments, civil society, and academia on managing AI risks. This includes best practices for safety, information on attempts to circumvent safeguards, and technical collaboration. No further details have been disclosed on how that would look in practice.

The companies commit to investing in cybersecurity and insider threat safeguards to protect proprietary and unreleased model weights. They also commit to facilitating third-party discovery and reporting of vulnerabilities in their AI systems.

To ensure the AI systems are trustworthy, the companies agreed to watermark the content generated by their systems as such and to publicly report their AI systems’ capabilities, limitations, and areas of appropriate and inappropriate use. This report will cover both security risks and societal risks, such as the effects on fairness and bias. On top of that, the companies will also prioritise research on the societal risks that AI systems can pose, including avoiding harmful bias and discrimination and protecting privacy.

The companies commit to develop and deploy advanced AI systems to help address society’s greatest challenges such as cancer or climate change.

The White House also reiterated its commitment to working with its allies and partners to establish a strong international framework to govern the development and use of AI.

There is also no mention of any concrete structures or processes to ensure the agreement is upheld and followed.

It’s worth noting that those commitments are not legally binding, but they may create a stopgap while more comprehensive regulations are put in place. Still, the voluntary commitments show where the industry, academia and the government are converging: they echo what we heard during the first US Senate hearing and what companies such as OpenAI publicly propose for AI regulations in the US.

What do you think about those commitments? Share your thoughts in the comments.

From H+ Weekly

A couple of announcements before we jump into this week’s news roundup.

I promised to rename the newsletter by the end of June. I’ve run into problems with the name I have chosen and I’ve had to restart the entire process. My aim is to complete the name change by September.

In other news - H+ Weekly now has a Discord server! I want to create a community of people interested in the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human. If that sounds interesting to you, then feel free to join!

Next, I’m organising the first H+ Weekly Hangout! It will take place on Google Meet on Saturday, August 5th at 6 pm UK time. If you are interested in joining, put the date into your calendar and let me know by replying to this email. The link to the hangout is here. I’m looking forward to meeting you and discussing AI, robotics, biotech and transhumanism.

And the last announcement - I opened a page on Ko-Fi where you can support my work (and fuel my growing addiction to caffeine and bubble tea) through one-off donations.

Becoming a paid subscriber now would be the best way to support the newsletter.

If you enjoy and find value in what I write about, feel free to hit the like button and share your thoughts in the comments. Share the newsletter with someone who will enjoy it, too. That will help the newsletter grow and reach more people.

🦾 More than a human

A New Twist on Brain-Computer Interfaces

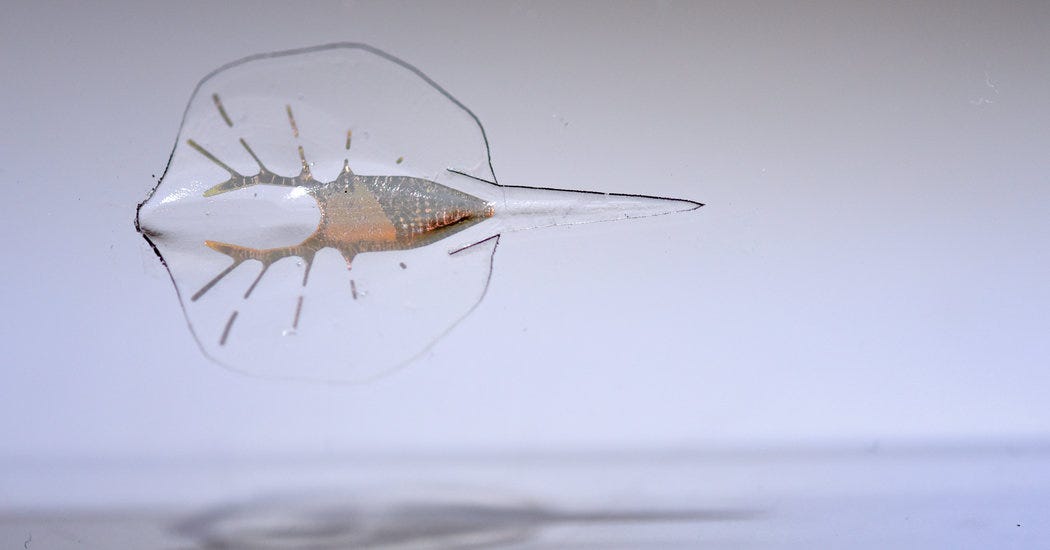

Researchers from Tsinghua University made a brain-computer interface that does not go onto the head but into the ear. The device, named SpiralE, unfolds itself after being placed in the ear canal and then starts measuring brain activity with EEG. According to the research paper, the device is effective at reading brain activity and does not affect the hearing of its users.

With inactivated gene, mice age faster but live longer

A gene called Myc is among the most important drivers of cancer in both mice and humans. When scientists knocked out that gene in mice, they discovered the mice started to rapidly age but their lifespan increased by 20%. “Because Myc is expressed in so many types of cancer, if you had a drug that could target this gene, it could be broadly applicable,” says Edward V. Prochownik, the lead author of the research. “But our study suggests that you have to be really careful because of potential side effects of premature aging, particularly if you’re developing this kind of drug to treat cancer in children.”

'Electronic skin' from bio-friendly materials can track human vital signs with ultrahigh precision

Researchers from the UK created an “electronic skin” from graphene capsules, each consisting of a solid seaweed/graphene gel layer surrounding a liquid graphene ink core. These capsules can then be used to make biodegradable skin-on devices for measuring vital biomechanical signs with high precision and in real time.

🧠 Artificial Intelligence

Sergey Brin Is Back in the Trenches at Google

The Wall Street Journal reports that Sergey Brin, the Google co-founder who left the company in 2019, is back and working closely with the team behind Google’s new AI model, Gemini, which might be released by the end of this year, according to the WSJ report.

Warcraft fans trick AI article bot with Glorbo hoax

World of Warcraft players suspected a gaming news website was scraping their subreddit to automatically generate news articles about what is going on in WoW. So they laid a trap. One of those players made a story about a new character coming to the game named Glorbo. The suspected bot picked up the fake story and wrote an entire article about it. The bot (most likely powered by some kind of LLM) even directly quoted the player mocking the news website.

Research to merge human brain cells with AI secures national defence funding

Australia’s National Intelligence and Security Discovery Research Grants Program (Australia’s equivalent of DARPA) has granted $600,000 AUD (around $400,000 USD) to Monash University and Cortical Labs to develop AI chips powered by real neurons. The project involves growing around 800,000 brain cells living in a dish, which are then “taught” to perform goal-directed tasks. Last year, Cortical Labs showed that growing neurons inside a chip to power AI works by teaching neurons to play Pong.

Face/Off: Changing the face of movies with deepfakes

Deepfakes can not only spread misinformation but also gaslight people. In this paper, researchers showed a group of 436 people fake movie remakes (such as Will Smith starring as Neo in The Matrix). They discovered an average false memory rate of 49%, with many participants remembering the fake remake as better than the original film. They also found that deepfaked videos were not more or less effective than fake text.

China’s AI Regulations and How They Get Made

If you have ever wanted to know how the Chinese government got where it is now regarding AI regulations, then this comprehensive paper will give you everything you need to know. The paper breaks down the regulations into their component parts—the terminology, key concepts, and specific requirements—and then traces those components to their roots, revealing how Chinese academics, bureaucrats, and journalists shaped the regulations, and what the future trajectory of Chinese AI governance could look like.

🤖 Robotics

Shape-Shifting, Self-Healing Machines Are Among Us

Is there a future where if you drop your phone, it can repair itself? According to researchers studying self-healing electronics, yes, that future is possible. Those devices can also be flexible, shape-shifting and even better for the environment. Researchers have already successfully developed healable transistors, capacitors, and other electronic components using these materials and are now experimenting with more complex devices and designs.

It’s Totally Fine for Humanoid Robots to Fall Down

We seem to like watching robots falling over. Every time a new video of a humanoid robot falling down shows up on the internet, it gets millions of views. In this article, IEEE Spectrum speaks with people working at Agility Robotics and Boston Dynamics about how engineers view falls as a natural part of the learning process and are continuously improving robot capabilities and safety not only for the robot but also for people around it.

Differentiable Optimal Control for Retargeting Motions onto Legged Robots

Robot movement is, well, robotic. That’s good enough for many applications but not good enough for making robots feel alive. Researchers at Disney and ETH Zurich present a way of translating animated movements onto a real robot. This approach allows animators to define how a robot should move in an animated environment and then seamlessly transfer those movements onto a physical robot. With this technology, robots can exhibit more natural and expressive behaviours, something that Disney could use in their parks to improve animatronic experiences.

🧬 Biotechnology

CRISPR Crops Are Here

Could CRISPR feed the world? Proponents of CRISPR foods say yes, CRISPR can do that through crops and animals genetically edited to be hardier, thrive in more places, require fewer pesticides and fertilizers and less water, survive longer on shelves, or make tastier or more nutritious produce. And there are already CRISPR-modified foods available for sale, such as mustard greens edited to taste less bitter, tomatoes edited to contain higher amounts of GABA (γ-aminobutyric acid) or cows modified to be short-haired and more heat-resistant.

H+ Weekly sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who bought me a coffee on Ko-Fi. Thank you for the support!

You can follow H+ Weekly on Twitter and on LinkedIn.

Thank you for reading and see you next Friday!

Hey Conrad, I'm not sure these commitments are very durable. They seem more on the order of cosmetics or optics, a form of AI virtue signalling. Even if the companies (or some fraction of them) act in good faith and make an effort at implementation, the technical problems are formidable, the company bottom line in competitiveness vs. rivals will always have the veto power and, most ominously, the current state of the art of LLMs is such that for the first time in computer science history, we have created something of such complexity that NO ONE understands what is going on "under the hood" of these things. So NO ONE understands what kind of emergent behavior they may display. So finally, color me skeptical.