How AI elevated Nvidia to the $1T club - H+ Weekly - Issue #417

This week - the conversations about AI being an existential threat pick up pace; newly emerging fields in biology; how a tiny transformer created addition; and more!

There is an old business saying that in a gold rush, the ones who sell shovels make the most money.

We are in the AI gold rush. Everyone is asking not if but how to incorporate AI into their businesses. We hear about the big players - Microsoft, Google and OpenAI - announcing new products and services in the news.

These new products and services require enormous amounts of computing power to train the AI models and then to run them. Microsoft, Google and Amazon are investing billions of dollars into their data centres, constantly upgrading servers with the latest and fastest chips available.

Here’s where Nvidia - the shovel seller in the gold rush - comes into the picture.

Nvidia’s story starts 30 years ago, in 1993, in the computer graphics industry.

Both computer graphics and machine learning rely on the same mathematical concept: matrices. The entire pipeline for rendering 3D scenes is built on matrix operations, and graphics cards are highly optimized to perform these operations efficiently.
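To make the shared maths concrete, here is a minimal Python sketch, assuming plain lists in place of a real graphics or machine learning library. The same matrix-vector product both rotates a 3D vertex (the kind of step a rendering pipeline performs) and computes one dense neural-network layer. The rotation angle, weights, bias and inputs are made-up illustrative values.

```python
import math

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(row[i] * v[i] for i in range(len(v))) for row in m]

# Graphics: rotate a 3D vertex 90 degrees about the z-axis,
# using a 4x4 matrix in homogeneous coordinates.
theta = math.pi / 2
rotate_z = [
    [math.cos(theta), -math.sin(theta), 0, 0],
    [math.sin(theta),  math.cos(theta), 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
vertex = [1.0, 0.0, 0.0, 1.0]
rotated = matvec(rotate_z, vertex)  # approximately [0, 1, 0, 1]

# Machine learning: one dense layer y = relu(W x + b) with illustrative weights.
W = [[0.5, -1.0, 2.0],
     [1.0,  1.0, 1.0]]
b = [0.0, -1.0]
x = [1.0, 2.0, 3.0]
y = [max(0.0, z + bias) for z, bias in zip(matvec(W, x), b)]  # [4.5, 5.0]
```

A GPU speeds up both cases the same way: each output element is an independent dot product, so many of them can be computed at once.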

In pursuit of greater computing power, Nvidia engineers implemented parallel computing in their cards and gradually took over more of the rendering pipeline from the CPU. This approach culminated in 1999 with the release of the GeForce 256 - the first GPU (Graphics Processing Unit), which could handle the entire rendering pipeline.

In 2001, Nvidia launched the GeForce3 - the first programmable GPU. The GeForce3 was Nvidia’s first card with programmable shaders - small programs that control the appearance of objects by manipulating their colours, lighting and other visual properties in the scene.

Shaders can transform every pixel and every vertex in the scene. To do this efficiently, a GPU runs shaders in parallel, thousands of them simultaneously. Researchers soon recognized the potential of GPUs to accelerate programs performing complex calculations. In 2004, two Korean researchers showed that it is possible to run neural networks on GPUs, achieving a 20x speedup over a CPU.
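What makes this parallelism possible is that a shader computes each pixel (or vertex) without looking at any other. Below is a minimal Python sketch, assuming a hypothetical `shade()` function that produces a simple colour gradient rather than code in any real shading language. The same image comes out whether pixels are computed one by one or rows are handed to a pool of worker threads.

```python
from concurrent.futures import ThreadPoolExecutor

def shade(x, y, width, height):
    """Hypothetical per-pixel 'shader': computes one pixel's colour from its position only."""
    r = round(255 * x / (width - 1))   # red increases from left to right
    g = round(255 * y / (height - 1))  # green increases from top to bottom
    return (r, g, 128)                 # constant blue channel

def render_serial(width, height):
    """Run the shader once per pixel, one after another."""
    return [[shade(x, y, width, height) for x in range(width)] for y in range(height)]

def render_parallel(width, height, workers=4):
    """Run rows concurrently; no pixel depends on another, so the result is identical."""
    def row(y):
        return [shade(x, y, width, height) for x in range(width)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(row, range(height)))  # map() returns rows in input order

image = render_parallel(4, 3)
```

Python threads here only demonstrate that the order of work does not matter; they do not deliver a real speedup. A GPU exploits the same independence in hardware, running many shader instances at once.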

Nvidia saw an opportunity to sell its products to a new group of customers. In 2006, alongside the GeForce 8 series of graphics cards, Nvidia introduced CUDA (Compute Unified Device Architecture). CUDA opened the parallel data processing pipeline to any kind of computing, not just graphics.

Now, complex calculations could be offloaded to GPUs, which are better suited for running massive parallel operations.
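CUDA programs express this offloading as a kernel: a function that every GPU thread runs on its own piece of the data. Each thread finds its element from its block and thread indices. The sketch below is a serial Python emulation, not real CUDA code. The names mirror CUDA’s built-in `blockIdx.x`, `blockDim.x` and `threadIdx.x` and its `kernel<<<grid, block>>>` launch syntax. SAXPY (out = a*x + y) is just a standard illustrative workload.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, a, x, y, out):
    """Body of a 'kernel': each emulated thread handles exactly one element."""
    i = block_idx * block_dim + thread_idx  # like blockIdx.x * blockDim.x + threadIdx.x
    if i < len(x):                          # guard: the last block may be only partly used
        out[i] = a * x[i] + y[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Serial stand-in for kernel<<<grid_dim, block_dim>>>(...).
    A real GPU would run these (block, thread) pairs concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 1000
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
block_dim = 256
grid_dim = (n + block_dim - 1) // block_dim  # ceil(1000 / 256) = 4 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
```

Because no thread reads another thread’s output, the launcher is free to run them in any order, which is exactly what lets real hardware run them all at once.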

To give you a better sense of how much more optimised GPUs are for parallel computing: in 2006, when CUDA was released, the best CPU on the market, the Intel Core 2 Duo E6700, had 2 cores, while the GeForce 8800 GTX, Nvidia’s first card with CUDA, had 128 cores. Today, the best consumer CPUs on the market have 16 (AMD Ryzen 9 7950X) or 24 (Intel Core i9-13900K) cores. Nvidia’s top consumer GPU, the GeForce RTX 4090, has 16,384 cores.

A big breakthrough for AI came in 2012 when Alex Krizhevsky, Ilya Sutskever (now co-founder and chief scientist at OpenAI) and Geoffrey Hinton (one of the “Godfathers of Deep Learning”) published their historic paper “ImageNet Classification with Deep Convolutional Neural Networks”. The trio leveraged the inexpensive parallel computing power of GPUs to create a state-of-the-art image recognition model, kickstarting the deep learning revolution of the 2010s. They did it with two Nvidia GTX 580 graphics cards.

Since then, Nvidia's products have been at the forefront of AI research.

Nvidia supplies GPUs to AI researchers, so their models are built on Nvidia’s hardware and software. TensorFlow and PyTorch, the most popular deep learning frameworks, rely primarily on CUDA for GPU acceleration. Whenever someone asks for recommendations on components for their machine learning workstation, the answer is almost always to use Nvidia products.

Nvidia understands the power of being the default option and the dominant platform. It also knows how to capitalize on explosive demand for computing power. Whether it was the deep learning revolution of the 2010s or the cryptocurrency bubbles of 2017-2018 and 2021-2022, Nvidia was there.

The current generative AI revolution has created another surge in demand for computing power, and Nvidia is well-positioned to meet it. This surge in demand for AI chips briefly pushed Nvidia’s valuation to $1T, putting it alongside Apple, Microsoft, Saudi Aramco, Alphabet and Amazon, the other members of the $1T club at the time.

AI is here to stay. Time will tell whether Nvidia can solidify its place in the $1T club or whether it will fall like Cisco did after the dot-com bubble.

From H+ Weekly

Humanoid robots: Machines built in our image

The dream of creating humanoid robots - machines built in our image - is not new. Long before the word "robot" was even coined, people told stories of human-like machines. In ancient Greece, Hephaestus, the god of blacksmiths, was said to have created several different humanoid automata in various myths. In ancient China, a story from the 3rd century BCE…

🦾 More than a human

Zapping the brain during sleep helps memories form

Brain stimulation during sleep appears to help with the formation of long-term memories — suggesting a new way to treat people with Alzheimer’s and other diseases affecting memory.

🧠 Artificial Intelligence

Statement on AI Risk

The conversation about AI as an existential risk is picking up pace. The Center for AI Safety published a statement saying that “mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war”. The statement has been signed by hundreds of people, including Demis Hassabis, Sam Altman and Bill Gates, along with many other AI researchers and notable figures.

An early warning system for novel AI risks

DeepMind, in cooperation with other companies and institutions such as OpenAI, Anthropic, the University of Cambridge, the University of Oxford, the University of Toronto and Université de Montréal, has created a framework for evaluating novel threats that might come from advanced AI systems. With this framework, AI developers will be able to identify in advance whether their model has any “dangerous capabilities” and to what extent the model is prone to applying those capabilities to cause harm. The paper describing the framework in detail can be found on arXiv.

UK Prime Minister races to tighten rules for AI amid fears of existential risk

Rishi Sunak and his officials are looking at ways to tighten the UK’s regulation of AI, as industry figures warn that the government’s AI white paper, published just two months ago, is already out of date. Sunak is pushing allies to formulate an international agreement on how to develop AI capabilities, which could even lead to the creation of a new global regulator. Meanwhile, Conservative and Labour MPs are calling on the prime minister to pass a separate bill that could create the UK’s first AI-focused watchdog.

Baidu’s $145M AI fund signals China’s push for AI self-reliance

To grow the Chinese AI startup scene, Baidu’s co-founder and CEO Robin Li announced the launch of a 1 billion yuan ($145 million) fund to back generative AI companies. The fund, which TechCrunch compares to the OpenAI Startup Fund, will invest up to 10 million yuan (approximately $1.4 million) in each early-stage project. Baidu can also use this fund to grow the adoption of Ernie Bot, its answer to ChatGPT. “American developers are building new applications based on ChatGPT or other language models. In China, there will be an increasing number of developers building AI applications using Ernie as their foundation,” Li said.

How a tiny transformer created addition

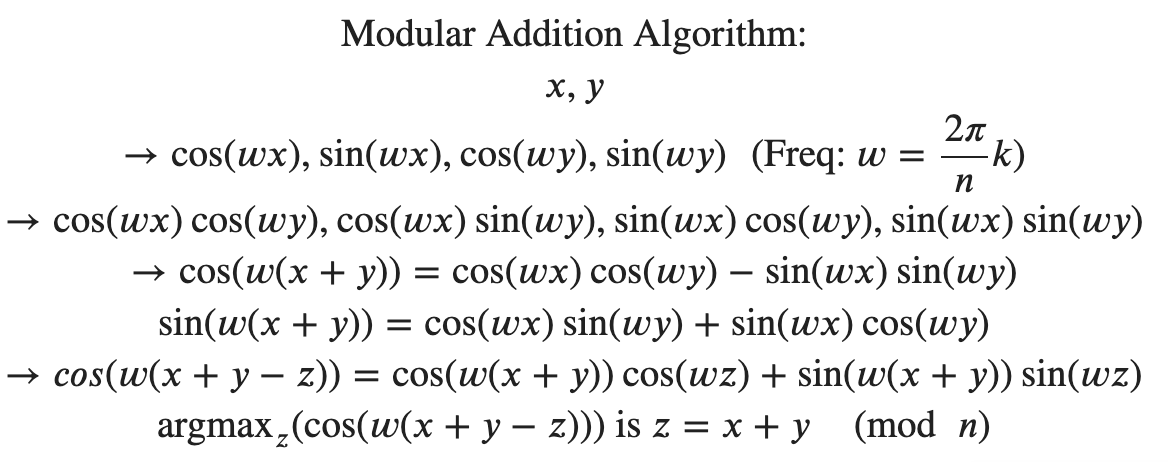

Neel Nanda, a researcher at DeepMind, spent weeks trying to understand how a tiny transformer performs modular addition of two numbers. It is a simple operation we use all the time, for example when adding hours on a 24-hour clock (23:00 plus 5 hours is 4:00, not 28:00). The image above shows the algorithm this tiny transformer created to perform modular addition. As Robert Miles tweeted, this is “one of the only times in history someone has understood how a transformer works”. Next time you use ChatGPT (which is built on transformers), try to imagine what kind of alien logic is happening inside it.
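Nanda’s published analysis describes the learned algorithm as working with sines and cosines rather than digits. Inputs are mapped to rotations at a few key frequencies and combined with trigonometric identities. The answer c is then read off where cos(w(a + b - c)) peaks. The Python sketch below follows that idea under stated assumptions. The modulus p = 113 and the frequencies are illustrative choices here, not the exact values inside the trained network. The real model also computes these quantities with learned weights rather than explicit loops.

```python
import math

def clock_add(hour: int, hours_added: int, modulus: int = 24) -> int:
    """Ordinary modular addition, as on a 24-hour clock."""
    return (hour + hours_added) % modulus

def fourier_mod_add(a: int, b: int, p: int = 113, freqs=(14, 35, 41)) -> int:
    """Compute (a + b) mod p with trig identities, in the spirit of the circuit
    described for the tiny transformer. Assumes p is prime and no k is a multiple
    of p, so the score peaks only at the correct answer."""
    rotations = []
    for k in freqs:
        w = 2 * math.pi * k / p  # one illustrative "key frequency"
        # cos(w(a+b)) and sin(w(a+b)) from the angle-addition identities
        cos_ab = math.cos(w * a) * math.cos(w * b) - math.sin(w * a) * math.sin(w * b)
        sin_ab = math.sin(w * a) * math.cos(w * b) + math.cos(w * a) * math.sin(w * b)
        rotations.append((w, cos_ab, sin_ab))
    # Score each candidate c by summing cos(w(a+b-c)) = cos(w(a+b))cos(wc) + sin(w(a+b))sin(wc);
    # every term equals 1 only when c = (a + b) mod p.
    scores = [
        sum(cos_ab * math.cos(w * c) + sin_ab * math.sin(w * c) for w, cos_ab, sin_ab in rotations)
        for c in range(p)
    ]
    return max(range(p), key=scores.__getitem__)

print(clock_add(23, 5))          # 4
print(fourier_mod_add(100, 50))  # 37, since 150 mod 113 = 37
```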

🤖 Robotics

Robotics investments top $1.63B in April 2023

According to The Robot Report, $1.63 billion was invested in robotics companies in April alone. This brings total robotics funding for 2023 to approximately $3.3 billion: $521 million in January, $620 million in February, $526 million in March and $1.63 billion in April.
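A quick check of the arithmetic, using the monthly figures above in millions of US dollars:

```python
# Monthly robotics funding in 2023, in millions of US dollars (figures from The Robot Report).
monthly_funding_musd = {"January": 521, "February": 620, "March": 526, "April": 1630}

total = sum(monthly_funding_musd.values())           # 3297, i.e. about $3.3 billion
april_share = monthly_funding_musd["April"] / total  # fraction of the year-to-date total

print(total, round(april_share, 3))
```

April alone therefore accounts for roughly half of the year-to-date total.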

Barkour: Benchmarking Animal-level Agility with Quadruped Robots

Boston Dynamics famously used parkour to show what Atlas, its humanoid robot, can do. Researchers from DeepMind propose a similar approach for testing the agility of quadruped robots: Barkour, an obstacle course for legged robots. Inspired by dog agility competitions, Barkour rates robots on how well and how quickly they complete a course full of diverse obstacles. The researchers hope Barkour will help robots achieve animal-level agility.

🧬 Biotechnology

Synthetic Morphology Lets Scientists Create New Life-Forms

Imagine being able to shape living organisms however we want and create completely new life forms, not only at the level of DNA (which is what synthetic biology does) but at higher levels: groups of cells, tissues and beyond. That’s what the emerging discipline of synthetic morphology promises. The goal of synthetic morphology is to understand more about the rules of natural morphogenesis (the development of biological form) and to make useful structures and devices by engineering living tissue for applications in medicine, robotics and beyond.

Quantum Biology Could Revolutionize Our Understanding of How Life Works

Over the past few decades, scientists have made incredible progress in understanding and manipulating biological systems at increasingly small scales, from protein folding to genetic engineering. Eventually, as you go smaller and smaller, you reach the scale where quantum effects become noticeable. At this scale, where biology meets quantum mechanics, the field of quantum biology emerges. This new field could help us better understand how life works and how to control it, and learn from nature how to build better quantum technologies.

H+ Weekly is a free, weekly newsletter with the latest news and articles about AI, robotics, biotech and technologies that blur the line between humans and machines, delivered to your inbox every Friday.

Subscribe to H+ Weekly to support the newsletter under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay for a subscription will support access for all).

A big thank you to my paid subscribers and to my Patreon supporters: whmr, Florian, dux, Eric and Andrew. Thank you for the support!

You can follow H+ Weekly on Twitter and on LinkedIn.

Thank you for reading and see you next Friday!