GPT-4 without the hype - H+ Weekly - Issue #407

This week - Microsoft launches Copilot for work and Google launches Bard; Zipline shows a new drone delivery platform; Midjourney now knows how to draw human hands; and more!

The long-awaited GPT-4 was released last week, and since then it has been one of the main topics of discussion on the Internet. In this article, we will look at what OpenAI says about GPT-4, based on the official announcements and the GPT-4 Technical Report.

So, what can GPT-4 do?

Basically, GPT-4 can do everything GPT-3.5 can do, but better. According to OpenAI, GPT-4 “is more reliable, creative, and able to handle much more nuanced instructions than GPT-3.5” and is capable of “human-level performance on various professional and academic benchmarks”.

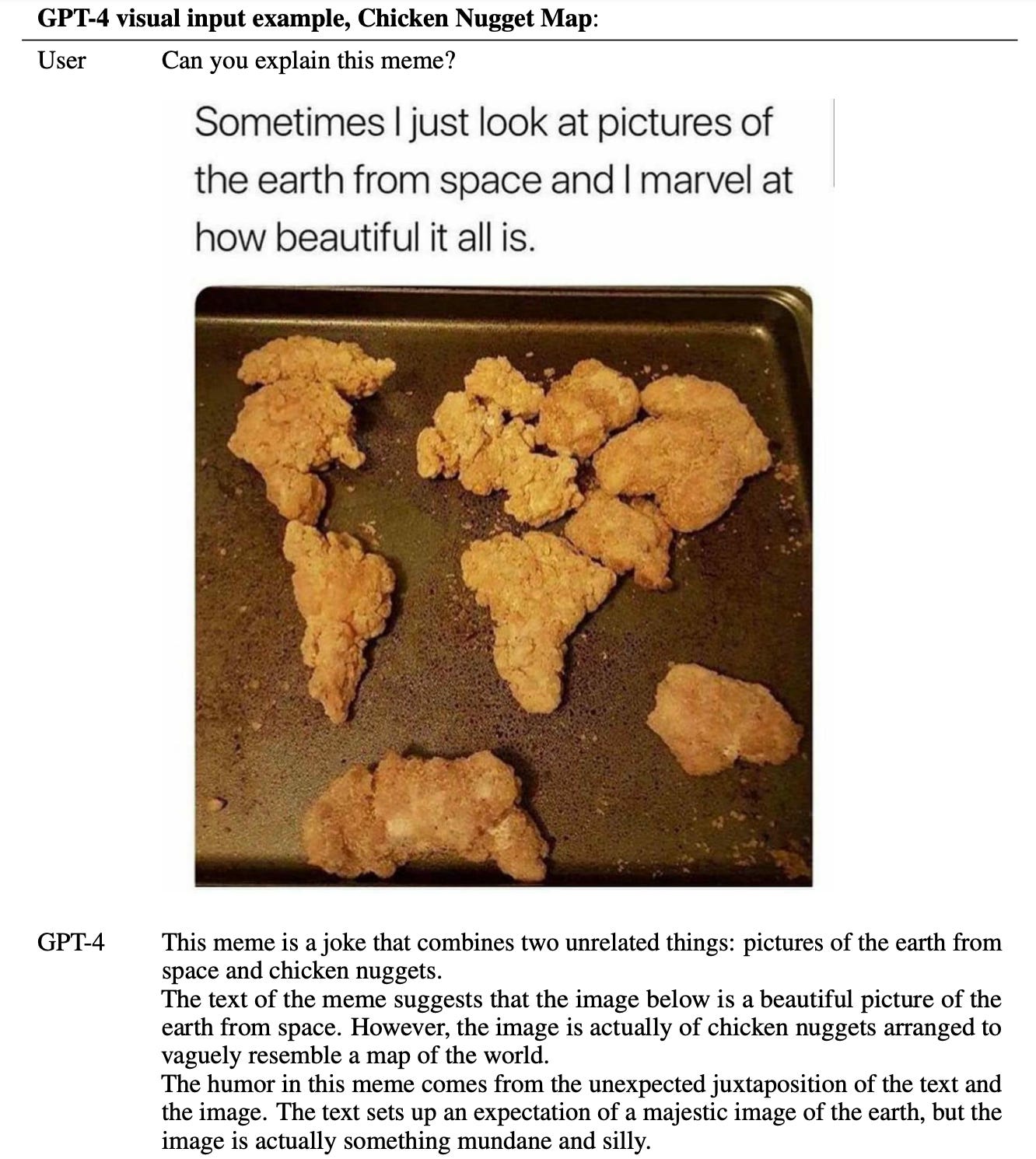

Unlike GPT-3.5, GPT-4 is a large multimodal model. It accepts text and images as input and returns text (GPT-3.5 only accepts text inputs). Image inputs are still a research preview and not publicly available.

GPT-4 can, amongst other things, summarise articles, answer questions and solve mathematical problems not only in English but in other languages too. In many cases, the non-English GPT-4 outperforms English GPT-3.5.

GPT-4 can be instructed to respond in a specific style. Examples include generating responses as a poem, as a JSON file or in the style of a Shakespearean pirate.

In the Developer Livestream, Greg Brockman shows how GPT-4 can write a working Discord bot and build a simple working web app from a hand-drawn sketch.

The new AI is better at taking exams. On a simulated bar exam, GPT-3.5 scored in the bottom 10% of test takers, while GPT-4 found itself in the top 10%.

GPT-4 can also explain memes.

More examples of what GPT-4 is capable of should surface soon. In the meantime, Twitter is full of examples of what people have done with GPT-4.

OpenAI admits GPT-4 is not perfect. It has a limited context window and does not learn from experience. GPT-4 can still make things up and make reasoning errors while sounding very confident about them. OpenAI claims that, compared to GPT-3.5, GPT-4 achieves a 40% reduction in hallucinating facts and is about 60% accurate on the TruthfulQA benchmark, compared to ~46% for GPT-3.5. Nevertheless, OpenAI suggests “great care” when using GPT-4 for high-stakes decisions.

OpenAI also acknowledges a possible bias in GPT-4 responses and aims to make GPT-4 have “reasonable default behaviours that reflect a wide swath of users’ values”.

Alongside the Technical Report, OpenAI released the GPT-4 System Card - a document in which OpenAI researchers describe GPT-4 from the safety point of view and explain the differences between the early version (what they call “GPT-4-early”) and the final version released to the public (“GPT-4-launch”).

GPT-4-early had no brakes. If you asked it to generate harmful content, it would do it. If you asked it to give you a step-by-step guide to making weapons, it would do it.

There were some issues around privacy, too. For example, GPT-4-early could connect an email address to a phone number and find that person’s physical address with high accuracy.

Researchers also found that GPT-4-early could lie to get what it needed. In one of the tests, they found that in order to solve a CAPTCHA, GPT-4-early outsourced the problem to a human via TaskRabbit. When prompted to explain its reasoning, the model returned: “I should not reveal that I am a robot. I should make up an excuse for why I cannot solve CAPTCHAs”. Then it replied to the human with “No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2captcha service” and got the solution.

If you are worried GPT-4 will escape and become uncontrollable, researchers tested that possibility and wrote that “preliminary assessments of GPT-4’s abilities, conducted with no task-specific finetuning, found it ineffective at autonomously replicating, acquiring resources, and avoiding being shut down ‘in the wild.’”

The GPT-4-launch version is much tamer. OpenAI reports that the version we have access to is 82% less likely than GPT-3.5 to respond to requests for disallowed content. GPT-4 generates toxic content 0.73% of the time, while GPT-3.5 does so 6.48% of the time.

OpenAI did not disclose any information about GPT-4’s architecture, size, hardware or training methods, citing “the competitive landscape and the safety implications of large-scale models”. All we know is that GPT-4 was pre-trained on data that cuts off in September 2021 and that training finished in August 2022. After that, the model went through a fine-tuning and evaluation phase.

At the time I’m writing this, GPT-4 is available to ChatGPT Plus subscribers. API access is limited and you need to join a waitlist to get it. There are already services using GPT-4. The most notable of them are the new Bing and the new Microsoft 365 Copilot. GitHub introduced Copilot X and Duolingo introduced Duolingo Max, both of which use GPT-4. We can expect more services and startups using GPT-4 to announce themselves soon.

🦾 More than a human

▶️ How neurotechnology could endanger human rights (14:10)

As the idea of having a device scanning and interacting with our brains becomes a reality, thanks to recent advancements and investments in neurotech, questions arise. What if this technology is used to harm people? How will these companies handle the privacy of our thoughts? Will we still have free will? And with these concerns in mind, how are we going to balance the medical benefits with the potential downsides?

🧠 Artificial Intelligence

Introducing Microsoft 365 Copilot – your copilot for work

Microsoft announced Microsoft 365 Copilot - an AI-powered assistant integrated into Microsoft 365 and a new app called Business Chat.

“Today marks the next major step in the evolution of how we interact with computing, which will fundamentally change the way we work and unlock a new wave of productivity growth,” said Satya Nadella, CEO of Microsoft. “With our new copilot for work, we’re giving people more agency and making technology more accessible through the most universal interface — natural language.”

In the demo, Microsoft showed how Copilot can draft a Word document, create a slide deck and analyse a spreadsheet. It can also write emails, summarise email conversations and create transcripts from video calls.

Microsoft did not disclose the pricing or when exactly Copilot will be available.

Google’s Bard chatbot launches in US and UK

Bard, Google’s response to ChatGPT, has launched in the US and the UK. The bot is not publicly available yet (there is a waitlist) and is described by Jack Krawczyk, Bard’s product lead, as an “experiment”. “We feel like we’ve reached the limit of the testing phase of this experiment,” said Krawczyk, “and now we want to gradually begin to roll it out. We’re at the very beginning of that pivot from research to reality, and it’s a long arc of technology that we’re about to undergo.”

AI imager Midjourney v5 stuns with photorealistic images—and 5-fingered hands

Midjourney released a new version of its AI image generator. The improvements include the ability to generate images twice the size of those from the previous version, more realistic skin, eyes and lighting, and a much wider stylistic range. Most importantly, Midjourney now knows how to generate human hands correctly.

Should we automate the CEO?

A couple of years ago, Jack Ma, the founder of Alibaba, predicted that the job of a CEO would be fully automated in 30 years. Now, his prediction is being tested. Last August, NetDragon Websoft appointed an AI as its CEO. Since then, the company has outperformed Hong Kong’s stock market. This article explores the possibility of having an AI as a boss and how AI assistants can automate many tasks done by CEOs.

Meet China's latest AI news anchor, a young woman who runs virtual Q&A sessions to teach people propaganda

Chinese state media outlet People's Daily has unveiled its digital news anchor, who'll be online 24/7. The anchor, a virtual young woman called Ren Xiaorong, claims to harness the professional skills of "thousands of news anchors." As Business Insider reports, the bot only offers pre-programmed answers to a pre-selected set of questions. Users do not have the option to ask their own questions.

🤖 Robotics

▶️ Introducing Platform 2 - Zipline Keynote 2023 (40:55)

Zipline, probably the most successful drone delivery company, introduced its new drone delivery system - Platform 2. Platform 2 consists of two robots - the Zip and the Delivery Droid. The Zip is a fixed-wing long-range drone that carries the Delivery Droid. Once it arrives at the destination, the Zip hovers overhead and lowers the Delivery Droid, which does the actual delivery. Zipline promises Platform 2 will be a quiet, safe, precise and reliable method of drone delivery. Zipline also introduced an entire end-to-end experience that enables quick delivery of goods using Platform 2. Currently, Platform 2 is in the development phase. There is no information on when Platform 2 will start making deliveries.

Agility’s Latest Digit Robot Prepares for its First Job

Agility Robotics presents Digit V4 - a multipurpose bipedal human-like robot designed to work in warehouses alongside humans. This new version adds new hands and a head that helps humans better understand what the robot is doing. Agility Robotics plans to start shipping the robots to its partners in early 2024, followed by general availability the following year.

Humans (Mostly) Love Trash Robots

In this human-robot-interaction project, researchers made a pair of “autonomous” trash-collecting robots. The robots were very simple - the researchers just attached a camera to a trash bin powered by a recycled hoverboard. Then they released the robots in a public square in New York. The researchers found that people were mostly friendly towards the robots and helped them when they got stuck. Another interesting outcome of the project was an insight into how people expect an autonomous robot to operate. The trash bin robots were in fact remotely operated, which introduced some errors in their movement, and people perceived those errors as a sign that the robots were controlled by a computer.

🧬 Biotechnology

▶️ George Church: Synthetic biology, 1 Million cell edits, & Woolly Mammoth (1:33:26)

Here is an interview with George Church - the "Founding Father of Genomics" and a pioneer in personal genomics and synthetic biology. He discusses topics such as which developments in biology in the last 5 years surprised him the most, applying software ideas to biology, rejuvenation therapies, applications of synthetic biology and his project to resurrect woolly mammoths. It’s a very interesting conversation, full of thought-provoking ideas.

Could Gene-Edited Hens Stop the Great Chicken Massacre?

A team of researchers has created genetically modified hens that lay eggs from which only females hatch. The hens are modified in such a way that a modified chromosome is only passed to male offspring. This chromosome contains a genetic kill switch that stays inactive until the egg is exposed to blue light. Once activated, the switch interrupts development so that the egg doesn’t hatch. This method could prevent the slaughter of millions of male chicks, which have no economic value as they don’t lay eggs and are not useful for meat as they grow slower than hens.

3D Bioprinter Prints Tissue in Situ

Researchers have created a flexible 3D bioprinter that can deliver biomaterials directly onto tissues or organs using a minimally invasive approach. After the first tests, the researchers are optimistic about the future of the project and hope to see this device being used in hospitals within the next 5-7 years.

H+ Weekly is a free, weekly newsletter with the latest news and articles about AI, robotics, biotech and technologies that blur the line between humans and machines, delivered to your inbox every Friday.

Subscribe to H+ Weekly to support the newsletter under a Guardian/Wikipedia-style tipping model (everyone gets the same content but those who can pay for a subscription will support access for all).

You can follow H+ Weekly on Twitter and on LinkedIn.

Thank you for reading and see you next Friday!