Deepfakes: Nothing Is True, Everything Is Permitted

How we got here, how they are used, and what we can do about them

The history of deepfakes starts in 1997 with Video Rewrite, created by Christoph Bregler, Michele Covell, and Malcolm Slaney. It was the first-ever computer program to fully automate the process of facial re-animation.

The next big improvement in quality came in 2001 with the active appearance model.

Nothing much happened in the space until Ian Goodfellow and his colleagues introduced generative adversarial networks (GANs) in 2014. A GAN consists of two neural networks - one generates an image, and the second evaluates it. When the second network rejects an image, the first one learns from that and adjusts itself to make a better image next time. Repeat this process thousands or millions of times, and you get an AI that can generate images almost indistinguishable from real ones.
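To make that generate-and-evaluate loop concrete, here is a minimal toy sketch in plain Python. It is not how real image GANs are built: the "images" are single numbers, the generator is a hypothetical two-parameter line (`a * z + b`), the discriminator is a one-input logistic unit, and the gradients are written out by hand. All names, learning rates and the target distribution are assumptions made for illustration.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function, output in (0, 1).
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=5000, lr=0.01, real_mean=4.0, real_std=0.5, seed=0):
    """Train a 1-D toy GAN; returns ((a, b), (w, c))."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * z + b
    w, c = 0.1, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        x_real = rng.gauss(real_mean, real_std)  # a "real" sample
        z = rng.gauss(0.0, 1.0)                  # random noise
        x_fake = a * z + b                       # generator output

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        # Loss: -log D(x_real) - log(1 - D(x_fake))
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated discriminator
        # rates the fake sample as more "real". Loss: -log D(x_fake)
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w  # d(loss) / d(x_fake)
        a -= lr * grad_x * z
        b -= lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator scale and offset:", a, b)
    print("discriminator weight and bias:", w, c)
```

The offset `b` is the mean of the generated samples, so comparing it to `real_mean` gives a rough sense of whether the generator has moved toward the real data. A discriminator this simple can only tell samples apart by where they sit on the number line, and training may oscillate rather than settle, which is one reason real GANs use much larger networks and many more iterations.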

The AI community took up the idea of GANs and started experimenting with them. In 2016, Face2Face was released. One might mark this as the beginning of modern deepfakes. Face2Face was the first program to allow real-time facial reenactment in videos, transferring one person's expressions onto another person's face. It made waves in the AI community and inspired more research into face swaps and deepfakes.

The general public first learned about deepfakes in 2018 with the infamous Obama deepfake video from BuzzFeed. From there, deepfake technology became better, cheaper and more accessible.

Like every technology, deepfakes can be used for good and for bad.

One good example is how the BBC used deepfakes to hide the identities of Hong Kong protesters in its documentary.

Deepfakes have also found a use in the movie industry. A good example is Disney, which used old-school (pre-GAN) deepfake technology to bring back Peter Cushing (who died in 1994) to "play" Grand Moff Tarkin in Rogue One. The same technique was also used in the last scene of the movie to recreate a young Carrie Fisher as Princess Leia.

And to illustrate how good and accessible GAN-based deepfakes are: just three years after Rogue One was released, a single person was able to create a better deepfake Tarkin than the whole of Industrial Light & Magic.

Deepfakes also found use in the internet’s favourite hobby - making memes.

These days, however, deepfakes are mostly portrayed as a tool for misinformation and abuse, and there are numerous examples of this. 4chan users used a deepfake voice service to make celebrities say racist, transphobic, and violent things. We have had a fake Zelensky calling for surrender and a fake Putin offering peace talks. And a well-known streamer got caught using a service offering deepfaked porn videos of female streamers.

It's not just famous people who are targeted by deepfakes. It is very easy to deepfake someone into revenge porn, put that on the internet and destroy their life and career. All that is needed are photos of the victim. That’s how low the barrier to entry is.

Voices can be deepfaked, too. Microsoft has an AI that can clone any voice from a short, 3-second-long sample. Just one voice note, and someone can pretend to be your friend or family member. And this has already happened. Recently, the news broke of a scam that used a deepfaked voice of someone’s daughter in a fake kidnapping.

There was also a case of a deepfaked voice being used in a $35 million bank heist. And someone even cloned the voices of Drake and The Weeknd to make a new unofficial song (the song was quickly removed from streaming services).

Large language models have been used for impersonation, too. The German magazine Die Aktuelle published an interview with Michael Schumacher - a seven-time F1 world champion who has not been seen in public since his accident in 2013 - that was completely generated by an AI. Schumacher’s family is planning legal action against the magazine.

A big problem is how easy it is to access this technology. You are just one Google search away from creating a deepfake and having the power to destroy someone’s life. And once a video of someone saying or doing things they did not say or do is on the internet, it will have a negative impact on that person’s life.

The question then is, how do we detect deepfakes? The best summary I've found is a paper published in February 2022 analysing different detection methods, from deep-learning-based to blockchain-based. Some of the examined methods report a 98% detection rate but are highly sensitive to the training data. With some tweaks, the detection rate rises to 99% and the sensitivity problem is solved.

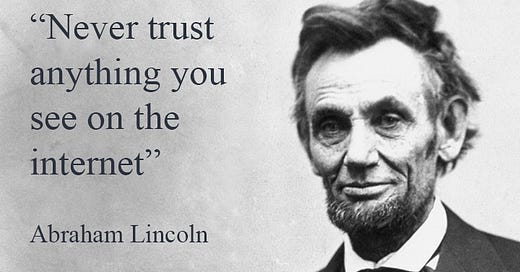

The battle between deepfake generators and deepfake detectors will keep going. What we can do in the meantime is be sceptical about anything we see on the internet, and double-check whether what we see is real.

I wrote a satire story a few months back about a deepfake press conference in which a deepfake Biden hurls all kinds of highly personal insults at Putin and threatens imminent missile launches. It is broadcast to Russia and sets off WWIII. From reading your article, this is an exaggeration and would probably not work, but it still looks to be scarily within our present technological capabilities. An epistemic crisis, a Cat. 5 hurricane of misuse, is bearing down on us.