About the ChatGPT moment for robotics

Things might get very interesting in robotics in 2024 and beyond

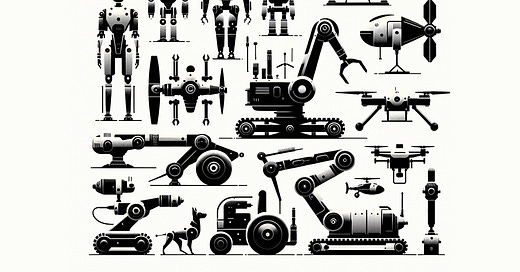

The new year brings a wave of predictions about what might happen in the next 12 months. 2024 is shaping up to be, just like last year, largely dominated by advancements in large language models and the pursuit of the ultimate goal of AI research: the creation of artificial general intelligence. However, we should also pay attention to another field—robotics. Researchers have made some amazing advancements in developing robotic agents and, according to some experts, the field is heading towards its own “ChatGPT moment”.

Let’s examine the recent advancements in robotics and whether the field is indeed gearing up for its breakthrough moment in the coming years.

But first, what is a “ChatGPT moment”?

It might seem like ChatGPT appeared suddenly out of nowhere, but this is not the case. There were years of research and multiple breakthroughs, augmented by constantly improving and larger datasets as well as increasing computing power, that laid the groundwork for ChatGPT.

What OpenAI did with ChatGPT was make its language models widely accessible, allowing anyone to interact with them easily. Before November 2022, people outside OpenAI could engage with GPT models via the OpenAI Playground, but that platform wasn't as accessible or user-friendly as the chat interface. Once more people could interact with ChatGPT and see what it was capable of, they quickly discovered an AI that could write poems and do other tasks as well as humans, if not better. It looked as if science fiction was becoming reality.

What followed the release of ChatGPT was a massive increase in interest in artificial intelligence. Businesses were asking themselves not if but how to incorporate language models into their products or processes. New products and services were created with language models at their core. And the money started to pour in from investors. ChatGPT and AI were among the main topics of conversation in the news last year, for both good and bad reasons.

Therefore, the ChatGPT moment is not a technological breakthrough. It is about how state-of-the-art technology is packaged and presented to the general public. It is about making the technology accessible, useful and easy to use, and making it obvious what it can do. It needs to be a “wow moment” that takes the public by surprise and makes people think that the thing they have seen in sci-fi stories is here.

Now that we know what a ChatGPT moment is, let’s see how it applies to robotics.

Smarter robotic agents

Robotics is one of the main research areas for Google DeepMind and a path to create truly multimodal models - models that not only can see and hear but can also touch and interact with the physical environment. “There's a lot of promise with applying these sort of foundation-type models to robotics, and we’re exploring that heavily,” said Demis Hassabis, CEO of Google DeepMind, in an interview with Wired.

Over the last year, DeepMind released multiple research projects in robotics. First was RoboCat - a self-improving AI agent designed for robotics. RoboCat is based on a multimodal model called Gato (“gato” means “cat” in Spanish, hence the name RoboCat) and can learn various tasks across different robotic arms. It can also improve its skills through self-generated training data.

A month after RoboCat, DeepMind followed up with RT-2 (short for Robotic Transformer 2) - a vision-language-action (VLA) model that learns from both web and robotics data. RT-2 addresses one of the biggest problems in developing robotic agents - the lack of quality training data. Large language models like GPT-4 can be trained on sizeable chunks of the internet, and that is enough for them. It is not enough for robotic agents, which also need data about acting in the physical world. RT-2 tackles this by combining web data with robotics data from its predecessor, RT-1. The result is a robotic agent that has improved generalization abilities and can perform complex tasks involving simple reasoning.

Just a week ago, DeepMind published a blog post introducing three more robotics projects - AutoRT, SARA-RT and RT-Trajectory.

AutoRT builds on RT-1 and RT-2. It combines large language models, a visual language model (VLM), and a robot control model (RT-1 or RT-2) to create a robotic agent that can understand practical human goals. AutoRT uses the VLM to understand its environment and the objects within sight. Next, the language model suggests a list of creative tasks that the robot could carry out, such as “Place the snack onto the countertop”, and then plays the role of decision-maker, selecting an appropriate task for the robot to carry out.
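The describe, propose, select and act loop described above can be sketched in a few lines of Python. This is only an illustration of the data flow, not DeepMind's implementation: every function name here is hypothetical, the models are replaced by hard-coded stubs, and the "avoid the knife" rule is an assumed stand-in for whatever criteria the real decision-maker applies.

```python
from typing import Callable, List, Optional


def stub_vlm(image_id: str) -> List[str]:
    """Hypothetical VLM stand-in: returns the objects 'seen' in the image."""
    return ["snack", "countertop", "knife"]


def stub_llm_propose(objects: List[str]) -> List[str]:
    """Hypothetical LLM stand-in: suggests one task per movable object."""
    return [
        f"Place the {obj} onto the countertop"
        for obj in objects
        if obj != "countertop"
    ]


def stub_llm_select(tasks: List[str]) -> Optional[str]:
    """Hypothetical decision-maker: skips tasks involving a knife (assumed rule)."""
    acceptable = [task for task in tasks if "knife" not in task]
    return acceptable[0] if acceptable else None


def stub_policy(task: str) -> str:
    """Hypothetical control-model stand-in: pretends to carry out the task."""
    return f"executed: {task}"


def autort_style_step(
    image_id: str,
    describe: Callable[[str], List[str]],
    propose: Callable[[List[str]], List[str]],
    select: Callable[[List[str]], Optional[str]],
    act: Callable[[str], str],
) -> Optional[str]:
    """Run one pass: describe the scene, propose tasks, pick one, act on it."""
    objects = describe(image_id)
    tasks = propose(objects)
    chosen = select(tasks)
    return act(chosen) if chosen is not None else None


result = autort_style_step(
    "frame-001", stub_vlm, stub_llm_propose, stub_llm_select, stub_policy
)
print(result)
```

Passing the four components in as plain callables keeps the sketch honest about what it is: the interesting work happens inside the models, and the loop itself is just glue between them.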

Self-Adaptive Robust Attention for Robotics Transformers (SARA-RT) enhances the RT models, making them more efficient.

Last but not least, RT-Trajectory is a model developed to enhance robot learning by overlaying visual trajectories on training videos. It sketches the robot arm's movements in 2D, providing practical visual hints for learning robot-control policies. It bridges the gap between abstract instructions and specific movements, and can also interpret human demonstrations or hand-drawn sketches.
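To make the idea of a 2D trajectory overlay concrete, here is a toy sketch that draws a path onto a small image grid. It is an assumption-laden illustration, not RT-Trajectory's code: the image is a plain list of lists, the waypoints are (row, column) pairs, and `overlay_trajectory` is a hypothetical helper name.

```python
from typing import List, Tuple

Image = List[List[int]]


def overlay_trajectory(
    image: Image, waypoints: List[Tuple[int, int]], mark: int = 9
) -> Image:
    """Return a copy of `image` with straight segments drawn between waypoints."""
    out = [row[:] for row in image]  # leave the original frame untouched
    for (r0, c0), (r1, c1) in zip(waypoints, waypoints[1:]):
        # Sample enough points that no pixel along the segment is skipped.
        steps = max(abs(r1 - r0), abs(c1 - c0), 1)
        for i in range(steps + 1):
            r = round(r0 + (r1 - r0) * i / steps)
            c = round(c0 + (c1 - c0) * i / steps)
            if 0 <= r < len(out) and 0 <= c < len(out[0]):
                out[r][c] = mark
    return out


frame = [[0] * 4 for _ in range(4)]
hinted = overlay_trajectory(frame, [(0, 0), (0, 3), (3, 3)])
for row in hinted:
    print(row)
```

The annotated frame carries the "where should the arm go" hint in the same 2D space as the camera image, which is the intuition behind feeding such sketches to a control policy.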

Nvidia is another big player experimenting with robotic agents and applying language models to robotics with projects such as PerAct, VIMA and RVT. Each of these projects explores how multimodal language models can be applied in robotics.

OpenAI, the poster child of the current AI revolution, is not currently involved in any robotics research projects, though it has been in the past. The only notable result of OpenAI’s research into robotics was Dactyl, a system that trains a robotic hand to manipulate physical objects with high dexterity. However, nothing new has been heard from OpenAI in robotics in years, and it seems the company has abandoned robotics research in favour of focusing on large language models.

Better datasets and virtual playgrounds for robots

Having smart robotic agents is just one piece of a larger puzzle. These agents need high-quality datasets to begin their training. However, there are not that many datasets designed for robotics. Right now, there are over 93,000 datasets available on Hugging Face, ranging from text and images to audio and video. Out of all these datasets, only 12 are in the Robotics category.

There are initiatives to change that. One of them is Open X-Embodiment, launched by Google DeepMind last year, whose goal is to provide a collection of all open-sourced robotic data in the same unified format to advance robotics research.

Another method for training robots is to use virtual environments. Researchers can make a 3D model of their robot (its digital twin) and have it interact with the virtual environment over and over again until it learns the required skills. This can condense years of trial-and-error learning in real life into days, if not hours, in a virtual world. The digital twin of the robot can learn the skill on its own or it can learn from humans. Researchers from Nvidia showed with MimicGen how the latter approach can work. A human gives 10 samples of how a task needs to be completed. From these samples, MimicGen then generates a dataset containing thousands of variations of that task for the agent to learn from. Once the digital twin has learned what it needs to learn, the resulting agent can be uploaded to a real robot for further tests.

It’s all coming together

Today, we are witnessing a convergence of multiple trends in robotics. We have better robotic agents with growing capabilities, along with an increasing quality of robotics datasets, and more sophisticated virtual environments for these agents to train in. The hardware is advancing as well. Engineers and roboticists now have access to powerful and compact computers that can fit inside a robot, enabling the processing of all the data coming from cameras and sensors.

What is happening right now in robotics is similar to where large language models were just a couple of years ago. But can robotics have its own ChatGPT moment two to three years from now? I think it is possible. I’m confident that we will see many breakthroughs but the question is what will be the killer app for robotics, just like ChatGPT was the killer app for large language models. What application of robotics could take the public by surprise and make people think that the sci-fi future is here?

This breakthrough moment for robotics might come in the form of commercial humanoid robots. Many companies currently developing these robots promise to release them within the next 2-3 years. Building a humanoid robot is one of the biggest goals in robotics, similar to how creating true artificial general intelligence is the goal of AI research. The vision of a humanoid robot performing household chores, the robot butler promised for years, could be the ChatGPT moment for robotics. Or it could be a group of humanoid robots entering an assembly line or a warehouse and doing the work nearly as well as humans do.

We have seen some glimpses of that in recent days. Just a few days ago, Brett Adcock of Figure, one of the companies working on commercial humanoid robots, posted a video showing their robot making coffee using a coffee machine. A few days before that, researchers from Stanford University revealed Mobile ALOHA, a mobile robot that can cook dinner and do other household chores.

These two projects did make waves in the tech community, but I don’t see them breaking into general news. They don’t have the “wow factor” that gets everyone outside the tech world talking about them. However, they show what is possible at the convergence of the trends we discussed in this article. Give it some time and we might come to see these projects as stepping stones towards something greater.

The future looks exciting for robotics. The robotic agents are getting smarter and the ecosystem for training these agents is advancing as well. All of these advancements are happening just as the first generation of humanoid robots is around the corner. Time will tell if this is enough to cause the ChatGPT moment in robotics.

Thanks for reading. If you enjoyed this post, please click the ❤️ button or share it.

Humanity Redefined sheds light on the bleeding edge of technology and how advancements in AI, robotics, and biotech can usher in abundance, expand humanity's horizons, and redefine what it means to be human.

A big thank you to my paid subscribers, to my Patrons: whmr, Florian, dux, Eric, Preppikoma and Andrew, and to everyone who supports my work on Ko-Fi. Thank you for the support!
